3 Best Practices That Transform Product Development with Claude Code

Sharing my first-hand experience from using Claude Code as my daily driver.

I jumped into Claude Code about (less than) a month ago, and it has transformed my approach to work. I have applied it across research dives, skill-building sessions, and product prototyping. My productivity has soared because I now complete complex tasks much faster. And as I keep experimenting with its features, I realize how much more it offers, so I decided to document these early three lessons for anyone looking to level up.

I notice a common issue among teams adopting Claude. They often limit it to basic autocomplete functions, where it just fills in code lines or flags simple errors. While that provides minor efficiencies, it underutilizes the tool and keeps workflows stagnant.

Claude truly excels when you empower it to redesign your entire development process (step by step). You treat it as a collaborator that outlines complete features. Sometimes it automates testing and constructs prototypes from start to finish. This evolution frees you from tedious coding to focus on strategic oversight, fundamentally changing how you build products.

Anthropic’s internal teams validate this with concrete data. They reduced research time by 80 percent through these techniques. It turned hour-long efforts into 10- to 20-minute sprints.

You can achieve similar gains by reevaluating your methods.

In this post, I detail three proven practices that accelerate product development. Each stems from solid evidence and guides you toward smarter, quicker outcomes.

I compiled this from analyzing over 50 practitioner examples, including X threads, Reddit conversations, LinkedIn posts, and Anthropic’s core documentation. Give them a try, and watch your results improve immediately.

Best Practice #1: Master Project Context with Strategic claude.md Files

I see CLAUDE.md files as Claude’s core memory system. You drop these simple Markdown files into your project folders, and Claude pulls them in automatically at startup. This loads key instructions and context so you don't have to repeat yourself every time.

It matters because projects grow complex quickly. Without a steady context, Claude drifts, which leads to inconsistent code and wasted time on fixes.

For real-world proof, Anthropic’s teams rely on this heavily. They document bash commands, style guides, and workflows in CLAUDE.md. As they note in their guide,

"At Anthropic, we occasionally run CLAUDE.md files through the prompt improver and often tune instructions (e.g., adding emphasis with ‘IMPORTANT’ or ‘YOU MUST’) to improve adherence." – Claude Code: Best practices for agentic coding

In my case, as I was transitioning from an LLM researcher into a product guy, onboarding took three days. I could easily get an idea of the code, which was based on TypeScript. I understood where the core functions were, which components were used to make a certain call, and so forth. In all this, I used my research skills to understand what was happening.

But most of the jump-start code instruction was written in the CLAUDE.md file which made learning easy.

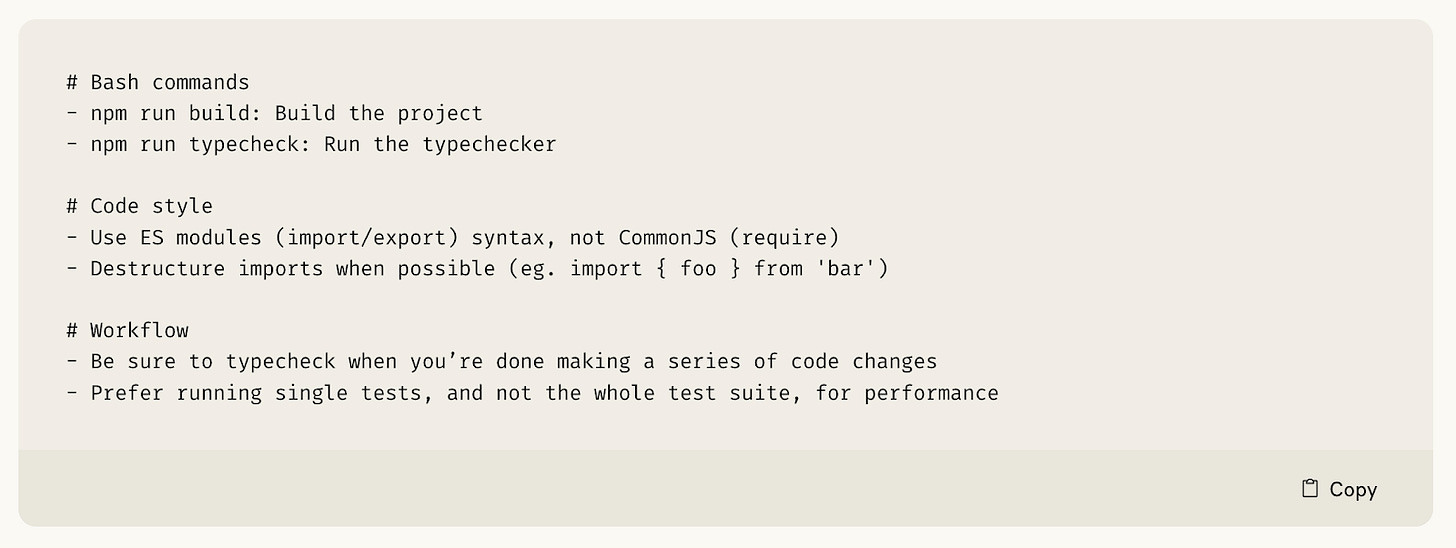

To implement this, start with a root CLAUDE.md in your repo. Here’s a basic structure:

Here are three quick wins to get started:

List frequent commands to speed up terminal work and reduce errors.

Add team preferences for consistent styling across all contributions.

Note big decisions to guide Claude's reasoning on architecture.

Initially, we made the mistake of overloading our CLAUDE.md with every detail. These buried important instructions caused Claude to ignore key rules. The solution came when we trimmed it to essentials and tested what Claude followed best. We broke it into sections like overview, styles, and commands for clarity.

Keep it minimal and effective. Avoid context overloading.

Check out Anthropic’s claude.md documentation.

Best Practice #2: Structure Autonomous Workflows for Complex Development

Claude Code stands out because it goes beyond assisted coding, where you prompt for snippets or fixes. Instead, it enables autonomous workflows. Here, Claude acts as an agent that explores codebases, plans steps, and implements changes with minimal input. This suits complex development, like building features in large repos. You shift from typing code to reviewing outputs.

Anthropic's Security Engineering team shows the power. They use Claude for infrastructure debugging, cutting time from 10-15 minutes of manual scanning to about 5 minutes.

In my project, I also saw productivity gains. When I implemented the three-phase workflow, the bug rate decreased by 10-15 percent over two sprints, as Claude caught issues early.

To set this up, follow a three-phase process:

Exploration: Let Claude scan the codebase with tools like grep or glob to build understanding. Start by using the following prompts:

"Please generate a complete tree-like hierarchy of the entire repository, showing all directories and subdirectories, and including every .tsx file. The structure should start from the project root and expand down to the final files, formatted in a clear, indented tree view."

“Please analyze the repository and trace the dependency flow starting from app.tsx. Show the hierarchy of imported modules and functions in the order they are called or used. For each import (e.g., A, B, C), break down what components (classes, functions, or methods) are defined inside, and recursively expand their imports as well. Present the output as a clear tree-like structure that illustrates how the codebase connects together, with app.tsx at the top”

Planning: Instruct it to brainstorm ideas without coding yet, then review the plan.

Implementation: Switch to execution, using Opus for tough reasoning and Sonnet for routine tasks.

Manage context technically to avoid token bloat. LLMs resend full history each time, so costs rise. Use `/clear` for unrelated tasks to reset. For ongoing work, `/compact` summarizes chats at 50 percent limit, preserving key details in a new session.

One pitfall hit us hard. We let Claude run fully autonomous on ambiguous tasks, leading to off-track outputs.

The fix? Don’t leave your screens.

The thing about Claude Code is that you can always interrupt the process and resume from where you left. So if you see that the process is taking too long or Claude has drifted away, then interrupt it immediately.

Add intermittent checks, like escape to pause and redirect. This approach doesn’t work well for ultra-secure code where every line needs a human audit.

Again, read this documentation: Anthropic engineering blog.

Best Practice #3: Create Design-to-Code Pipelines for Rapid Prototyping

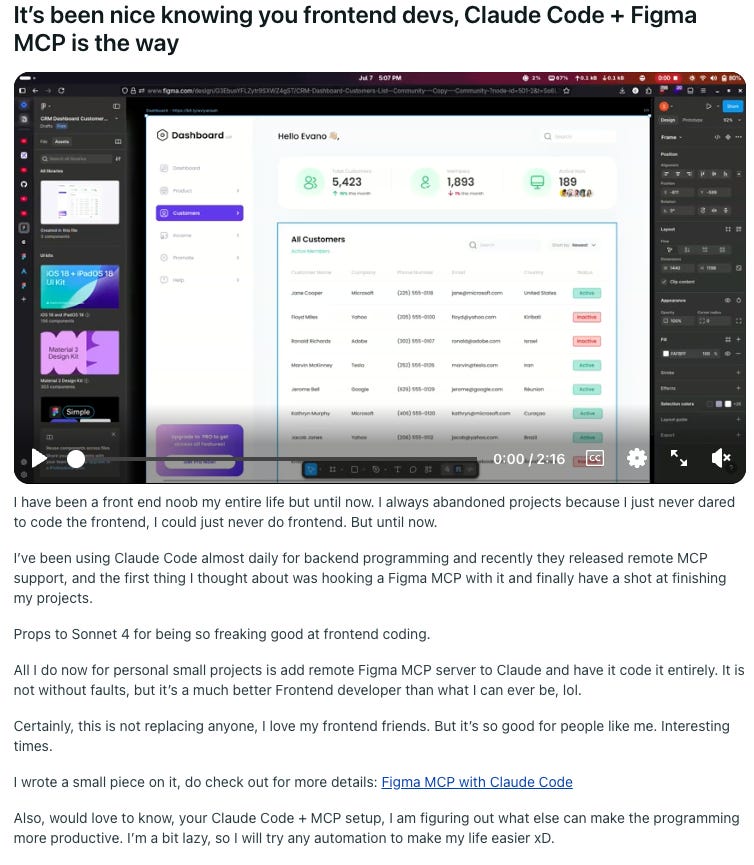

Designers sketch ideas in Figma. Engineers then rebuild them in code.

This handoff slows everything down.

Teams lose weeks translating visuals into working prototypes. Claude Code changes that with design-to-code pipelines. It pulls directly from Figma and generates functional code fast.

Anthropic’s Product Design team feeds Figma files into Claude Code. They set up autonomous loops to write and refine prototypes. Their Growth Marketing team reports a 10x increase in creative output through automated generation and Figma integration.

Well, I haven’t had a chance to work with Figma yet, but I felt it was necessary to put this topic in this blog. So I read through some Reddit posts, community tweets, documentation, and reports, and wrote this section.

As for my findings, for the technical side, start with screenshot-to-code. Claude Code, although it is a terminal-based tool, is still multimodal. You can copy and paste the image, or even put the file source into Claude Code.

You can provide Claude Code a Figma mock via copy-paste or file path. Ask it to implement, take a screenshot of the results, and iterate 2-3 times for accuracy.

Check out these resources for better hands-on implementation:

This works best for prototypes, not production apps, because Claude struggles with updating existing components or handling multiple frames without extra steps.

Bonus: Deploy Quality Assurance Through Automated Review Cycles

As teams grow, code reviews become a choke point. Humans can’t scale to check every change without delays or oversights. Automated cycles with Claude Code solve this by embedding AI checks early and often. You catch issues before they hit production.

Anthropic’s Security Engineering team automates PR analysis with Claude. They feed Terraform plans into it for quick security scans, slashing approval wait times.

Their Data Science team transformed TDD by shifting from throwaway notebooks to persistent React dashboards, gaining 2-4x speed in routine tasks.

For my personal research on Reinforcement Learning, I replicate the same approach. I built a parallel verification architecture. One Claude instance writes code, while another reviews it for bugs, style, and tests its output. This dual setup runs in isolation to avoid bias.

Set it up with GitHub Actions:

Install Claude SDK via npm.

Add a workflow YAML that @Claude on PRs to trigger reviews.

Configure claude.md for test rules and linting.

Implementing dual-Claude review reduced the buy percentage significantly.

IMO, don't rely solely on AI review for sensitive security logic, where human expertise spots subtle risks.

My Experience So Far

My journey with Claude Code started after May, but I have been extensively using it for almost all my work. Claude Code is a great example of Human-AI collaboration. It is a collaborative partner that allows you to iterate over your product and ideas more efficiently and creatively.

When you have a lot of command over domain expertise, you can be fluent in what you want. When you have a sound, creative mind and curiosity to try new things, you can be fluent in how you want.

Both of these elements will make you explore tools like Claude Code to your benefit. And the more you spend time with it, you will find out what works best for you and what does not. These are some things that worked for me and the platform that we are building.

If you like this blog, then do check out what we are building, Adaline, the single platform to iterate, evaluate, deploy, and monitor your LLMs. If you are working with LLMs, agents, MCPs, RAGs, building products with an application layer in LLMs, then you should check out Adaline.