Agentic AI Workflow for Product Builders

A brief case study on agentic AI workflows for building great products.

Understanding the Transformation

When I open community forums such as Reddit, I keep seeing the same pattern in product teams. They adopt AI tools with great fanfare, run pilots that show promise, then hit a wall when trying to scale. Why does this keep happening?

What I’ve learned from working with product leaders and reading reports and forums is that excitement often outpaces real impact. Teams quickly incorporate these AI tools, but results fall short.

Research shows 78% of companies now use generative AI in at least one business function. Yet 80% report no material earnings impact from these efforts. With all the talk around LLMs such as the Grok-4 variants (especially Heavy, which uses multiple agents), Claude Opus, and GPT-5 Pro, why are most teams not getting value?

This paradox raises a key question. How can adoption be so high while value stays so low?

So what's really going on here?

I've observed three main failure modes:

Horizontal tools, like basic chat interfaces, deliver quick wins but fail to scale meaningful impact across operations.

Vertical use cases, tailored to specific industries, excite teams, yet 90% remain stuck in pilot mode and never reach production.

Lack of mature strategies leaves most organizations scrambling; only 1% view their gen AI approaches as fully integrated and effective.

These issues stem from treating AI as a bolt-on feature rather than a core driver of transformation. Data confirms most companies haven’t rewired processes to capture true value.

I get it. AI is moving fast, and it is hard to keep up. Most of us are still figuring out how to use reasoning models properly, and the agentic era is already here.

In the agentic era, AI doesn’t just respond to prompts. Think of it as a proactive partner that plans, remembers context, and executes tasks autonomously, like a virtual collaborator handling complex product development steps. Rather than waiting for you to ask ChatGPT or Claude for code suggestions, agentic systems build entire features by integrating tools and adapting in real time.

But here’s what most teams miss. True transformation requires empowering people alongside the tech. This means fostering a superagency where humans and AI work in alignment.

Once we are aligned with AI and AI is aligned with us, we can explore the core components that product teams need to master next.

Core Components for Product Teams

After studying successful implementations, I’ve identified four components that separate winners from the 40% who fail. Let me walk you through what actually works.

Here’s what surprised me about successful teams. They focus on building robust foundations early.

Gartner predicts that over 40% of agentic AI projects will be canceled by 2027 due to costs and unclear value. So what can we do differently?

Successful teams invest wisely. They avoid hype and chase real ROI. Take GPT-5: yes, it is cheaper and gets the job done, but I think we can all agree it was hyped beyond measure.

I’ve observed four foundational elements that drive success:

Build an orchestration layer to coordinate multiple agents, like a project manager directing a team.

Create persistent memory systems to maintain context, similar to a shared team notebook that remembers past decisions. This prevents agents from starting over each time. Something like the CLAUDE.md file that Claude Code reads.

Develop tool integration frameworks for system connectivity, acting as connectors to your existing software. Think of it as giving agents access to your company’s toolkit for real work. Think of MCP (Model Context Protocol) servers.

Establish human oversight mechanisms for governance, like safety checks in a factory line. This ensures decisions align with business rules and catches issues early. One thing I like about the deep research tools from OpenAI and Google DeepMind, and even Claude Code, is that they check with the user before they start their research or change an existing codebase.

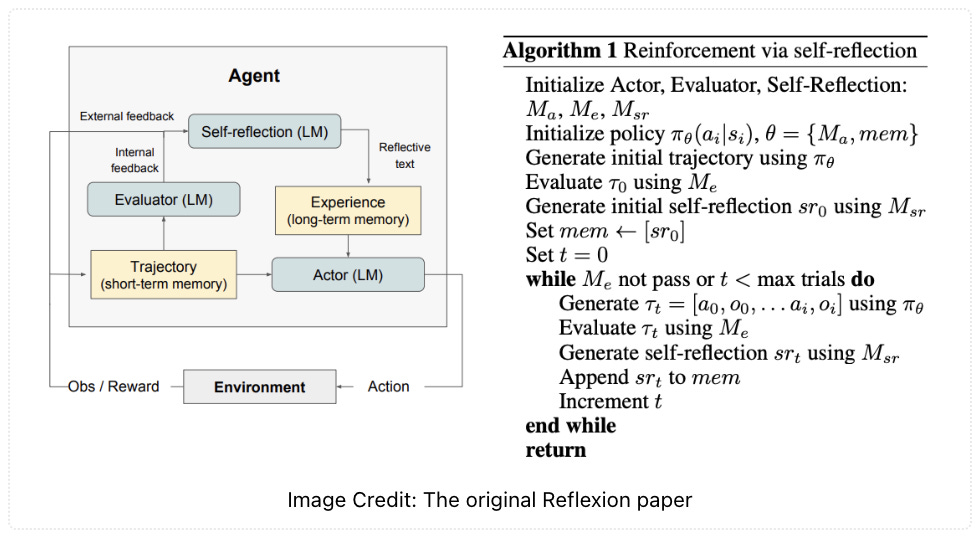
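To make the four elements concrete, here is a minimal sketch of how they could fit together. Everything in it is an assumption for illustration: the `Memory` and `Orchestrator` classes, the `approve` callback, and the tool names are hypothetical stand-ins, not any vendor's API. Real systems would call a model and real tools where this one calls plain functions.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List, Set, Tuple


class Memory:
    """Persistent memory: notes are stored in a JSON file and reloaded on start."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.notes: List[str] = json.loads(path.read_text()) if path.exists() else []

    def remember(self, note: str) -> None:
        self.notes.append(note)
        self.path.write_text(json.dumps(self.notes))


class Orchestrator:
    """Runs a plan step by step, routing each step to a registered tool."""

    def __init__(self, memory: Memory, approve: Callable[[str], bool]) -> None:
        self.memory = memory
        self.approve = approve  # human oversight hook (hypothetical)
        self.tools: Dict[str, Callable[[str], str]] = {}
        self.risky: Set[str] = set()

    def register_tool(self, name: str, fn: Callable[[str], str], risky: bool = False) -> None:
        """Tool integration: expose an existing function to the agent by name."""
        self.tools[name] = fn
        if risky:
            self.risky.add(name)

    def run(self, plan: List[Tuple[str, str]]) -> List[str]:
        results = []
        for tool_name, arg in plan:
            # Risky tools only run if the human approves the described action.
            if tool_name in self.risky and not self.approve(f"{tool_name}({arg})"):
                result = f"skipped {tool_name}: not approved"
            else:
                result = self.tools[tool_name](arg)
            self.memory.remember(result)
            results.append(result)
        return results


def demo() -> Tuple[List[str], List[str]]:
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "memory.json"
        # The reviewer in this demo rejects every risky action.
        orch = Orchestrator(Memory(path), approve=lambda action: False)
        orch.register_tool("search_docs", lambda q: f"found notes on {q}")
        orch.register_tool("deploy", lambda target: f"deployed to {target}", risky=True)
        results = orch.run([("search_docs", "pricing"), ("deploy", "production")])
        # A fresh Memory on the same file sees what the earlier run recorded.
        return results, Memory(path).notes
```

Calling `demo()` runs the search step, skips the unapproved deploy, and shows that a second `Memory` instance picks up both notes from disk. In practice the `approve` callback would prompt a person rather than return a fixed answer.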

Beyond these foundations, I notice three workflow patterns that work consistently:

Sequential workflows for predictable processes, where agents handle steps one by one. Incremental iteration is more likely to succeed than a single comprehensive modification.

Parallel execution for diverse analysis, running multiple agents at once to gather insights quickly. It speeds up market research by merging results efficiently. Grok-4 heavy comes to mind for this example. Terrific product.

Reflective loops for self-improvement, where agents review their own work and adjust. This boosts accuracy over time, much like iterative feedback in agile teams.
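The three patterns can be sketched in a few lines each. This is a simplified illustration, assuming an "agent" is just a function from text to text. The `Agent` type and the function names are hypothetical, and a real agent would wrap a model call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Assumption: an agent is any callable that takes text and returns text.
Agent = Callable[[str], str]


def run_sequential(agents: List[Agent], task: str) -> str:
    """Sequential workflow: each agent works on the previous agent's output."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def run_parallel(agents: List[Agent], task: str) -> List[str]:
    """Parallel execution: all agents get the same task; results keep agent order."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        return list(pool.map(lambda agent: agent(task), agents))


def run_reflective(
    draft: Agent,
    critique: Agent,
    revise: Callable[[str, str], str],
    task: str,
    max_rounds: int = 3,
) -> str:
    """Reflective loop: critique and revise until the critic has no feedback."""
    output = draft(task)
    for _ in range(max_rounds):
        feedback = critique(output)
        if not feedback:  # an empty string means the critic is satisfied
            break
        output = revise(output, feedback)
    return output
```

For example, `run_sequential([outline, write], "spec")` would pass the outline agent's result into the writing agent, while `run_parallel` suits independent analyses whose results get merged afterwards. The `max_rounds` cap keeps a reflective loop from running indefinitely when the critic is never satisfied.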

According to Gartner, only about 130 of the thousands of vendors offer genuine agentic capabilities, and 33% of enterprise software will include agentic AI by 2028. Many projects fail because of misapplied hype. The teams that get this right see 15% of daily decisions become autonomous by then.

They start small, measure progress, and scale with confidence.

Claude Code as Your Development Partner

Over the last few weeks, I have been using Claude Code regularly and sharing my views and experience. With its rapid adoption, I'm seeing that it has fundamentally changed the development game. Let me show you why this matters.

From my research and experience, I found that the real shift comes from treating Claude as a true partner, not just a tool. Teams move from manual grinding to collaborative flows. I've studied Anthropic’s own use cases closely.

Their product engineering group calls it the "first stop" for any coding task, slashing context-gathering time.

Security engineers debug incidents three times faster by feeding stack traces directly to Claude Code. This isn't hype. It rewires daily work.

One story that stuck with me involves developers at companies like Ramp and Intercom.

The numbers tell the story. Claude Code's revenue has jumped significantly since Claude 4 launched in May 2025.

Here's how I recommend teams approach this:

Start with development assistance, like code suggestions in your terminal.

Gradually expand to autonomous workflows: start by editing a single component, then move on to multi-file edits and PR creation.

Add features one at a time. Slowly.

Learn how Claude Code works, for example how it switches between models.

Configure role-based access to control spending and usage.

Establish oversight guidelines using analytics dashboards for metrics like accept rates.

When it comes to cost, it starts at $17 per month for individuals, but the value multiplies quickly.

Ask yourself these three questions.

Does your team treat AI as a partner or plugin?

Can you automate routine pipelines today?

What's your oversight plan?

Answer honestly, and you'll spot the gaps.

Measuring Business Value and Managing Risk

How do I know agentic workflows are not just expensive hype? Here is what the data actually shows.

I have analyzed the results from companies that got this right. They see real growth and value.

Fujitsu built an AI agent for sales automation. It made proposal creation 67% more productive.

JM Family Enterprises created a multi-agent tool for software development. It saved 40% time for business analysts and 60% for quality assurance.

These cases prove agentic workflows deliver when focused on high-impact areas.

Based on what I am seeing, here is the investment reality.

Productivity gains of 40-67% show up across documented cases, such as sales and development tasks.

Time savings turn weeks into days or hours into minutes. SPAR, for example, saved 715 hours, equal to about 89 workdays.

Resource optimization frees up FTE-equivalent capacity, with SPAR gaining four full-time roles' worth.

Revenue rises by more than 5% for 19% of leaders using gen AI. McKinsey sees potential for billions in value through scaled implementations.
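The hours-to-workdays conversion is simple arithmetic, and it is worth doing for your own numbers. A small sketch, assuming an 8-hour workday, which is my assumption and the figure that matches the 89 workdays quoted for SPAR:

```python
def hours_to_workdays(hours: float, hours_per_day: float = 8.0) -> float:
    """Convert saved hours into workdays; the 8-hour day is an assumption."""
    return hours / hours_per_day


workdays = hours_to_workdays(715)
print(f"{workdays:.1f} workdays")  # 715 / 8 = 89.375, printed as 89.4
```

Converting hours into full-time roles needs one more input: the period over which the hours were saved. That period is not stated here, so the sketch stops at workdays.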

Now, about that 40% failure rate. Many projects flop from poor focus. This is what I recommend.

Start with well-defined, high-value processes to prove quick wins.

Implement gradual autonomy increases, keeping humans in the loop.

Focus on augmentation over replacement to build trust.

Establish clear business outcome metrics, like time saved or revenue lift.

If you are evaluating this technology, start here.

What processes take more than four hours weekly per person?

Where do you have inconsistent quality issues?

Which workflows require constant context switching?
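One way to turn these three questions into a shortlist is a simple score. The sketch below is illustrative only: the `Process` fields, the one-point-per-question scoring, and the example processes are assumptions, not a validated method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Process:
    name: str
    weekly_hours_per_person: float
    inconsistent_quality: bool
    constant_context_switching: bool


def score(process: Process) -> int:
    """One point for each screening question the process answers 'yes' to."""
    return (
        int(process.weekly_hours_per_person > 4)
        + int(process.inconsistent_quality)
        + int(process.constant_context_switching)
    )


def shortlist(processes: List[Process]) -> List[str]:
    """Names of processes with at least one 'yes', highest score first."""
    candidates = [p for p in processes if score(p) > 0]
    return [p.name for p in sorted(candidates, key=score, reverse=True)]


# Hypothetical examples, not taken from any case study.
candidates = shortlist([
    Process("release notes", 5, True, False),
    Process("standup scheduling", 1, False, False),
    Process("bug triage", 6, True, True),
])
```

Here `candidates` puts bug triage ahead of release notes and drops standup scheduling. Equal weights are the simplest choice, so adjust them if one question matters more for your team.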

The organizations that move thoughtfully capture lasting value. They measure early and adjust often.

Ending Note

As you can see, agentic workflows are essential for product builders. But if you treat them as a replacement for people, you will make errors and panic. I get that AI isn’t perfect, and that is where human experience and expertise come in. Whether you pick Grok-4 Heavy, Claude Opus, GPT-5, or whatever the Meta Superintelligence team is cooking, everything boils down to:

Knowing your vision, product, and a clear path of what you want.

Starting small (maybe even going line by line) and learning how the agent behaves: where it succeeds and where it fails.

Scaling up gradually.

Every testimonial that you see showcased on a branded website is the result of experimentation and of finding a spot where the agent fits in. Experimentation takes time. Learning takes time. Scaling takes time.

All things take time. So be patient and take time to learn and implement agentic tools in simple features. A great product needs regular iterations with mindfulness and grace.