Beyond Human Code: How Evolutionary Algorithms like AlphaEvolve Will Shape Next-Gen Apps

Why product leaders and AI engineers should pay attention to LLM-powered code evolution.

What Is AlphaEvolve and Why Does It Matter for Tomorrow’s Apps

On May 14, 2025, Google DeepMind announced AlphaEvolve, a system in which an evolutionary algorithm performs the search while LLMs guide it by proposing candidate solutions.

But AlphaEvolve isn’t just another AI optimization tool. Unlike reinforcement learning (RL) systems like AlphaTensor—which output neural network policies—AlphaEvolve generates actual code.

In other words, RL systems don’t return readable instructions. Instead, they return a policy, which is a set of learned parameters inside a neural network. When given an input, that policy tells the model what to do, like “route this task to that server” or “apply this matrix operation here.” It might work well, even better than human-written logic. But you can’t see why it works. You can’t inspect its logic, trace its reasoning, or debug it when it fails.

It’s a black box.

AlphaEvolve flips that. It’s a coding agent. It outputs complete, human-readable programs—written in C++, Python, Verilog, whatever the task demands. You can open the file, read the logic, and know what’s happening.

It outputs code that runs. Code that can be debugged. Code that engineers can reason about.

That’s a fundamental shift.

It means engineers don’t have to reverse-engineer a black box when something breaks—they can read the function, see the diff, and fix it. For practical systems like data center scheduling or hardware design, that interpretability isn’t optional; it’s table stakes. AlphaEvolve was built with that in mind.

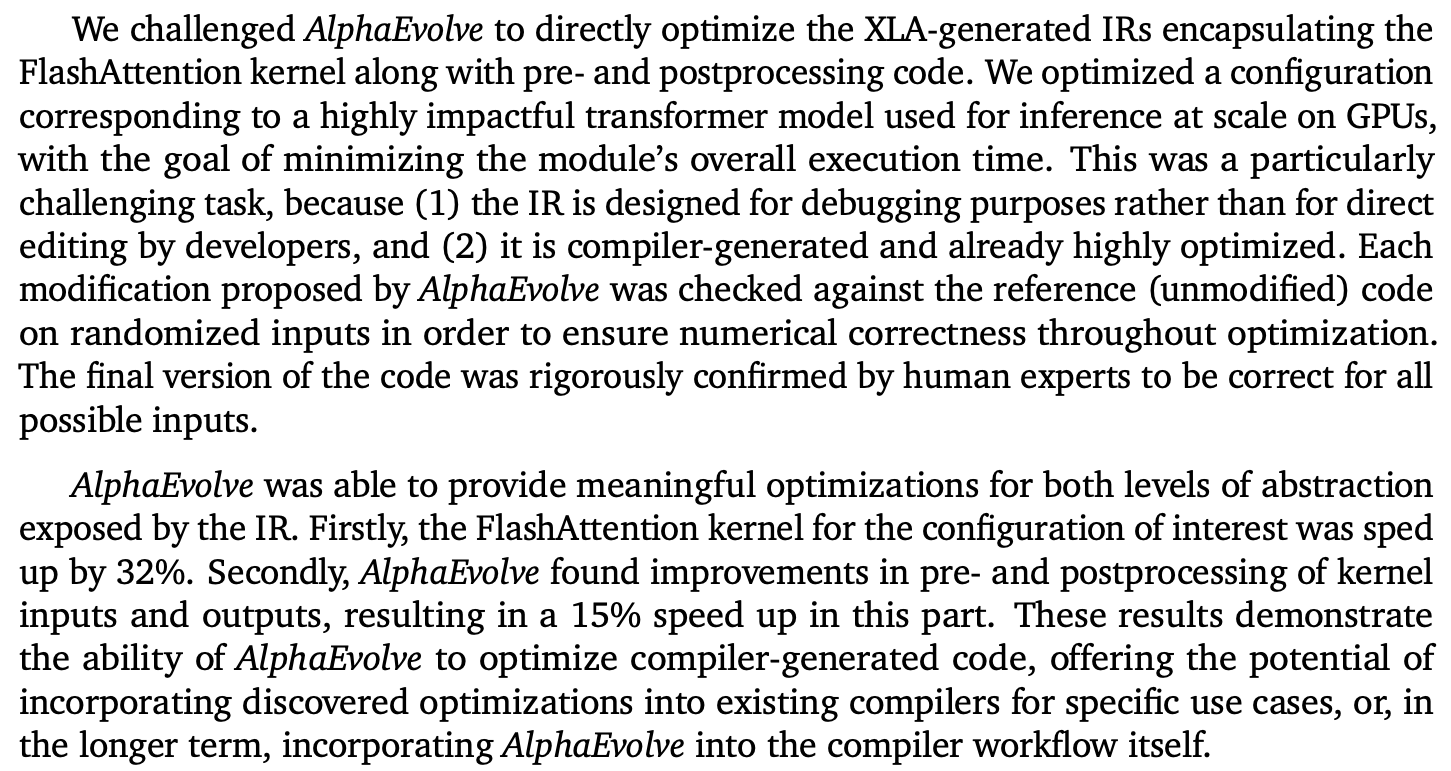

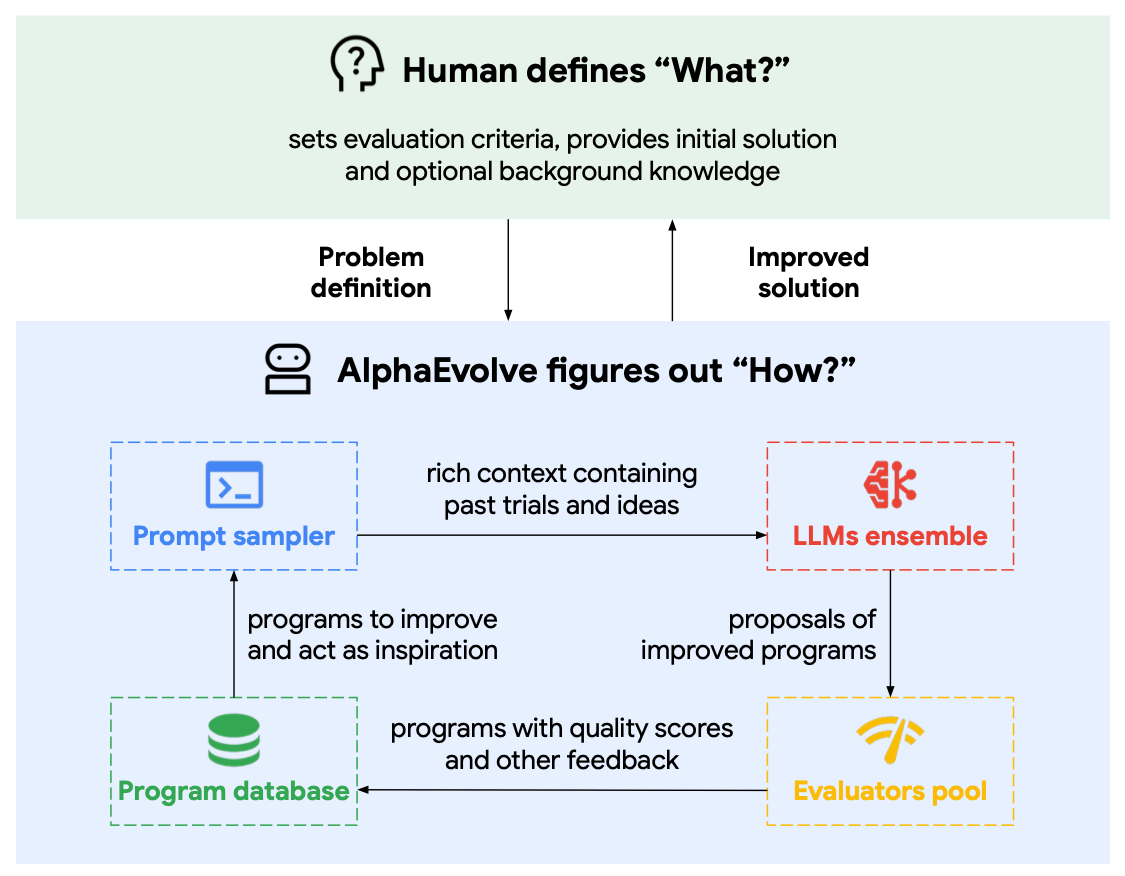

AlphaEvolve combines powerful LLMs with an evolutionary loop: generate variations, test them automatically, and keep what works.
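The loop described above can be sketched in a few lines of Python. This is a toy illustration, not AlphaEvolve’s implementation: `propose_variation` is a hypothetical stand-in for the LLM step (here it only nudges a number at random), and `evaluate` is an assumed toy objective chosen so the example runs on its own.

```python
import random


def evaluate(candidate):
    """Automatic scorer: higher is better (toy objective, assumed for illustration)."""
    target = [3.0, -1.0, 2.0]
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))


def propose_variation(parent, rng):
    """Hypothetical stand-in for the LLM step: return a modified copy of the parent."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 0.5)
    return child


def evolve(initial, generations=200, population_size=8, seed=0):
    """Generate variations, test them automatically, and keep what works."""
    rng = random.Random(seed)
    population = [(evaluate(initial), initial)]
    for _ in range(generations):
        _, parent = rng.choice(population)            # pick a surviving candidate
        child = propose_variation(parent, rng)        # generate a variation
        population.append((evaluate(child), child))   # test it automatically
        population.sort(key=lambda pair: pair[0], reverse=True)
        population = population[:population_size]     # keep what works
    return population[0]


best_score, best_candidate = evolve([0.0, 0.0, 0.0])
print(best_score, best_candidate)
```

In the real system the candidates are programs rather than lists of numbers, but the shape of the loop is the same: propose, score, select, repeat.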

Three Ways AlphaEvolve Will Change Your Product Roadmap

First, AlphaEvolve shortens your innovation cycle.

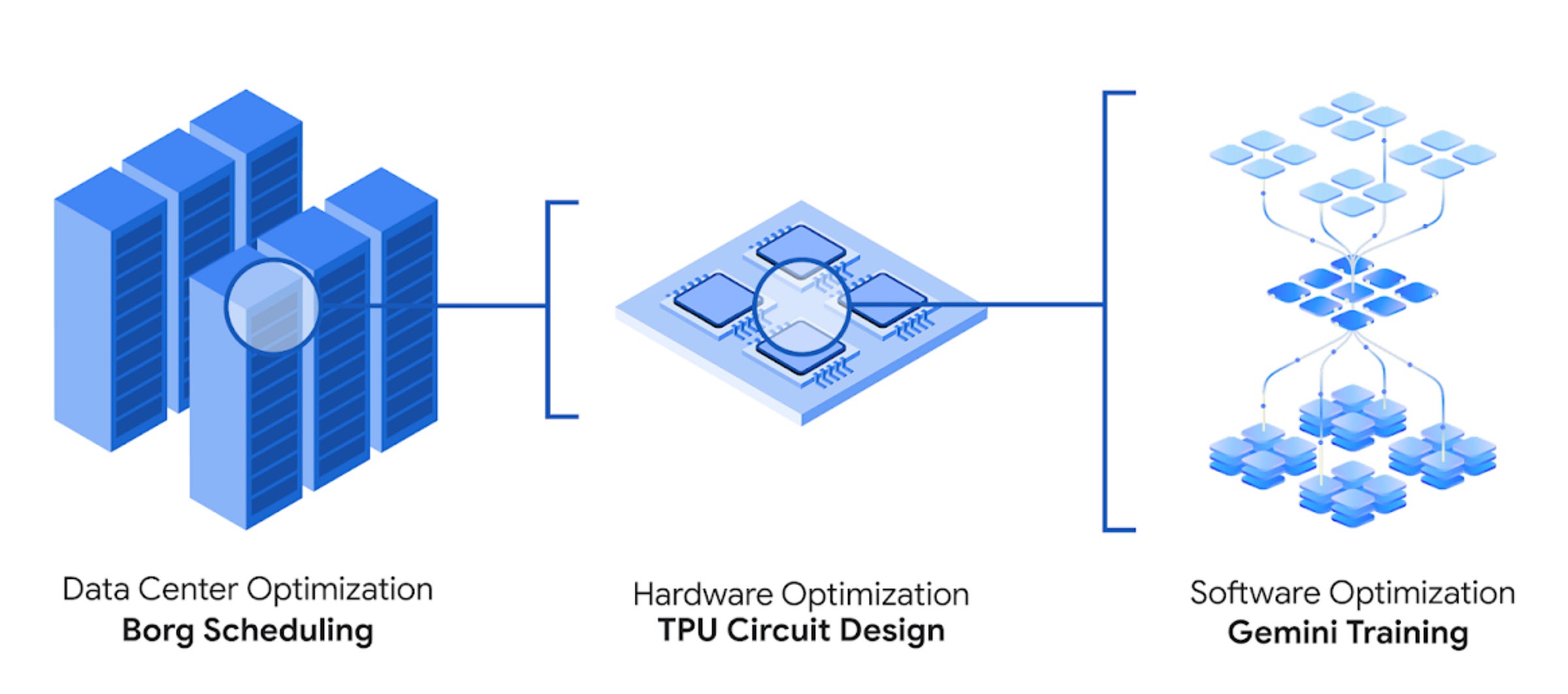

What used to take months of kernel tuning or algorithmic search now takes days. At Google, AlphaEvolve optimized the matrix multiplication kernels used in Gemini training, which reduced overall training time by 1%.

And these aren’t prototype gains. They’re production-grade.

When the right evaluation metric is in place, AlphaEvolve can generate, test, and evolve solutions faster than any human-led loop.

Second, the efficiency gains are hard to ignore.

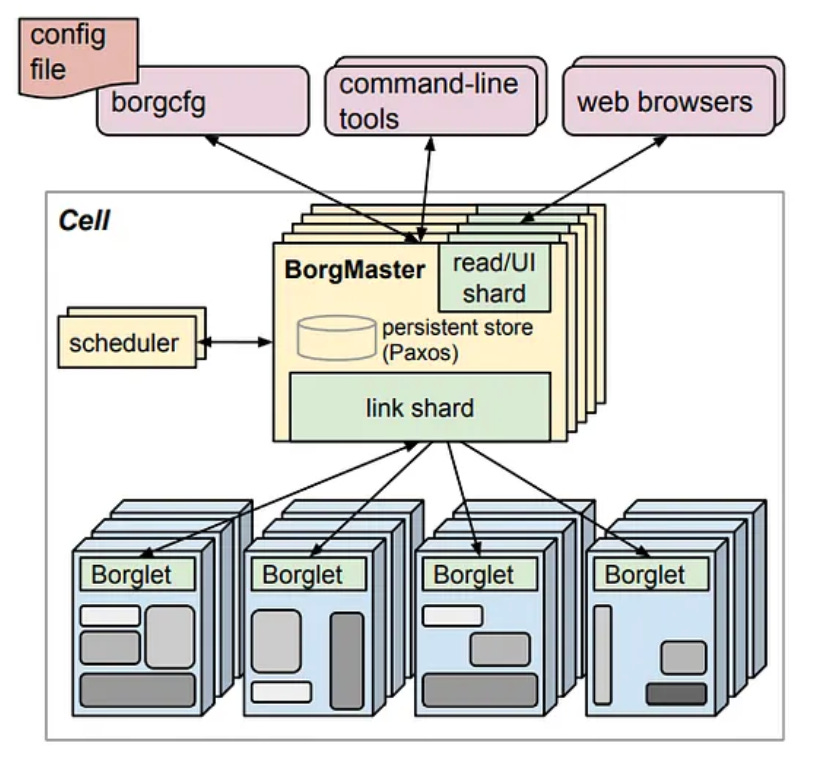

AlphaEvolve reclaimed 0.7% of compute in Google’s data centers by writing a new scheduling heuristic, code that is now deployed across Borg, Google’s cluster management system for large-scale deployments.

It also helped reduce TPU power and area by identifying a single Verilog-level rewrite. Verilog is the programming language hardware engineers use to design chips. It defines how the circuits inside something like a TPU actually behave.

Changing code at the Verilog level means modifying the chip’s logic itself, not just the software that runs on it. In this case, AlphaEvolve found a small change that removed unnecessary bits from the Verilog description of a core arithmetic circuit. That tweak—validated by Google’s hardware team—led to real gains in both area and power efficiency.
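To give a feel for what “removing unnecessary bits” can mean, here is a small Python model. It is not the change AlphaEvolve made, and it is not Verilog. The bit widths and both functions are assumptions invented for illustration. It only shows the general idea: if downstream logic consumes just the low bits of a product, the high operand bits cannot affect the result, and an automatic equivalence check can confirm that.

```python
WIDE_BITS = 9   # operand width in the hypothetical original description
OUT_BITS = 6    # only these low bits of the product are consumed downstream


def multiply_wide(a, b):
    """Multiply full-width operands, then keep the low OUT_BITS bits."""
    return (a * b) & ((1 << OUT_BITS) - 1)


def multiply_trimmed(a, b):
    """Drop the operand bits that cannot affect the kept output bits."""
    mask = (1 << OUT_BITS) - 1
    return ((a & mask) * (b & mask)) & mask


def equivalent():
    """Exhaustively compare both versions over every operand pair."""
    limit = 1 << WIDE_BITS
    return all(
        multiply_wide(a, b) == multiply_trimmed(a, b)
        for a in range(limit)
        for b in range(limit)
    )


print(equivalent())
```

The exhaustive check plays the role of the evaluator: a candidate rewrite only counts if it is provably equivalent on every input.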

These gains compound.

A 1% improvement in compute or power at Google scale means millions in real savings. For smaller teams, it means headroom: more experimentation, more models, fewer constraints. The key isn’t just the improvement. It’s that AlphaEvolve made the hardware change at the register-transfer level (RTL), where changes are expensive and traditionally take months. Now they happen in days, and they ship.

Third, it writes code you can trust.

Not opaque neural policies, but readable, testable programs. You get clear diffs, traceable changes, and language-native output in C++, Python, or Verilog. This makes review, debugging, and integration straightforward. It’s AI you can reason about, and ship with confidence.
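Here is a minimal sketch of what a reviewable diff looks like, using Python’s standard `difflib` module. The two versions of `pick_machine` are hypothetical and invented for illustration. They are not AlphaEvolve output.

```python
import difflib

# Hypothetical parent program (the version before an evolutionary step).
before = """def pick_machine(job, machines):
    return machines[0]
"""

# Hypothetical child program (the version proposed by the search).
after = """def pick_machine(job, machines):
    fits = [m for m in machines if m.free >= job.size]
    return min(fits, key=lambda m: m.free - job.size)
"""

diff = list(difflib.unified_diff(
    before.splitlines(),
    after.splitlines(),
    fromfile="parent.py",
    tofile="child.py",
    lineterm="",
))
print("\n".join(diff))
```

A reviewer sees exactly which lines changed, which is the kind of artifact an opaque policy cannot offer.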

How I Might Use Evolutionary Algorithms to Build Products

This section is entirely my own high-level opinion on how I would use AlphaEvolve to build products.

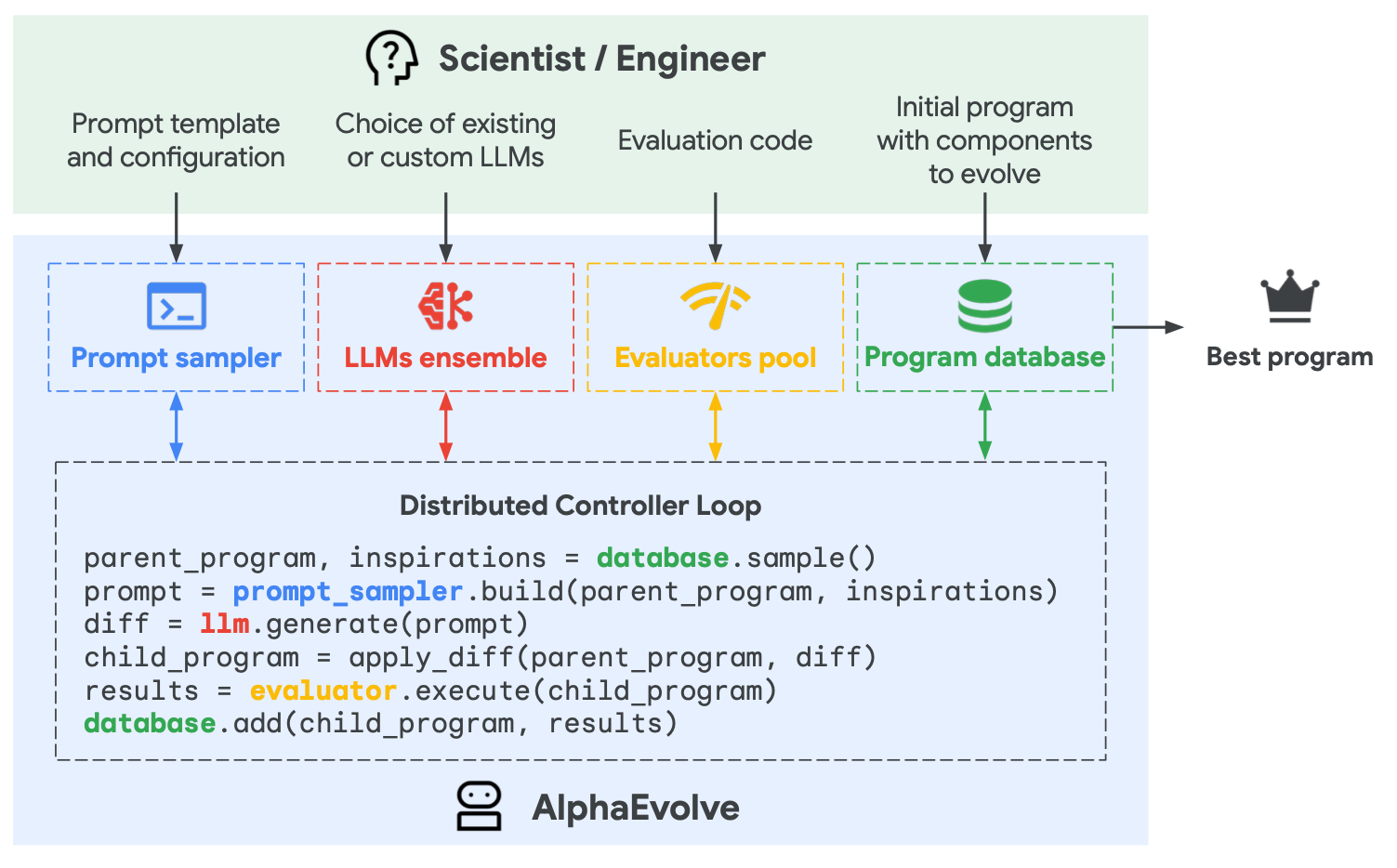

When I build products, I don’t start with a model. I start with the user. What do they need? What does success look like?

From that, I define the goal in measurable terms—a scalar function the machine can optimize.

For AlphaEvolve, this means writing a Python function that scores a solution. The higher the score, the better the output. This becomes the evaluation criterion. It could be something like recovered compute, execution time, or energy efficiency.
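As a rough sketch of such a scoring function, assume a toy problem of packing jobs onto machines of fixed capacity. The problem, `score_packing`, and the `first_fit` baseline are all my own assumptions for illustration. The point is only the shape: take a candidate’s output, reject anything invalid, and return a single number where higher is better.

```python
import math


def score_packing(bins, jobs, capacity):
    """Return a scalar score for a proposed packing; higher is better.

    Invalid packings score -inf so the search never prefers them.
    """
    # Every job must be placed exactly once.
    placed = sorted(job for b in bins for job in b)
    if placed != sorted(jobs):
        return -math.inf
    # No machine may exceed its capacity.
    if any(sum(b) > capacity for b in bins):
        return -math.inf
    # Fewer machines used means more compute left free.
    used = sum(1 for b in bins if b)
    return -float(used)


def first_fit(jobs, capacity):
    """A baseline candidate: put each job on the first machine with room."""
    bins = []
    for job in jobs:
        for b in bins:
            if sum(b) + job <= capacity:
                b.append(job)
                break
        else:
            bins.append([job])
    return bins


jobs = [4, 8, 1, 4, 2, 1]
capacity = 10
baseline = score_packing(first_fit(jobs, capacity), jobs, capacity)
one_per_machine = score_packing([[j] for j in jobs], jobs, capacity)
print(baseline, one_per_machine)
```

The correctness checks matter as much as the score itself: without them, the search will happily find a “great” solution that quietly drops a job.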

Then I craft the prompt.

I’m not vague.

I include the starting code, relevant context, instructions, and even previously attempted solutions. If I want it to try near-integral solutions, I’ll say that.

The more specific I am, the better the model understands what I’m after.
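A prompt with those ingredients could be assembled along these lines. The section headings, the `build_prompt` helper, and the example values are assumptions of mine, not a documented AlphaEvolve format.

```python
def build_prompt(starting_code, context, instructions, previous_attempts):
    """Assemble a prompt from the four ingredients (layout is an assumption).

    previous_attempts is a list of (summary, score) pairs.
    """
    sections = [
        "## Context\n" + context.strip(),
        "## Instructions\n" + instructions.strip(),
    ]
    if previous_attempts:
        # Show the best-scoring attempts first so the model sees what worked.
        ranked = sorted(previous_attempts, key=lambda a: a[1], reverse=True)
        lines = [f"- score {score:.2f}: {summary}" for summary, score in ranked]
        sections.append("## Previous attempts (best first)\n" + "\n".join(lines))
    sections.append("## Current program\n" + starting_code.strip())
    return "\n\n".join(sections)


prompt = build_prompt(
    starting_code="def pack(jobs, capacity):\n    return [[j] for j in jobs]",
    context="Jobs must be packed onto machines of fixed capacity.",
    instructions="Minimize the number of machines. Try near-integral solutions.",
    previous_attempts=[("one job per machine", 0.50), ("first fit", 0.80)],
)
print(prompt)
```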

AlphaEvolve doesn’t just generate; it evolves. It tries, tests, scores, and iterates.

Sometimes, the changes are minor tweaks. Other times, it proposes something I wouldn’t have considered—a complete rewrite, a strange heuristic, or a surprising structure.

That’s the point.

The evolutionary algorithm does the searching—but the LLM tells it where to search. It proposes directions, not just solutions. And that’s what makes this powerful. It’s not just optimizing—it’s discovering.

AlphaEvolve can move into entirely new regions of the search space. It runs a simple search on top of a language model’s vast knowledge and, in doing so, finds patterns we didn’t know existed.

That’s how it goes beyond the data—by generating novel structures that don’t just match past solutions, but invent new ones.

It finds solutions outside the range of my intuition.

Sometimes it will not be perfect.

But it’s fast, interpretable, and scalable. I still set the direction. AlphaEvolve just moves faster through the search space—finding better answers than I would, and doing it at scale.

Staying Ahead of the Curve

AlphaEvolve isn’t a magic wand.

The technology is powerful, but it isn’t universal. Its biggest constraint? It needs a way to score solutions automatically.

If your problem doesn’t have a clear, machine-gradeable evaluation function, AlphaEvolve can’t help—yet.

Demis Hassabis has stressed that an objective function is essential to guide the search. The search space is too vast to explore randomly, he argues, so there must be “some objective function that you’re trying to optimize and hill climb towards and that guides that search.”

This rules out tasks that rely on manual experiments, subjective judgment, or undefined success criteria.

AlphaEvolve works best in math-heavy, code-centric domains like scheduling, kernel optimization, and hardware design.
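The point quoted earlier about random exploration versus hill climbing can be seen even on a toy problem. In this sketch, the objective (count the ones in a 40-bit string), the evaluation budget, and both search functions are assumptions chosen for illustration. Both searches get the same number of evaluations. Only one of them uses the score to decide where to look next.

```python
import random

N_BITS = 40    # size of the toy search space: 2**40 candidates
BUDGET = 2000  # evaluations allowed per search


def objective(bits):
    """Toy objective: count of ones; the optimum is all ones."""
    return sum(bits)


def random_search(rng):
    """Sample candidates blindly and remember the best score seen."""
    best = 0
    for _ in range(BUDGET):
        candidate = [rng.randint(0, 1) for _ in range(N_BITS)]
        best = max(best, objective(candidate))
    return best


def hill_climb(rng):
    """Flip one bit at a time and keep the change only if the score holds or improves."""
    current = [rng.randint(0, 1) for _ in range(N_BITS)]
    score = objective(current)
    for _ in range(BUDGET - 1):
        i = rng.randrange(N_BITS)
        current[i] ^= 1
        new_score = objective(current)
        if new_score >= score:
            score = new_score
        else:
            current[i] ^= 1  # undo the flip
    return score


random_best = random_search(random.Random(0))
guided_best = hill_climb(random.Random(0))
print(random_best, guided_best)
```

Without a score to climb, the search has nothing to steer by, which is why problems lacking an automatic evaluator are out of reach for now.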

Even within those domains, scale can be a bottleneck. When applied to very large problems—like matrix sizes beyond ⟨5,5,5⟩—AlphaEvolve can run into memory limits during evaluation. And while it outputs executable code, there’s still a digital-to-real-world gap. Many improvements need hardware validation or real-world benchmarking to prove they hold up.

Despite those limits, the bigger picture is compelling. AlphaEvolve is an early glimpse into AI systems that improve not just products—but the AI that powers them. It has already helped Gemini train faster by optimizing its own compute stack. In time, these improvements could be distilled into future LLMs, closing the feedback loop.

This is where things get competitive. AI optimizing AI means compound acceleration. Teams that plug into this loop will move faster, cheaper, and smarter. The ones that don’t? They’ll feel it soon enough.

Lastly, I believe that a firm understanding of what you want to build or accomplish is an important skill and a superpower. Without that basic understanding, a system like this will not be useful to you. It will just be another sentence-spitting machine.

Inspired By

DeepMind blog post on AlphaEvolve (May 14, 2025) for a non-technical summary.

AlphaEvolve: A coding agent for scientific and algorithmic discovery

DeepMind's Pushmeet Kohli on AI's Scientific Revolution