Building Better Products With Tool Calling

Understanding what tool calling is and how it works.

Modern LLMs reason well and write fluent text, yet they still get facts wrong, especially recent ones. A model is trained on a corpus with a fixed knowledge cutoff, so anything that happened after that cutoff is simply absent. To answer questions about current events, the model needs an external source of up-to-date information.

Products built on top of LLMs need more than fresh information. Depending on the product's purpose, they may need tool calling so the model can query APIs, browse the web, or run code, and then return structured results that your backend can trust.

OpenAI’s Structured Outputs feature makes model responses conform to a JSON Schema you supply, which reduces brittle parsing and improves reliability in production.

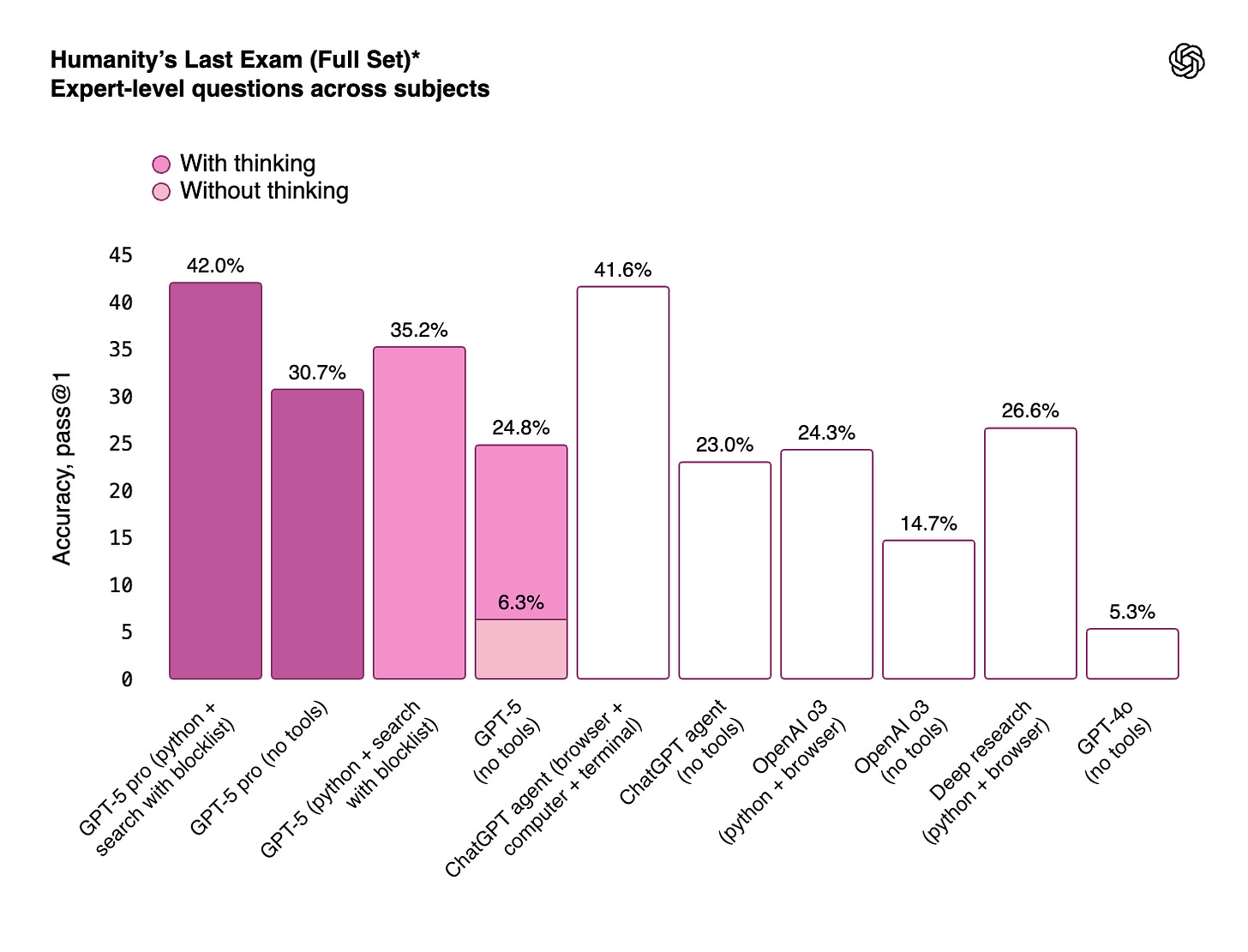

OpenAI’s Deep Research shows the full loop in action. The agent plans multi-step web searches, calls a browser tool to gather sources, uses Python for analysis, and cites its sources as it synthesizes an answer. OpenAI reports that Deep Research works for several minutes per task, pivots autonomously as new information comes in, and markedly outperforms chat-only models on browsing benchmarks by combining reasoning with tool use. The result is fewer hallucinations and answers tailored to the user’s task.

For a product leader, knowing how to design tool calls alongside your prompts is extremely important. But what exactly is tool calling, and how does it work?

In this blog post, we will explore the basics of tool calling in LLMs.

What Is Tool Calling in LLMs?

Tool calling means an LLM can act through your software instead of only replying with text.

You declare each tool as a function with a name, a description, and JSON-typed parameters. The LLM selects a tool and proposes arguments, and your service (the backend code that actually runs the tool) executes the call. You then pass the result back, and the model uses it to finish the answer.

With Structured Outputs, the response must follow your JSON Schema, which makes results dependable for production systems.

It helps to think about tool calling as four layers.

Capability layer (tool calling). The core loop is simple: the model chooses a tool and suggests arguments, you run it, and then you return the results for the model to use. This turns free-form chat into actions your product can control and log.

Interface layer (function calling). This is the concrete API that exposes tool calling. You register functions with typed parameters, the model returns a function name and arguments, and your app executes the function and returns the data. Google’s Gemini documentation describes the same step-by-step flow, which shows that vendors have converged on this pattern.
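To make the interface layer concrete, here is a minimal, vendor-neutral sketch in Python. A registry maps each tool name to the schema you would send to the model and the function that implements it, and a dispatcher runs whatever name and JSON arguments the model returns. The register and dispatch helpers and the canned order data are hypothetical, not part of any vendor SDK.

```python
import json
from typing import Any, Callable

# Registry: tool name -> (JSON schema shown to the model, Python implementation).
TOOLS: dict[str, tuple[dict, Callable[..., Any]]] = {}


def register(schema: dict) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that records a function under the name declared in its schema."""
    def wrap(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[schema["name"]] = (schema, fn)
        return fn
    return wrap


@register({
    "name": "lookup_order_status",
    "description": "Return the current status for a customer order",
    "parameters": {
        "type": "object",
        "properties": {
            "order_id": {"type": "string"},
            "channel": {"type": "string", "enum": ["web", "app", "support"]},
        },
        "required": ["order_id"],
    },
})
def lookup_order_status(order_id: str, channel: str = "web") -> dict:
    # Hypothetical stand-in for a real order-service call.
    return {"order_id": order_id, "status": "shipped", "channel": channel}


def dispatch(name: str, arguments_json: str) -> str:
    """Run the tool the model chose; the arguments arrive as a JSON string."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    _, fn = TOOLS[name]
    result = fn(**json.loads(arguments_json))
    return json.dumps(result)  # serialized result is what goes back to the model


print(dispatch("lookup_order_status", '{"order_id": "AB12-9XYZ"}'))
```

Keeping the schema and the implementation side by side means the declaration the model sees cannot silently drift away from the code that actually runs.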

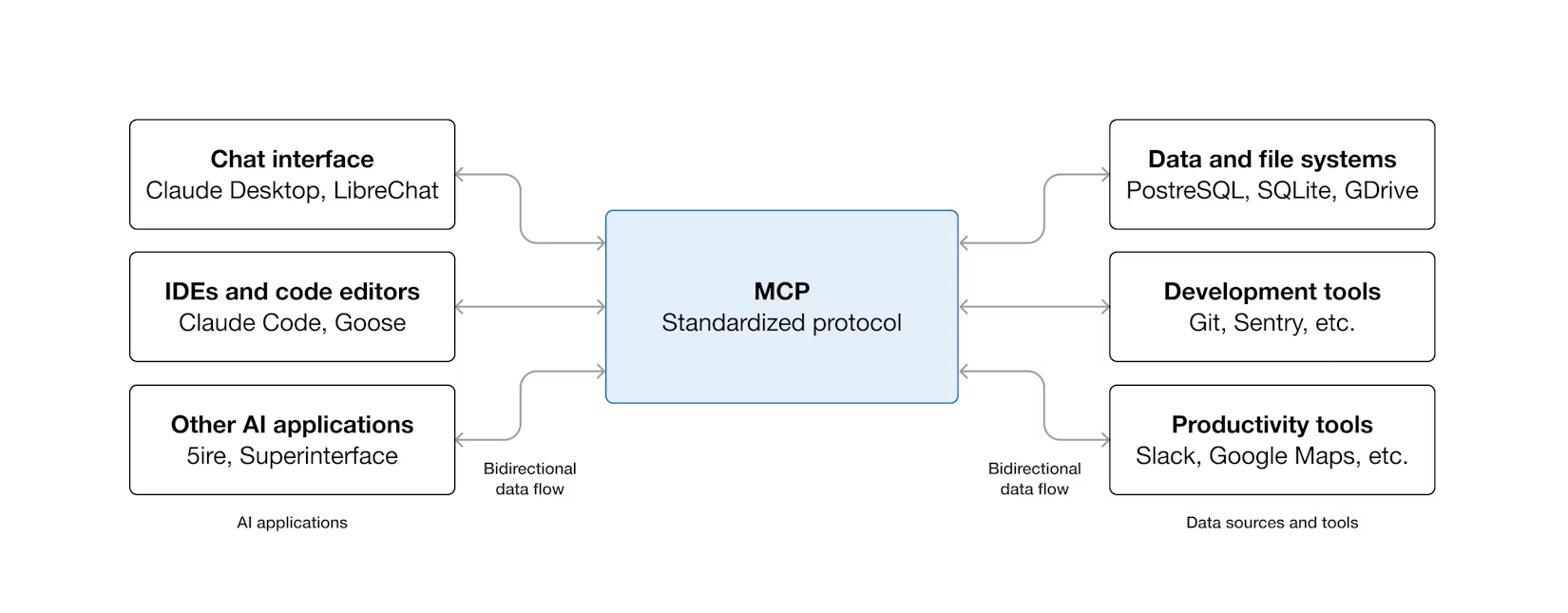

Connector layer (Model Context Protocol, MCP). MCP standardizes how assistants reach files, databases, and SaaS tools through uniform servers and clients, which reduces custom integration work and improves auditability.
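MCP messages are JSON-RPC 2.0. As a rough sketch, a client that wants an MCP server to run a tool sends a tools/call request carrying the tool name and arguments, then reads back a result whose content is a list of typed parts. The helper functions, the search_code tool name, and the sample reply below are hypothetical; a real client would normally use an MCP SDK and a transport such as stdio or HTTP rather than building messages by hand.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids must be unique within a session


def tools_call_request(name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def text_from_result(response: str) -> str:
    """Join the text parts of a tools/call result; raise if the call failed."""
    message = json.loads(response)
    if "error" in message:  # protocol-level JSON-RPC error
        raise RuntimeError(message["error"].get("message", "unknown error"))
    result = message["result"]
    parts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(" ".join(parts))
    return "\n".join(parts)


# Hypothetical tool name and server reply, for illustration only.
request = tools_call_request("search_code", {"query": "lookup_order_status"})
reply = ('{"jsonrpc": "2.0", "id": 1, "result": {"content": '
         '[{"type": "text", "text": "src/orders.py:42"}], "isError": false}}')
print(request)
print(text_from_result(reply))
```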

Orchestration layer (Agent SDKs). SDKs coordinate multi-step work on top of function or tool calls. OpenAI’s Agents SDK, which builds on the Responses API, adds routing, tracing, and policies.

Together, these layers give you controllable actions, typed payloads, and answers with fewer hallucinations that fit real product workflows.

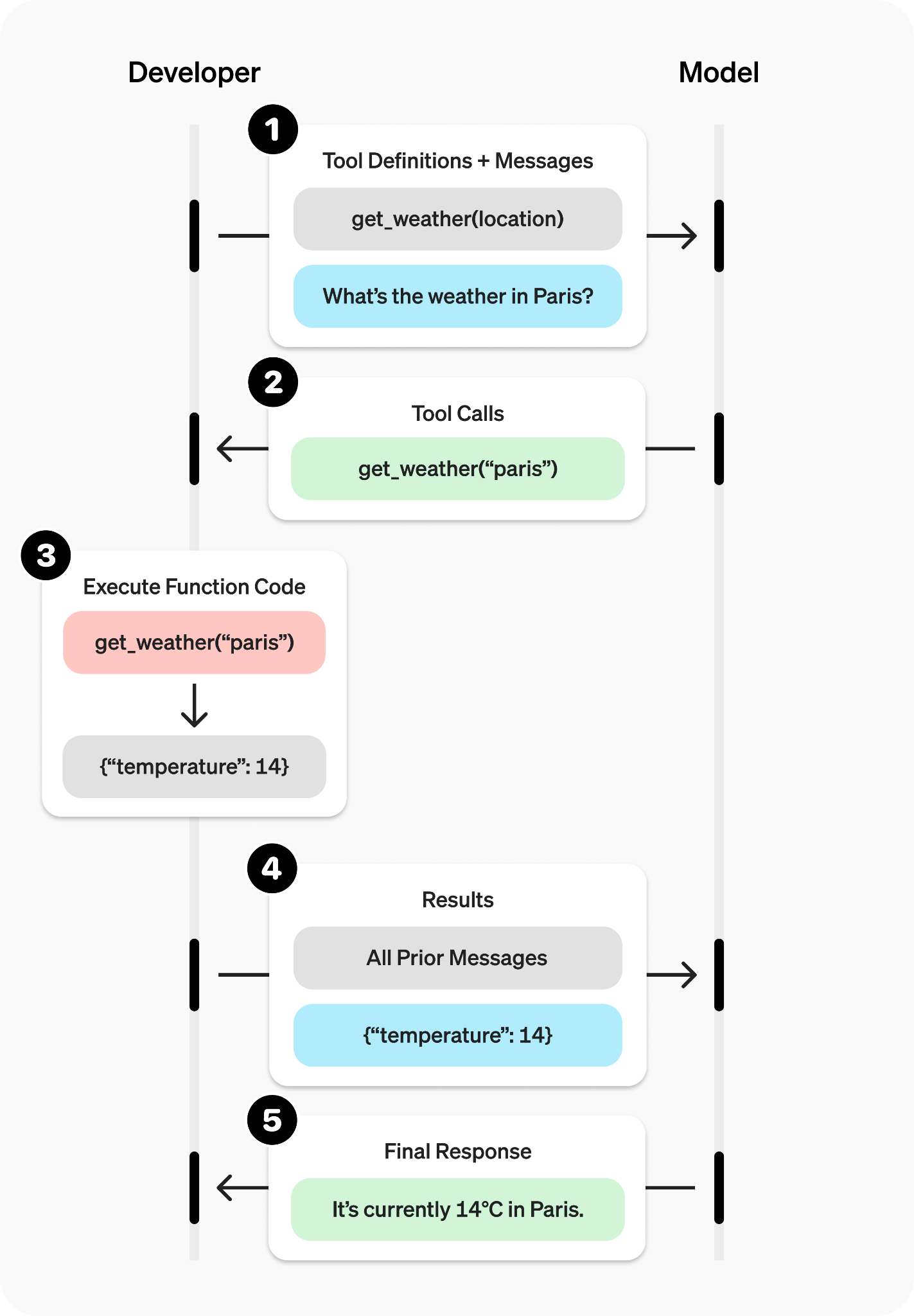

How Tool Calling Works Step by Step

Let’s start by defining the structure of a tool.

Essentially, you define each tool with a name, description, and JSON parameter schema. Keep parameters typed with constraints like enums, patterns, and required fields. Tight schemas prevent ambiguous arguments and reduce downstream parsing errors.

OpenAI’s Structured Outputs feature makes the model’s output conform to your JSON Schema before your code runs.

```json
{
  "name": "lookup_order_status",
  "description": "Return the current status for a customer order",
  "parameters": {
    "type": "object",
    "properties": {
      "order_id": { "type": "string", "pattern": "^[A-Z0-9-]{8,}$" },
      "channel": { "type": "string", "enum": ["web", "app", "support"] }
    },
    "required": ["order_id"]
  }
}
```

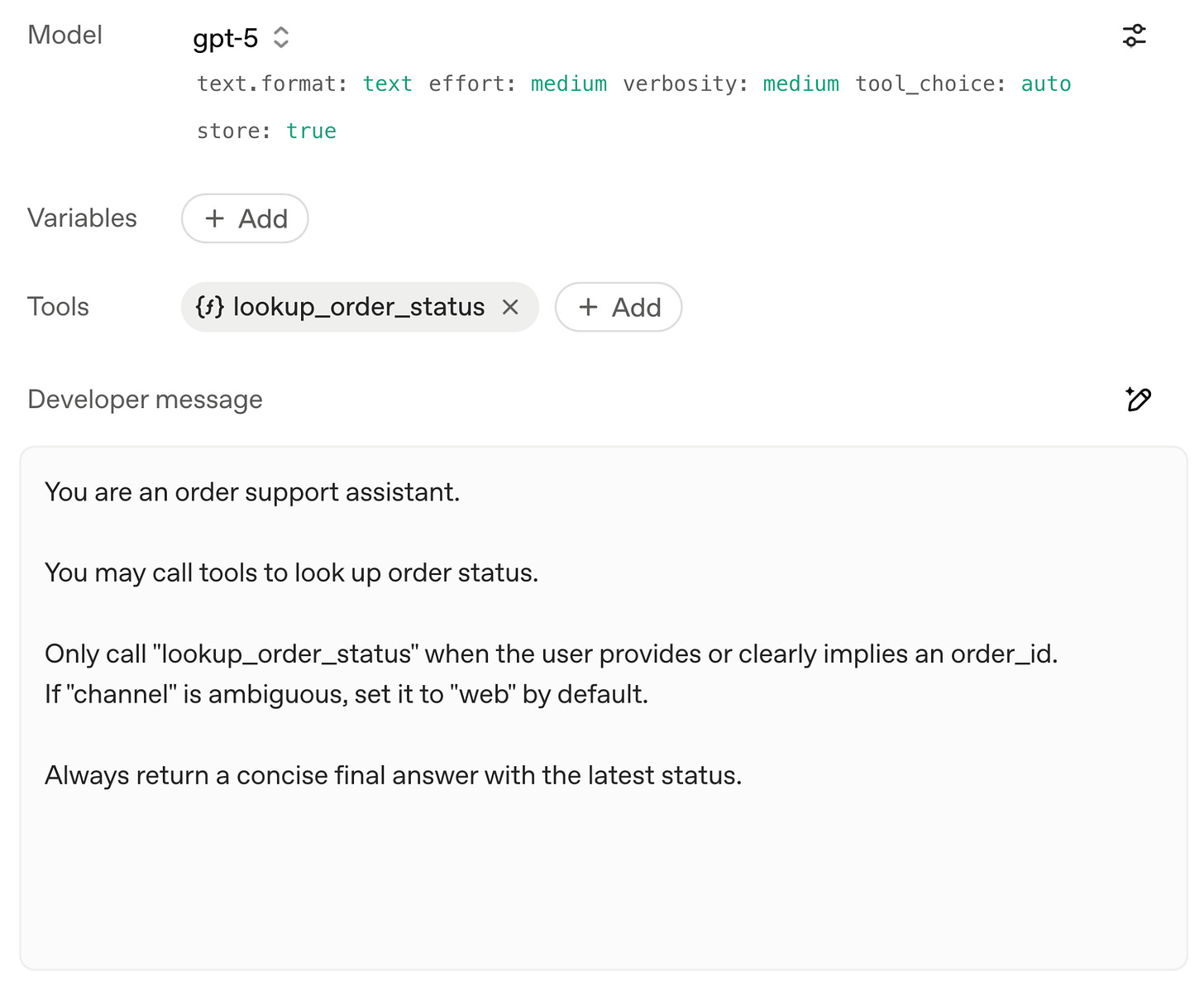

To get hands-on experience, try this example in the OpenAI Playground’s chat interface.

In the Playground, select a GPT-5 model, click Add tool, and paste the tool schema above.

Then use the prompt below as the developer message:

“You are an order support assistant. You may call tools to look up order status. Only call lookup_order_status when the user provides or clearly implies an order_id. If 'channel' is ambiguous, set it to 'web' by default. Always return a concise final answer with the latest status.”

Follow it with this user message:

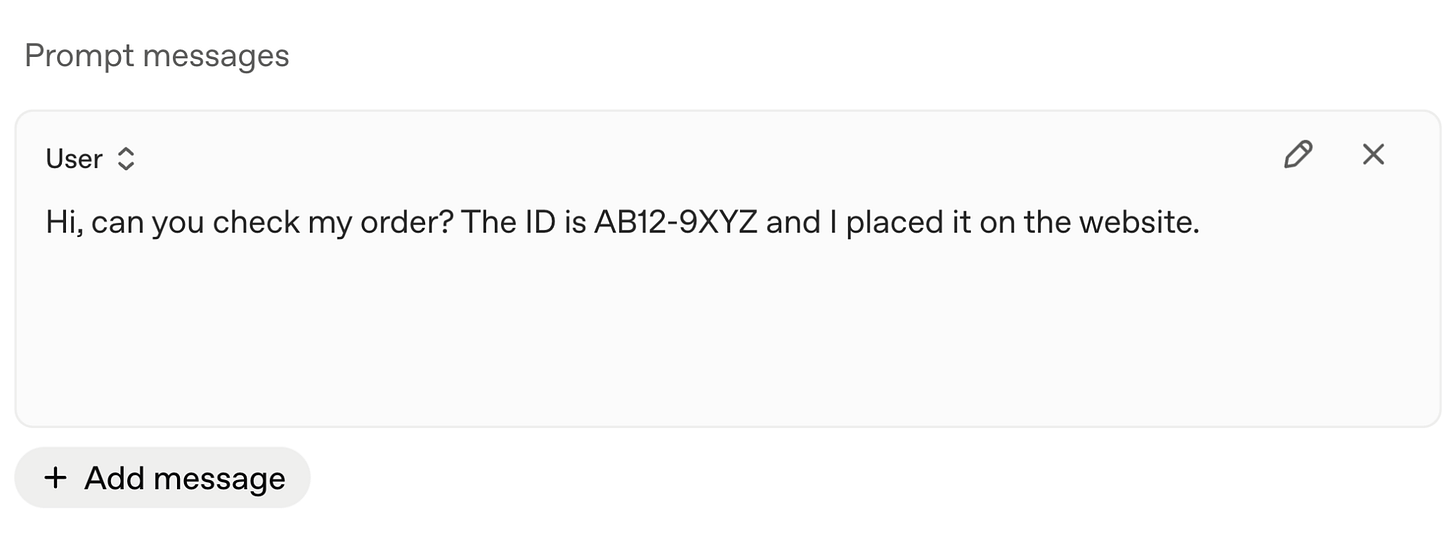

Hi, can you check my order? The ID is AB12-9XYZ and I placed it on the website.

How does the model read it?

You pass the tool declarations alongside the user message in the API request. The model sees the available tool names (in this case, lookup_order_status), their descriptions, and their parameter types, and it uses these hints to decide whether a tool call is appropriate for the current turn.
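The sketch below builds, but does not send, a request body that carries the developer message, the user message, and the tool declaration together. The layout follows OpenAI’s Chat Completions tools format as we understand it (each function wrapped in a {"type": "function", "function": {...}} object); the model name is a placeholder, and the Responses API uses a flatter tool shape, so check the current API reference before relying on these field names.

```python
import json

ORDER_TOOL = {
    "name": "lookup_order_status",
    "description": "Return the current status for a customer order",
    "parameters": {
        "type": "object",
        "properties": {
            "order_id": {"type": "string", "pattern": "^[A-Z0-9-]{8,}$"},
            "channel": {"type": "string", "enum": ["web", "app", "support"]},
        },
        "required": ["order_id"],
    },
}

DEVELOPER_PROMPT = (
    "You are an order support assistant. You may call tools to look up order status. "
    "Only call lookup_order_status when the user provides or clearly implies an order_id. "
    "If 'channel' is ambiguous, set it to 'web' by default. "
    "Always return a concise final answer with the latest status."
)


def build_request(user_text: str, model: str = "gpt-5") -> dict:
    """Assemble one request: instructions, the user turn, and the callable tools."""
    return {
        "model": model,  # placeholder model name
        "messages": [
            {"role": "developer", "content": DEVELOPER_PROMPT},
            {"role": "user", "content": user_text},
        ],
        "tools": [{"type": "function", "function": ORDER_TOOL}],
        "tool_choice": "auto",  # let the model decide whether to call a tool
    }


body = build_request("Hi, can you check my order? The ID is AB12-9XYZ "
                     "and I placed it on the website.")
print(json.dumps(body, indent=2))
```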

OpenAI’s function calling guide describes how tools are registered and exposed to the model in this way.

How does the model decide a call?

During generation, the model emits a tool_call containing the chosen tool name and JSON arguments.
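In the Chat Completions format, a tool call arrives on the assistant message as an entry in tool_calls, and its function.arguments field is a JSON-encoded string rather than an object. A minimal parsing sketch, using a hand-written sample message (the id value and the ToolCall class are made up for illustration):

```python
import json
from dataclasses import dataclass


@dataclass
class ToolCall:
    call_id: str     # echoed back later so the result can be matched to this call
    name: str
    arguments: dict


def parse_tool_calls(message: dict) -> list[ToolCall]:
    """Extract tool calls from an assistant message; no calls means a plain answer."""
    calls = []
    for raw in message.get("tool_calls") or []:
        fn = raw["function"]
        # arguments is a JSON string, so it must be decoded before use
        calls.append(ToolCall(raw["id"], fn["name"], json.loads(fn["arguments"])))
    return calls


sample = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_123",  # made-up id
        "type": "function",
        "function": {
            "name": "lookup_order_status",
            "arguments": "{\"order_id\": \"AB12-9XYZ\", \"channel\": \"web\"}",
        },
    }],
}
for call in parse_tool_calls(sample):
    print(call.name, call.arguments)
```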

Validate the arguments against your schema to catch missing fields and illegal values, and reject invalid payloads before any execution path runs. This keeps parsing typed and control flow safe.
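A minimal validation sketch that covers only the keywords the lookup_order_status schema uses (required, string type, enum, pattern). It is a hand-rolled assumption for illustration, and it also rejects unknown fields as if the schema set additionalProperties to false; in production, a complete JSON Schema validator such as the third-party jsonschema package for Python is the safer choice.

```python
import re

SCHEMA = {
    "type": "object",
    "properties": {
        "order_id": {"type": "string", "pattern": "^[A-Z0-9-]{8,}$"},
        "channel": {"type": "string", "enum": ["web", "app", "support"]},
    },
    "required": ["order_id"],
}


def validate_args(args: dict, schema: dict) -> list[str]:
    """Check args against a small JSON Schema subset; an empty list means valid."""
    errors = []
    props = schema.get("properties", {})
    for field in schema.get("required", []):
        if field not in args:
            errors.append(f"missing required field: {field}")
    for field, value in args.items():
        rules = props.get(field)
        if rules is None:  # stricter than JSON Schema's default: unknown fields fail
            errors.append(f"unexpected field: {field}")
            continue
        if rules.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{field} must be a string")
            continue
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{field} must be one of {rules['enum']}")
        if "pattern" in rules and not re.search(rules["pattern"], value):
            errors.append(f"{field} does not match {rules['pattern']}")
    return errors


print(validate_args({"order_id": "AB12-9XYZ", "channel": "web"}, SCHEMA))  # → []
print(validate_args({"channel": "fax"}, SCHEMA))  # missing order_id, bad channel
```

Only when the returned list is empty does the call proceed to execution; otherwise the errors can be sent back to the model so it can correct its arguments.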

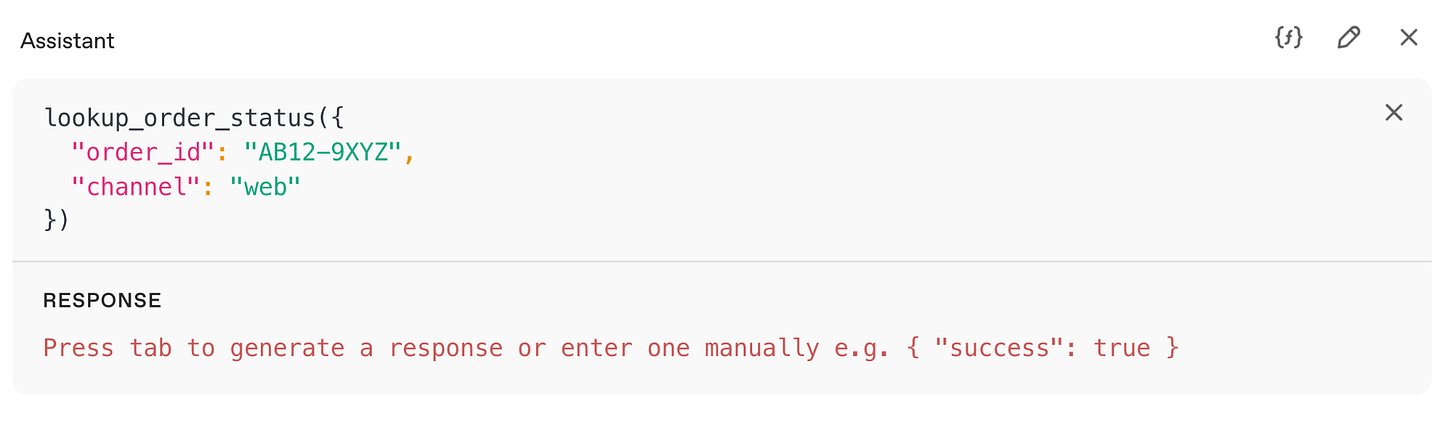

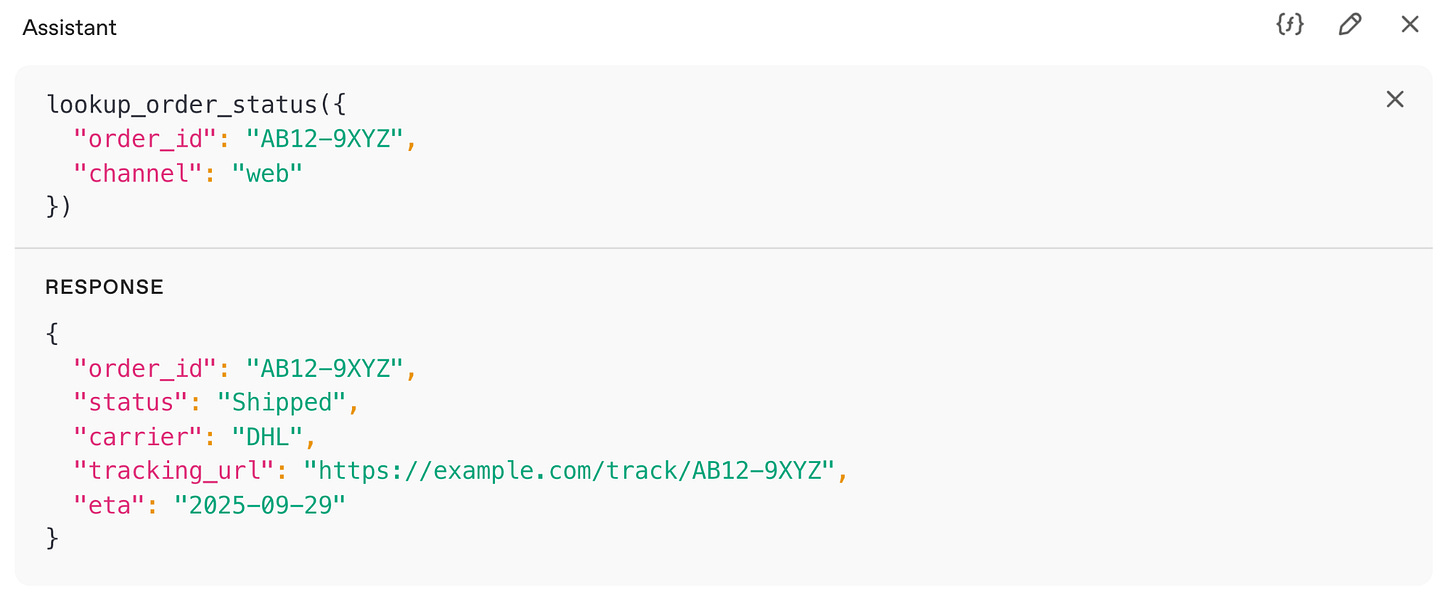

Execution and synthesis.

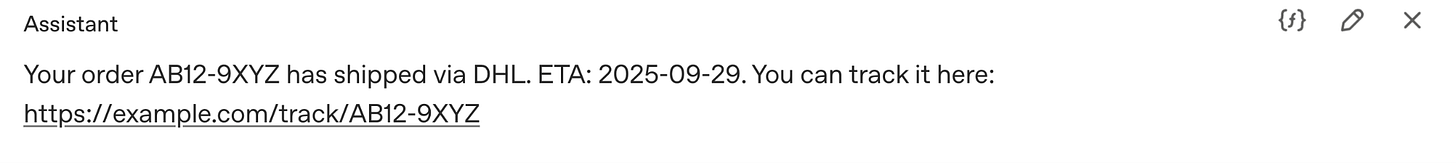

Your server executes the function and returns a structured result back into the conversation state.

The model then synthesizes a user-facing answer (like the one below) or chooses another tool for a follow-up step.

OpenAI’s Responses API formalizes this request → tool → response loop and provides state and tracing hooks.
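To make the loop concrete without network calls, the sketch below replaces the model with a scripted fake_model function (hypothetical) that first requests lookup_order_status and then, once it sees a tool result, writes the final answer. Message shapes loosely follow the Chat Completions convention of a "tool" role message carrying a tool_call_id; the order data is invented.

```python
import json


def lookup_order_status(order_id: str, channel: str = "web") -> dict:
    # Hypothetical backend call that returns canned data for the sketch.
    return {"order_id": order_id, "status": "shipped", "eta": "2025-09-29"}


TOOLS = {"lookup_order_status": lookup_order_status}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: call the tool once, then answer from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        return {"role": "assistant",
                "content": f"Order {data['order_id']} is {data['status']}, ETA {data['eta']}."}
    return {"role": "assistant", "content": None, "tool_calls": [{
        "id": "call_1", "type": "function",
        "function": {"name": "lookup_order_status",
                     "arguments": json.dumps({"order_id": "AB12-9XYZ", "channel": "web"})}}]}


def run(user_text: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):  # cap iterations so a confused model cannot loop forever
        reply = fake_model(messages)
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply["content"]  # no tool requested: this is the final answer
        for call in reply["tool_calls"]:
            fn = TOOLS[call["function"]["name"]]
            result = fn(**json.loads(call["function"]["arguments"]))
            # Feed the structured result back, linked to the call that requested it.
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    raise RuntimeError("tool loop did not finish")


print(run("Hi, can you check my order? The ID is AB12-9XYZ."))
```

Swapping fake_model for a real API call leaves the loop unchanged, which is why the request, tool, response cycle is easy to test in isolation.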

To gain a visual understanding of tool calling, refer to this illustration.

Use Cases of Tool Calling for Real Products

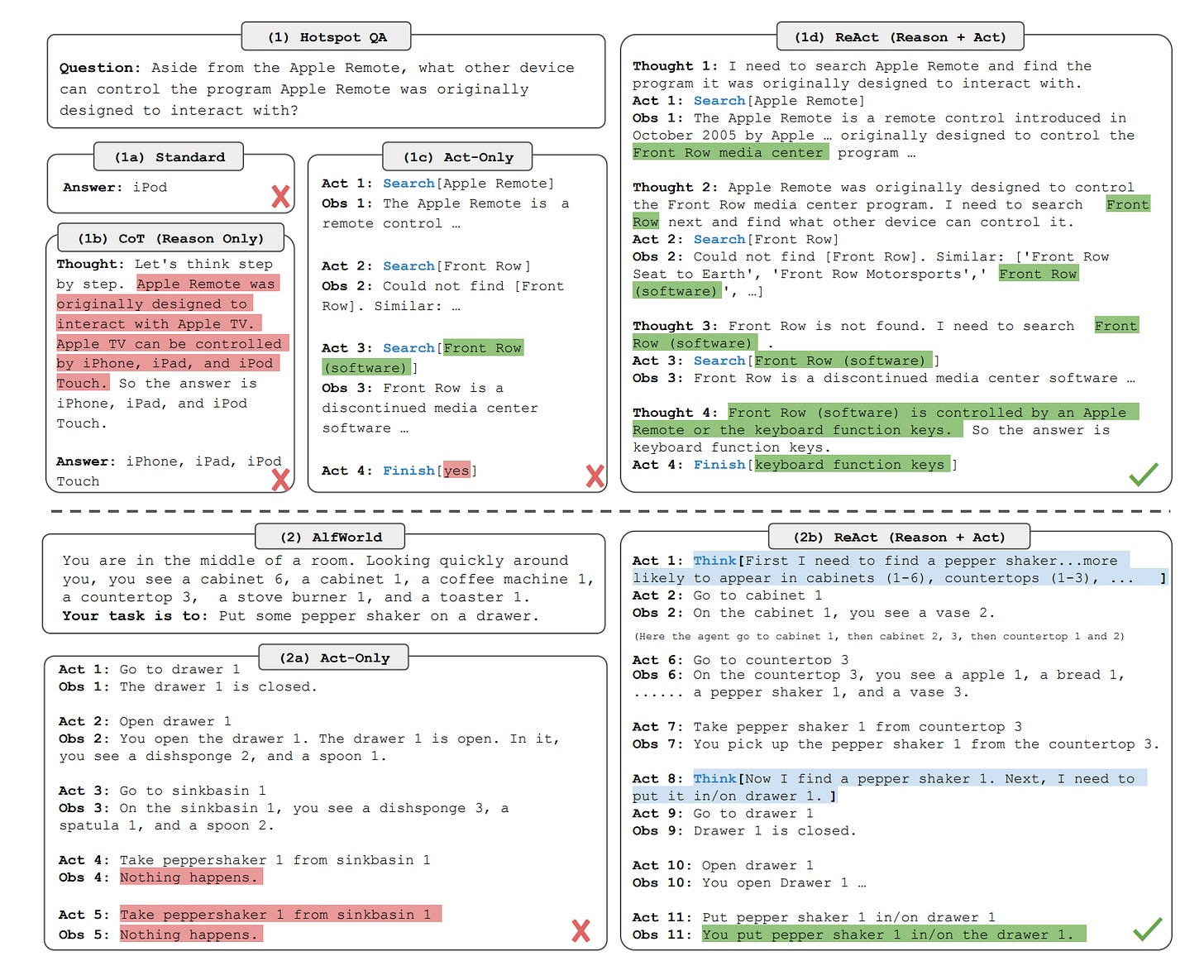

Tool calling delivers quick wins where answers must be factual, auditable, and timely. It shines in search, retrieval, and operations because the model can fetch evidence, act on APIs, and return structured outputs your app trusts. ReAct-style loops and strict schemas further cut hallucinations in practice.

Search and Retrieval

Use a ReAct pattern for questions that need evidence. The model calls web or internal search, inspects snippets, and cites sources before answering. The ReAct paper reports gains over baseline methods on HotpotQA, FEVER, ALFWorld, and WebShop with this interleaved “think, act, observe” loop, and the explicit trace of steps makes answers easier to audit.
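The think, act, observe loop can be sketched as follows. In a real system, scripted_policy would be an LLM prompted to emit a thought and an action; here it is a hypothetical hard-coded stand-in so the control flow is runnable, and the two-document corpus and keyword search are invented for illustration.

```python
from typing import Callable

# Tiny in-memory corpus standing in for web or internal search (illustrative data).
DOCS = {
    "doc1": "ReAct was evaluated on HotpotQA, FEVER, ALFWorld, and WebShop.",
    "doc2": "MCP standardizes how assistants reach files and SaaS tools.",
}


def search(query: str) -> list[tuple[str, str]]:
    """Naive keyword search: return (doc_id, text) for docs sharing any query word."""
    words = query.lower().split()
    return [(doc_id, text) for doc_id, text in DOCS.items()
            if any(w in text.lower() for w in words)]


TOOLS = {"search": search}


def scripted_policy(question: str, trace: list[dict]) -> tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, argument)."""
    if not trace:
        return ("I need evidence before answering.", "search", "ReAct benchmarks")
    hits = trace[-1]["observation"]
    if hits:
        doc_id, text = hits[0]
        return ("The snippet answers the question.", "finish", f"{text} [{doc_id}]")
    return ("Nothing found; stop rather than guess.", "finish", "No evidence found.")


def react(question: str, policy: Callable[[str, list[dict]], tuple[str, str, str]],
          max_steps: int = 4) -> tuple[str, list[dict]]:
    trace: list[dict] = []
    for _ in range(max_steps):
        thought, action, arg = policy(question, trace)   # think
        if action == "finish":
            return arg, trace
        observation = TOOLS[action](arg)                 # act
        trace.append({"thought": thought, "action": action,
                      "arg": arg, "observation": observation})  # observe
    return "Step limit reached without an answer.", trace


answer, trace = react("Which benchmarks was ReAct tested on?", scripted_policy)
for step in trace:
    print("Thought:", step["thought"], "| Action:", step["action"], step["arg"])
print("Answer:", answer)
```

The citation tag in the answer comes straight from the observation, so every claim can be traced back to a retrieved document.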

RAG with Verification

Call retrieval first, draft an answer, then invoke a verification tool or a second model acting as an LLM-as-a-judge to check the claims. Enforce JSON evidence objects using Structured Outputs so downstream services can log, score, and display citations without brittle parsing.
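A minimal sketch of a JSON evidence object and a verification pass over it. The Evidence fields are assumptions rather than a standard format, and the verbatim-quote check is a deliberately simple deterministic substitute for an LLM-as-a-judge; a judge model would instead receive each claim plus its cited source and return the same supported flag. The retrieved document and draft claims are invented.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Evidence:
    claim: str      # sentence from the draft answer
    source_id: str  # id of the retrieved document the claim relies on
    quote: str      # exact supporting span from that document


def verify(evidence: list[Evidence], retrieved: dict[str, str]) -> list[dict]:
    """A claim passes only if its quote appears verbatim in the cited retrieved doc."""
    report = []
    for ev in evidence:
        doc = retrieved.get(ev.source_id, "")
        report.append({**asdict(ev), "supported": bool(ev.quote) and ev.quote in doc})
    return report


retrieved = {"kb-7": "Orders placed on the website ship within 2 business days."}
draft = [
    Evidence("Web orders ship in two business days.", "kb-7", "ship within 2 business days"),
    Evidence("Shipping is always free.", "kb-7", "free shipping"),
]
print(json.dumps(verify(draft, retrieved), indent=2))
```

Because the report is plain JSON, unsupported claims can be logged, scored, or stripped from the answer before it reaches the user.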

Operations Automation

Map narrow workflows to single tools, then return typed results your app can act on. In other words, pick one small job your app repeats often and give that job a single tool.

A narrow job has one clear input and one clear output.

One tool per job keeps the model’s choice obvious and the code easy to test.

The tool must return a typed JSON result that your app can use immediately.

What does “typed result” mean?

Your tool returns JSON that follows a schema you define.

```json
{
  "order_id": "AB12-9XYZ",
  "status": "shipped",
  "carrier": "DHL",
  "eta": "2025-09-29",
  "tracking_url": "https://example.com/track/AB12-9XYZ"
}
```

Your UI can render this without guessing field names or formats.
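On the backend, one way to guarantee the tool always returns this shape (an assumption, not the only approach) is to build the result from a typed class and serialize it, so a missing or malformed field fails inside the tool rather than in the UI. The OrderStatus class, the ALLOWED_STATUSES set, and the canned data are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date

ALLOWED_STATUSES = {"processing", "shipped", "delivered", "cancelled"}  # hypothetical


@dataclass(frozen=True)
class OrderStatus:
    order_id: str
    status: str
    carrier: str
    eta: str           # ISO 8601 date, e.g. "2025-09-29"
    tracking_url: str

    def __post_init__(self) -> None:
        # Fail fast in the tool, not in the UI, if the data is malformed.
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status: {self.status}")
        date.fromisoformat(self.eta)  # raises ValueError for a bad date string


def lookup_order_status(order_id: str) -> str:
    # Hypothetical data; a real tool would query the order service.
    result = OrderStatus(order_id, "shipped", "DHL", "2025-09-29",
                         f"https://example.com/track/{order_id}")
    return json.dumps(asdict(result))


print(lookup_order_status("AB12-9XYZ"))
```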

Where do Agent SDKs help?

An Agent SDK sits above your tools and does three useful jobs:

Routing: It decides which single tool to call based on the user request.

Tracing: It logs inputs, outputs, and timing so you can debug and measure.

Policies: It enforces rules like “refunds only for paid orders” or “no writes on main.”

You write less glue code because the SDK handles these cross-cutting concerns.
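To see what an SDK takes off your plate, the three jobs can be sketched by hand. This is not the OpenAI Agents SDK API: the traced decorator, the keyword router, the PAID_ORDERS set, and the policy function are hypothetical names chosen for illustration.

```python
import functools
import time
from typing import Callable, Optional

TRACE: list[dict] = []       # in-memory trace log; an SDK would export this for you
PAID_ORDERS = {"AB12-9XYZ"}  # hypothetical data backing the refund policy


def traced(fn: Callable[..., dict]) -> Callable[..., dict]:
    """Tracing: log each call's inputs, output, and duration in milliseconds."""
    @functools.wraps(fn)
    def wrapper(**kwargs) -> dict:
        start = time.perf_counter()
        output = fn(**kwargs)
        TRACE.append({"tool": fn.__name__, "input": kwargs, "output": output,
                      "ms": round((time.perf_counter() - start) * 1000, 3)})
        return output
    return wrapper


@traced
def lookup_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}


@traced
def issue_refund(order_id: str) -> dict:
    return {"order_id": order_id, "refunded": True}


def refunds_only_for_paid(args: dict) -> None:
    """Policy: block refunds for orders that were never paid."""
    if args.get("order_id") not in PAID_ORDERS:
        raise PermissionError("refunds only for paid orders")


# Routing table: keyword -> (tool, optional policy). Keyword matching is a
# simplification; an agent framework would typically let the model pick the tool.
ROUTES: dict[str, tuple[Callable[..., dict], Optional[Callable[[dict], None]]]] = {
    "refund": (issue_refund, refunds_only_for_paid),
    "status": (lookup_order_status, None),
}


def handle(request: str, **args) -> dict:
    """Route a request to one tool, enforce its policy, then run it (traced)."""
    for keyword, (tool, policy) in ROUTES.items():
        if keyword in request.lower():
            if policy is not None:
                policy(args)  # raises before the tool runs if the rule is violated
            return tool(**args)
    raise LookupError(f"no tool for request: {request!r}")


print(handle("What's the status of my order?", order_id="AB12-9XYZ"))
print(TRACE[-1]["tool"], TRACE[-1]["ms"], "ms")
```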

Engineering Assistants

Use MCP-backed tools for code search, repo operations, and deployment helpers inside IDEs or chat. MCP standardizes connectors across data and services; early adopters named at its launch included Block and Apollo, along with developer-tool companies such as Zed, Replit, Codeium, and Sourcegraph, signaling growing ecosystem support.

Closing

Tool calling turns models into dependable product components by enforcing structure, grounding answers in tools, and enabling safe actions. Product leaders get reliability, auditability, and speed when schemas, MCP connectors, and Agent SDKs work together.

As a product leader, you can amplify your prompting skills by incorporating tool calling into your prompts. This lets you build products that are better aligned with your users’ needs.