Growth And Retention In An AI-first World | Takeaways For Founders And Product Leaders

AI makes products feel magical at first, but only trust, habit, and problem frequency turn novelty into durable retention.

TLDR: This blog explains why smart AI features alone don't create lasting user habits or growth. It covers three key insights: excitement doesn't become habit without intentional design; retention depends on how often users naturally need your product, not how smart it is; and products grow when they solve shared problems, not just individual ones. Readers will learn why forcing engagement backfires, why aligning with users' natural workflows matters, and how collaboration drives real stickiness. The main takeaway is simple: AI products succeed by becoming useful to groups, not by being brilliant alone.

Introduction

Founder Intro: Growth & Retention in an AI-First World

One of the most persistent misconceptions in AI right now is that intelligence alone drives growth.

Build something impressive enough, the thinking goes, and users will keep coming back. Retention will take care of itself. Distribution will follow naturally.

In practice, the opposite is happening.

AI products are getting better faster than teams are learning how to retain users, build trust, and compound growth over time. Excitement is abundant. Habit is rare.

Panel 3 was designed to confront that gap directly.

Rather than focusing on models or capabilities, we wanted to examine a harder set of questions:

What actually makes AI products stick?

What drives durable growth once the novelty wears off?

And how do retention dynamics change in an AI-first world?

To explore those questions, we brought together operators who have spent years studying — and living inside — growth systems at scale:

Aaron Cort, Growth & Marketing Partner at Craft Ventures, advising and operating across some of the fastest-growing AI and SaaS companies

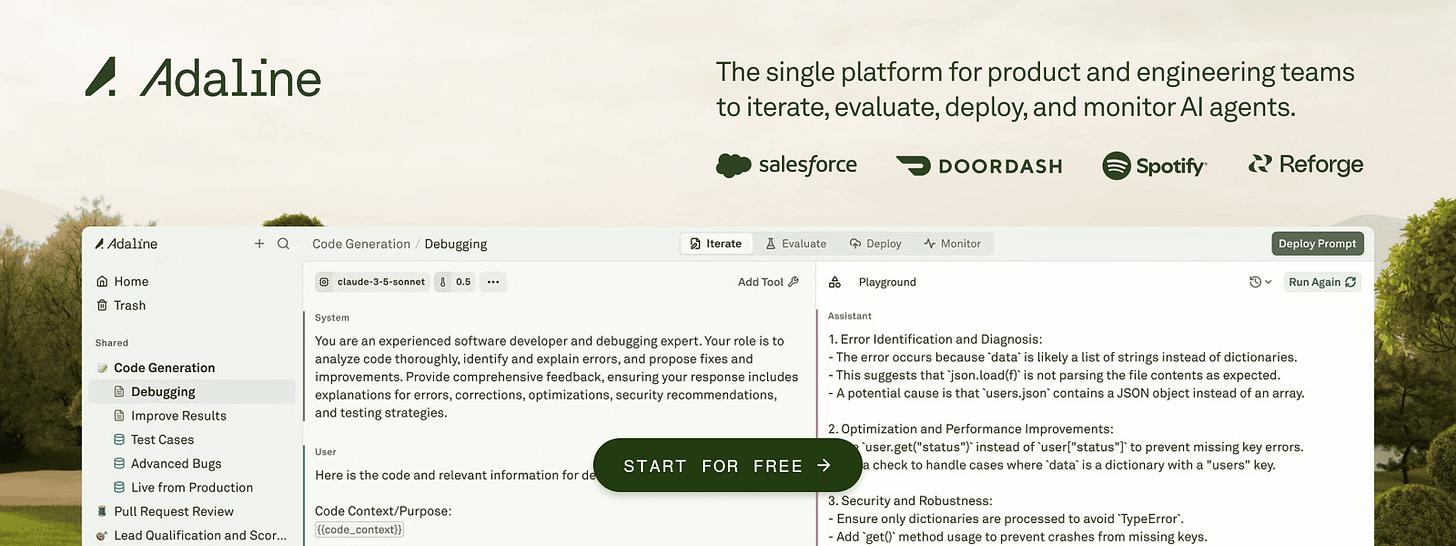

Brian Balfour, Founder & CEO at Reforge, who has shaped how an entire generation of operators thinks about growth and retention

Bryce Hunt, Founding GTM at Cognition, working at the frontier of agent-native products and new go-to-market motions

Gaurav Vohra, Advisor and Head of Growth at Superhuman, where precision, trust, and habit are non-negotiable

What emerged was a clear reframing of growth in the AI era.

This panel wasn’t about hacks, channels, or short-term tactics. It was about fundamentals — how problem frequency governs retention, why trust is the real retention loop, how onboarding becomes more critical (not less), and why community and personal brand are increasingly powerful growth multipliers.

Perhaps most importantly, it surfaced a shared conviction:

AI doesn’t change the laws of growth.

It exposes when teams ignore them.

The sections that follow break down the core lessons from this conversation — from why hype fades faster than habit, to why motion choice constrains everything, to why the most defensible layer in many AI companies today is human trust.

If you’re building an AI product and wondering why early excitement isn’t translating into durable usage — or how to design growth systems that actually compound — this panel offers a grounded, experience-driven place to start.

1. Hype Is Easy — Habit Is the Hard Part

One of the most consistent themes across this panel was the widening gap between initial excitement and durable usage in AI products.

AI excels at creating “wow” moments.

New users are impressed by:

Instant results.

Intelligent-sounding outputs.

Dramatic productivity claims.

Novelty-driven breakthroughs.

As Brian Balfour, Founder & CEO of Reforge, pointed out, this has created a dangerous illusion in the market: teams mistake interest for retention.

The Illusion of Early Traction

AI products today are exceptionally good at:

Generating excitement.

Creating impressive first impressions.

Driving short-term spikes in usage.

These early signals feel like momentum. Dashboards light up. Activation looks strong. Engagement graphs climb.

But as multiple speakers emphasized, very few AI products convert that excitement into habit.

Usage drops sharply once novelty fades. Sessions become sporadic. Power users emerge — but the majority quietly churn.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, noted during the panel, this is one of the most common failure patterns he sees across AI companies: strong top-of-funnel interest paired with weak behavioral lock-in.

Habit Is Not a Side Effect of Intelligence

A critical distinction surfaced early in the discussion:

Habit does not emerge automatically from impressive capability.

Habit forms when a product:

Solves a recurring problem.

Delivers consistent value.

Reinforces usage at the right cadence.

AI often accelerates the first interaction — but it does not guarantee the second, third, or tenth.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, framed it, delight gets users to try. Reliability gets them to stay.

The Missing Link: Problem Frequency

Several speakers emphasized that many AI products fail because they misunderstand how often users naturally experience the problem being solved.

If a product:

Solves a weekly problem,

But is designed for daily engagement,

Or pushes frequent prompts to manufacture usage,

it creates friction, not habit.

This mismatch leads to:

Forced engagement.

Notification fatigue.

User resentment.

Eventual churn.

AI doesn’t change the natural frequency of a problem; it only exposes when teams ignore it.

Shallow Engagement Looks Like Growth (Until It Doesn’t)

One of the more subtle warnings from the panel was about engagement theater.

Short sessions, repeated trials, and sporadic experimentation can look like healthy usage in aggregate. But without a clear, repeatable value loop, that engagement is fragile.

As Bryce Hunt, Founding GTM at Cognition, described from the frontier of agent-native products, users will experiment enthusiastically — right up until they don’t trust the system to deliver reliably when it matters.

At that point, usage collapses.

From Hype to Habit Requires Intentional Design

The panel was clear that the transition from hype to habit is not accidental.

It requires:

A deep understanding of the underlying user problem.

Clarity on when and why users should return.

Consistent value delivery, not sporadic brilliance.

Reinforcement at a cadence that matches real behavior.

Without these elements, AI products experience:

Rapid churn.

Novelty decay.

Shallow engagement disguised as growth.

The Core Insight

AI makes it easier than ever to impress users once.

It does not make it easier to earn a place in their daily — or weekly — routine.

As this panel made clear, habit is not created by intelligence alone.

It’s created by relevance, consistency, and trust — delivered over time.

2. Retention Is Governed by the Natural Frequency of the Problem

Early in the panel, a foundational concept surfaced — and then kept resurfacing in different forms:

Retention is constrained by how often users naturally encounter the problem you solve.

No amount of AI sophistication can override that constraint.

You can improve how a problem is solved.

You can reduce friction.

You can increase quality.

But you cannot change:

How frequently the user feels the pain.

How urgent it is when it appears.

Whether it belongs in their daily, weekly, or occasional workflow.

As Brian Balfour, Founder & CEO of Reforge, emphasized, retention mechanics are downstream of reality — not product ambition.

AI Doesn’t Change Problem Frequency — It Reveals It

One of the traps AI companies fall into is assuming that intelligence increases usage frequency.

It doesn’t.

AI can:

Make a task faster.

Make a task easier.

Make a task more impressive.

But if the task only matters once a week, daily usage is artificial.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, noted during the discussion, many AI products feel pressure to justify venture-scale expectations by forcing daily engagement — even when the underlying problem doesn’t support it.

That pressure often leads to bad decisions.

Forced Engagement Backfires

When companies try to:

Force daily usage for a weekly problem,

Manufacture engagement through notifications, or

Inflate frequency with alerts, nudges, or reminders,

they don’t create a habit.

They create:

Worse products.

User fatigue.

Eroded trust.

Eventual churn.

Users don’t interpret forced engagement as helpful.

They interpret it as noise.

AI amplifies this effect because the expectations are higher. If a system claims intelligence but interrupts users unnecessarily, the disappointment is sharper.

Criticality Matters as Much as Frequency

The panel also highlighted that frequency alone isn’t enough — criticality matters.

Some problems occur infrequently but are extremely important when they do. Others occur often but are low-stakes.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained, retention emerges when a product aligns with moments that matter. If users don’t feel meaningful relief or leverage when the problem appears, they won’t return — no matter how impressive the solution is.

AI products that misunderstand this often chase engagement metrics instead of solving meaningful pain.

Misaligned Cadence Creates Product Friction

A recurring failure pattern described on the panel looked like this:

The product solves a real problem.

The solution works well.

But the cadence of engagement doesn’t match the user’s life.

Daily prompts for a weekly task.

Constant nudges for occasional workflows.

Persistent reminders for low-urgency problems.

The result isn’t retention — it’s resistance.

As Bryce Hunt, Founding GTM at Cognition, pointed out from the edge of agent-driven products, users quickly disengage when a system feels like it’s working for itself, not for them.

AI Makes Violations More Obvious

One of the sharpest insights from the panel was this:

AI does not change the natural frequency law — it only amplifies violations of it.

Because AI systems are more visible, more interactive, and more assertive, misalignment shows up faster.

Users don’t quietly tolerate friction.

They disengage.

What might have taken months to surface in traditional software becomes obvious in weeks — sometimes days — in AI products.

The Practical Takeaway

Retention doesn’t come from intelligence alone.

It comes from alignment.

Teams that succeed:

Identify the natural cadence of the problem.

Design engagement around that cadence.

Resist the urge to force frequency.

Measure success by consistency, not volume.

In an AI-first world, respecting user reality is the fastest path to durable retention.
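One way to make "consistency, not volume" concrete is to score each user against the cadence of the problem rather than against the calendar. The sketch below is illustrative only: `period_consistency`, the sample dates, and the 7-day cadence are assumptions made for this example, not something the panel prescribed.

```python
from datetime import date, timedelta


def period_consistency(active_days, start, end, cadence_days):
    """Fraction of cadence-length periods in [start, end) with at least one active day.

    cadence_days is the assumed natural frequency of the problem
    (for example 7 for a weekly task). It is an input here; this
    function cannot discover it for you.
    """
    if cadence_days <= 0 or end <= start:
        raise ValueError("need a positive cadence and a non-empty window")
    total_periods = 0
    active_periods = 0
    period_start = start
    while period_start < end:
        # The last period is truncated if the window is not a multiple of the cadence.
        period_end = min(period_start + timedelta(days=cadence_days), end)
        total_periods += 1
        if any(period_start <= d < period_end for d in active_days):
            active_periods += 1
        period_start = period_end
    return active_periods / total_periods


# Hypothetical user who opens the product once a week for four weeks.
weekly_user = [date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15), date(2024, 1, 22)]
window_start, window_end = date(2024, 1, 1), date(2024, 1, 29)

weekly_score = period_consistency(weekly_user, window_start, window_end, cadence_days=7)
daily_score = period_consistency(weekly_user, window_start, window_end, cadence_days=1)
print(f"weekly cadence: {weekly_score:.2f}, daily cadence: {daily_score:.2f}")
```

Judged against a weekly cadence this user is fully retained. Judged against a daily one, the same behavior looks like failure, which is the kind of reading that tempts teams into forcing frequency.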

3. Growth Comes From Solving Shared Problems, Not Isolated Ones

Another strong theme that emerged from the panel was the importance of multi-user relevance.

Many AI products begin by delivering clear value to an individual user. That’s often the right starting point. It simplifies onboarding, shortens time-to-value, and helps teams validate core utility quickly.

But as the panel made clear, durable growth rarely stops at the individual.

Individual Value Is Necessary — But Not Sufficient

AI products are especially good at creating powerful single-player experiences.

They help users:

Think faster.

Produce better outputs.

Automate personal workflows.

Feel individually empowered.

This often leads to strong early adoption.

But as Brian Balfour, Founder & CEO of Reforge, emphasized, products that remain purely individual struggle to compound. They grow linearly, not exponentially. Each new user must be acquired independently, and retention alone has to carry the entire growth story.

That’s a hard ceiling.

Shared Problems Unlock Compounding Growth

The most durable products discussed on the panel followed a different arc.

They:

Start with individual value: Solving a clear, personal pain point.

Expand into shared contexts: Teams, organizations, or communities.

Embed themselves into collaboration: Where work is coordinated, reviewed, or acted upon together.

This transition unlocks:

Natural network effects.

Lock-in through shared workflows.

Organic distribution via collaboration.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained, once a product becomes part of how people work together, switching costs become emotional and operational — not just technical.

AI Amplifies Collaboration — When Designed For It

AI has the potential to accelerate this transition, but only if products are designed intentionally.

When AI outputs are:

Easily shareable,

Reviewable by others,

Editable collaboratively, and

Embedded in team workflows,

they create natural reasons for expansion.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, noted during the panel, many of the strongest AI companies see growth inflection not when the product gets smarter, but when it becomes socially necessary within a team.

Single-Player Products Hit a Wall

The panel was also clear about the risks of staying single-player for too long.

AI products that remain isolated experiences often:

Depend heavily on paid acquisition.

Struggle to create organic loops.

Face high churn when usage is optional.

Fail to embed themselves into daily work.

Even when the individual experience is strong, growth plateaus.

As Bryce Hunt, Founding GTM at Cognition, shared from the perspective of agent-native products, the moment AI systems begin influencing shared outcomes — codebases, decisions, deliverables — adoption dynamics change dramatically. Teams care. Conversations start. Distribution accelerates.

Collaboration Creates Accountability — and Stickiness

Another subtle benefit of shared problems is accountability.

When work is:

Visible to others,

Reviewed collaboratively, and

Dependent on multiple stakeholders,

usage becomes harder to abandon quietly.

Products that live inside shared workflows benefit from:

Social reinforcement.

Collective habit formation.

Stronger norms around usage.

This doesn’t require viral mechanics.

It requires relevance to how people already work together.

The Practical Takeaway

AI products don’t compound by being smarter alone.

They compound by becoming collectively useful.

The most durable growth comes from:

Starting with individual value.

Expanding into shared problems.

Embedding into collaboration.

Letting distribution emerge naturally.

In an AI-first world, growth follows shared utility — not isolated brilliance.

4. AI Raises the Bar for Onboarding — Not Lowers It

One of the more counterintuitive conclusions from the panel was this:

AI does not make products easier to adopt.

It often makes them harder.

Despite early expectations that intelligence would reduce friction, the opposite pattern has emerged in practice — especially once products reach real users.

AI Introduces New Kinds of Friction

Traditional software is predictable.

AI systems are not.

AI products introduce:

Nondeterministic behavior.

Unfamiliar mental models.

Probabilistic outcomes.

Workflows users haven’t seen before.

Even when the product is powerful, users often don’t know:

What to expect.

How to judge success.

When the system is confident.

When they should intervene.

As Brian Balfour, Founder & CEO of Reforge, emphasized, this creates a gap between capability and confidence. And without confidence, users don’t stick.

Self-Serve Onboarding Breaks Earlier Than Teams Expect

A recurring theme across the panel was that self-serve onboarding fails much earlier in AI products than in traditional SaaS.

Many teams assume that:

Users will experiment.

Value will reveal itself.

Intelligence will “sell” the product.

In reality, users often stall immediately.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, noted, AI products place a higher cognitive burden on users. When people don’t understand how to succeed quickly, they disengage — even if the product is technically impressive.

The failure isn’t loud.

It’s silent.

Early Handholding Accelerates Learning

The fastest-learning companies described on the panel didn’t avoid human involvement — they leaned into it.

They:

Onboarded users personally.

Walked them through first successes.

Observed where confusion emerged.

Adjusted workflows based on real behavior.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained, early handholding isn’t a scaling failure — it’s a learning accelerator. It shortens the feedback loop between what teams think users understand and what users actually experience.

Onboarding Is About Education, Not Explanation

A subtle but important distinction emerged around onboarding intent.

Onboarding isn’t about:

Explaining features.

Listing capabilities.

Documenting everything the system can do.

It’s about teaching users how to think with the product.

That means:

Showing what good usage looks like.

Defining boundaries clearly.

Guiding users through successful outcomes.

Correcting misuse early.

As Bryce Hunt, Founding GTM at Cognition, pointed out from the frontier of agent-based products, onboarding is often the moment where trust is either established or permanently lost.

Learning Speed Beats Go-To-Market Speed

Perhaps the most important reframe of the section was this:

The fastest-growing AI companies prioritize learning speed over go-to-market speed.

They don’t rush to scale acquisition before:

Understanding user confusion.

Clarifying workflows.

Stabilizing outcomes.

They accept slower early growth in exchange for:

Stronger retention.

Clearer value propositions.

More predictable expansion later.

In AI, onboarding is not a cost center.

It’s where product truth is discovered.

The Practical Takeaway

AI raises expectations — and uncertainty — at the same time.

That makes onboarding more important, not less.

Teams that succeed:

Invest heavily in early education.

Embrace guided experiences.

Treat onboarding as a product system.

Learn from confusion instead of ignoring it.

In an AI-first world, great onboarding isn’t about removing friction — it’s about removing uncertainty.

5. Product-Led ≠ Hands-Off

One of the clearest misconceptions to surface on the panel concerned what product-led actually means in an AI-first world.

Too often, product-led growth is interpreted as:

Zero human involvement.

Fully self-serve from day one.

No guidance or intervention.

No opinionated direction.

The panel was unequivocal: this interpretation breaks down quickly in AI products.

Product-Led Is About Where Value Is Created — Not Who’s Involved

At its core, product-led growth means that the product is the primary driver of value realization.

It does not mean:

Users are left alone to figure things out.

Teams remove themselves from the learning loop.

Human touch is a failure mode.

As Brian Balfour, Founder & CEO of Reforge, emphasized, product-led growth (PLG) is about value delivery, not absence of people. Confusing the two leads teams to optimize for scale before they’ve learned what actually works.

AI Products Need Human Scaffolding Early

AI introduces uncertainty in ways traditional software does not.

Users often:

Don’t know what’s possible.

Don’t know how to judge outputs.

Don’t know when they’re using the product “correctly”.

In this context, early human involvement is not optional.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, explained, the most effective AI companies use concierge onboarding early — not to sell, but to observe. Watching how users struggle, succeed, and misunderstand the product surfaces insights that no dashboard ever will.

Human Feedback Accelerates Product Discovery

Several speakers described how early human touch dramatically shortened product discovery cycles.

By staying close to users, teams were able to:

Identify confusing workflows quickly.

Understand which outputs actually mattered.

Separate novelty from real value.

Refine positioning before scaling acquisition.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, noted, this human feedback loop is often the difference between a product that feels impressive and one that earns trust.

Learning Cycles Matter More Than Scale at the Start

A recurring warning from the panel was about premature scaling.

AI products that rush to:

Remove human touch,

Automate everything, and

Maximize self-serve acquisition,

often do so before they’ve stabilized value delivery.

As Bryce Hunt, Founding GTM at Cognition, shared from the frontier of agent-based products, early scale amplifies misunderstanding just as fast as it amplifies success. If users are confused at small scale, they’ll be lost at large scale.

The Strategic Use of Human Touch

The panel offered a more nuanced model for PLG in AI:

Use human involvement intentionally early

To teach.

To observe.

To learn.

Identify repeatable patterns of value

Where users succeed without help.

Where workflows stabilize.

Where trust is earned.

Replace human touch deliberately

With product affordances.

With opinionated flows.

With automation that reflects real usage.

The goal is not to avoid human touch — it’s to earn the right to remove it.

The Practical Takeaway

In AI products, product-led does not mean hands-off.

It means:

The product leads value creation.

Humans accelerate learning.

Automation follows understanding.

Teams that treat PLG as an excuse to disengage learn slowly.

Teams that treat PLG as a system — with humans embedded early — learn fast.

In an AI-first world, strategic human involvement is not a growth liability. It’s a competitive advantage.

6. Motion Choice Is a Strategic Constraint, Not a Tactic

One of the most direct — and least hedged — messages from the panel was about go-to-market motion.

Being “in the middle” is the worst place to be.

This wasn’t framed as a tactical mistake.

It was framed as a structural one.

GTM Motion Shapes Everything That Follows

The panel was clear that GTM motion is not something you “optimize later.”

It determines:

How products are built.

How onboarding works.

How trust is earned.

How quickly deals close.

How economics scale.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, emphasized, motion choice constrains what’s possible long before it shows up in metrics. Teams that delay this decision often find themselves stuck with a product that doesn’t cleanly support any motion.

Why the Middle Collapses

Several speakers described the same failure pattern:

The product isn’t self-serve enough to convert quickly.

It isn’t enterprise-ready enough to close confidently.

Sales cycles stretch.

Security reviews stall.

Legal friction increases.

Economics break.

This “hybrid by default” approach sounds flexible. In practice, it creates friction everywhere.

As Brian Balfour, Founder & CEO of Reforge, noted, ambiguity in motion leads to ambiguity in execution. Teams don’t know whether to optimize for conversion speed or deal size — and end up doing neither effectively.

Pure Sales-Led Is Fragile in AI

The panel was equally candid about the limits of traditional sales-led motions in AI.

Pure sales-led AI companies often struggle because:

Products evolve too quickly for long sales cycles.

Value is hard to fully demonstrate upfront.

Buyers want proof through usage, not promises.

Model behavior can’t be perfectly specified in contracts.

This doesn’t make sales irrelevant — but it makes sales-first strategies fragile, especially early.

Hybrid Motions Hit Real-World Friction

Hybrid motions — product-led entry with early sales involvement — sound attractive in theory.

In practice, the panel noted that they often collapse under:

Security reviews.

Legal scrutiny.

IT procurement processes.

Unclear ownership.

Without a clear product-led wedge or a true enterprise motion, teams get stuck negotiating before value is experienced.

The Two Motions That Actually Work

Across the discussion, the panel converged on two viable extremes:

1. Product-Led (Sales Layered Later)

Clear self-serve value.

Fast time-to-first-success.

Minimal friction to try.

Sales introduced after usage and trust are established.

2. Forward-Deployed Engineering

Deep customer involvement.

Hands-on implementation.

High-touch workflows.

Clear value before scale.

As Bryce Hunt, Founding GTM at Cognition, explained from the frontier of agent-native products, forward-deployed work isn’t a fallback — it’s often the fastest way to learn when problems are complex and trust is critical.

Ambiguity Is the Real Enemy

What failed consistently were companies that tried to keep all options open.

Ambiguous motion leads to:

Slow deals.

Broken economics.

Unclear product priorities.

Stalled growth.

Teams hesitate. Buyers hesitate. Momentum dies quietly.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, put it earlier in the panel, clarity — even when it limits options — is what enables speed.

The Practical Takeaway

GTM motion is not a growth hack. It’s a strategic constraint.

The companies that win:

Choose a clear motion early.

Design the product around it.

Accept the tradeoffs.

Execute decisively.

In an AI-first world, clarity beats flexibility.

Choosing the right motion doesn’t guarantee success — but avoiding the decision almost guarantees failure.

7. Trust Is the New Retention Loop

Across multiple threads of the conversation, one idea kept surfacing in different forms:

Trust is the real retention mechanism in AI products.

Not novelty.

Not intelligence.

Not even habit on its own.

Users return when they trust the system.

Trust Is Built on Predictability, Not Perfection

The panel was clear that users don’t expect AI systems to be perfect.

They expect them to be understandable.

Users return when:

Outputs are predictable.

Behavior is consistent.

Failure modes make sense.

The system feels aligned with their intent.

As Brian Balfour, Founder & CEO of Reforge, emphasized, predictability is what allows users to form mental models. Without a mental model, there is no habit — only hesitation.

Randomness Destroys Confidence Faster Than Errors

Several speakers noted that randomness is more damaging than being wrong.

AI systems lose users when:

Results feel inconsistent.

Success feels accidental.

Similar inputs produce wildly different outcomes.

Behavior changes without explanation.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, explained, users can forgive known limitations. What they can’t tolerate is uncertainty about whether the product will work this time.

In AI products, confusion doesn’t just slow adoption — it actively repels it.
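A team that wants to watch for "similar inputs produce wildly different outcomes" could start with something as crude as the sketch below: run the same prompt several times and average the pairwise text similarity. This is an assumption-laden illustration. `generate` is a hypothetical stand-in for whatever produces the product's output, and surface similarity from the standard library's `difflib` is only a rough proxy for whether users would perceive the results as consistent.

```python
from difflib import SequenceMatcher
from itertools import combinations


def output_consistency(generate, prompt, runs=5):
    """Mean pairwise text similarity (0.0 to 1.0) across repeated runs of one prompt.

    `generate` is a hypothetical callable standing in for the product's
    output step; it is not a real library API.
    """
    if runs < 2:
        raise ValueError("need at least two runs to compare")
    outputs = [generate(prompt) for _ in range(runs)]
    scores = [SequenceMatcher(None, a, b).ratio() for a, b in combinations(outputs, 2)]
    return sum(scores) / len(scores)


# Stand-in generators for illustration only.
def stable_generate(prompt):
    return f"Summary of: {prompt}"


canned = iter(["Draft A: ship Friday", "zzz", "???"])


def erratic_generate(prompt):
    # Returns an unrelated canned string on each call.
    return next(canned)


stable_score = output_consistency(stable_generate, "weekly report", runs=3)
erratic_score = output_consistency(erratic_generate, "weekly report", runs=3)
print(f"stable: {stable_score:.2f}, erratic: {erratic_score:.2f}")
```

A score tracked over time for a fixed set of prompts would not explain why behavior changed, but it can flag that it did before users notice.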

Opaque Systems Feel Unaligned

A recurring theme was alignment.

Users trust systems that feel like they’re:

Working with them.

Respecting their intent.

Operating within understood boundaries.

When behavior is opaque, users assume misalignment — even if none exists.

As Bryce Hunt, Founding GTM at Cognition, described from the frontier of agent-based systems, trust collapses quickly when users don’t understand why the system acted the way it did. At that point, even good outcomes feel suspect.

Failure Modes Matter More Than Success Cases

One subtle but important insight from the panel was that users judge AI products by how they fail, not how they succeed.

When failure modes are:

Explainable,

Constrained, and

Recoverable,

trust grows.

When failures are:

Surprising,

Silent, and

Inconsistent,

users disengage.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, pointed out, trust isn’t built by eliminating failure — it’s built by making failure legible.

Trust Compounds Over Time

The panel repeatedly emphasized that trust behaves like a compounding asset.

Each predictable interaction:

Reinforces confidence.

Lowers cognitive load.

Increases willingness to rely on the system.

Over time, trust becomes the reason users return — even when alternatives exist.

Conversely, confusion compounds just as quickly.

Each unclear outcome:

Introduces doubt.

Raises friction.

Shortens patience.

Churn doesn’t usually happen after one bad experience.

It happens after several confusing ones.

The Core Retention Loop in AI

The panel implicitly described a new retention loop for AI products:

Predictability → Trust → Reuse → Deeper Reliance

Break that loop anywhere, and retention collapses.

As one speaker summarized succinctly:

Trust compounds.

Confusion churns.

The Practical Takeaway

In AI products, retention is not driven by how impressive the system is.

It’s driven by how safe it feels to rely on.

Teams that win:

Prioritize predictable behavior.

Surface boundaries clearly.

Design for understandable failure.

Align outputs with user intent.

In an AI-first world, trust isn’t a brand attribute — it’s a product property.

8. Onboarding Must Be Opinionated, Interruptive, and Interactive

One of the sharpest insights from the panel was that great AI onboarding behaves more like a game than a tutorial.

It doesn’t politely explain everything and hope users figure it out.

It actively guides behavior.

Neutral Onboarding Is a Silent Failure Mode

Many AI products default to neutral onboarding:

Feature tours.

Passive documentation.

Optional walkthroughs.

“Explore on your own” prompts.

The panel was blunt about the outcome: users fail silently.

As Brian Balfour, Founder & CEO of Reforge, noted, neutral onboarding shifts responsibility onto users at the exact moment they are least equipped to succeed. When users don’t know what “good usage” looks like, they hesitate — and hesitation kills momentum.

Opinionation Reduces Anxiety

Effective AI onboarding tells users exactly what to do.

It:

Prescribes the first action.

Narrows choices intentionally.

Removes ambiguity.

Defines success clearly.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, emphasized, opinionation reduces cognitive load. When users don’t have to decide how to start, they’re more likely to start at all.

In AI products especially, clarity feels like competence.

Interruption Is a Feature, Not a Bug

The panel also reframed interruption as a positive design choice.

Great onboarding:

Interrupts users at the right moments.

Stops them before misconfiguration.

Corrects behavior early.

Enforces setup steps.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained, early interruption prevents downstream confusion. Fixing misuse later is far more expensive — and often impossible once trust is lost.

Interrupting early is an act of respect.

Interaction Beats Explanation

Another recurring theme was that users don’t learn AI products by reading about them.

They learn by:

Doing.

Seeing outcomes.

Correcting mistakes.

Receiving immediate feedback.

As Bryce Hunt, Founding GTM at Cognition, shared from agent-native systems, onboarding that rewards interaction — not passive consumption — accelerates understanding dramatically.

A successful first experience is worth more than a complete explanation.

Forcing Correct Setup Early Pays Dividends

Several speakers emphasized the importance of enforcing correct setup early — even if it feels restrictive.

Opinionated onboarding:

Blocks users from skipping critical steps.

Validates inputs.

Ensures prerequisites are met.

Prevents false negatives.

This reduces:

Misuse.

Early failure.

Frustration blamed on the product.

As the panel made clear, letting users “explore freely” often leads to bad conclusions about the product’s value.
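As a rough illustration of what "blocks users from skipping critical steps" and "validates inputs" can look like in practice, the sketch below models setup as an ordered list of checks and surfaces the first unmet one. Every name here (`SetupStep`, `first_blocker`, the two steps, the config keys) is hypothetical and invented for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SetupStep:
    name: str
    is_valid: Callable[[dict], bool]  # returns True when the step is satisfied
    hint: str                         # shown to the user when it is not


def first_blocker(steps, config):
    """Return the first unmet step in order, or None when setup is complete."""
    for step in steps:
        if not step.is_valid(config):
            return step
    return None


# Hypothetical prerequisites for an imaginary AI writing assistant.
STEPS = [
    SetupStep("connect_source", lambda c: bool(c.get("source_url")),
              "Connect a document source first."),
    SetupStep("pick_goal", lambda c: c.get("goal") in {"summarize", "draft"},
              "Choose one goal: summarize or draft."),
]

incomplete = {"source_url": "https://example.com/docs"}
complete = {"source_url": "https://example.com/docs", "goal": "summarize"}

blocker = first_blocker(STEPS, incomplete)
if blocker is not None:
    # Interrupt here instead of letting the user reach an empty or misleading first run.
    print(f"Blocked at {blocker.name}: {blocker.hint}")
```

Ordering the steps and stopping at the first failure keeps the guidance to a single next action, in line with the point above about narrowing choices.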

Why Neutrality Fails in AI

Neutral onboarding assumes users:

Know what they want.

Understand system boundaries.

Can evaluate outputs accurately.

In AI products, these assumptions are almost always wrong.

Neutrality pushes responsibility onto users — and users fail silently.

Opinionation keeps responsibility where it belongs: with the product.

The Practical Takeaway

In AI onboarding:

Politeness is overrated.

Clarity is everything.

Guidance beats freedom early.

The best onboarding:

Tells users what to do.

Interrupts them when necessary.

Rewards correct interaction.

Teaches success through action.

In an AI-first world, great onboarding doesn’t wait for users to understand — it actively teaches them how to win.

9. Moats Are Shifting — Stacking Matters More Than Strength

One of the ideas the panel rejected most clearly was that AI companies can rely on a single, permanent moat.

That framing no longer holds.

Instead, the panel converged on a more nuanced — and more practical — view:

AI moats are time-bound.

They strengthen and weaken at different phases.

Durability comes from stacking and sequencing them.

The Myth of the Singular AI Moat

Early AI discourse often revolves around finding the moat:

Proprietary models.

Unique data.

Technical sophistication.

Speed of execution.

The panel was direct: no single advantage remains dominant for long.

As Brian Balfour, Founder & CEO of Reforge, noted, AI compresses competitive cycles. What feels defensible today often becomes baseline tomorrow. Teams that bet everything on one advantage eventually find themselves exposed.

Different Moats Peak at Different Times

Rather than dismissing moats entirely, the panel reframed them as phase-dependent.

Examples discussed included:

Data moats

Extremely strong once established.

Slow to build.

Often unusable early.

Most powerful after scale and repetition.

Brand moats

Can accelerate trust and adoption.

Fragile if product quality lags.

Difficult to repair once broken.

Distribution windows

Temporary but decisive.

Often tied to timing, channels, or platforms.

Missed windows rarely reopen.

Speed

No longer a differentiator.

Table stakes in AI.

Necessary but insufficient.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, emphasized, many AI companies fail not because they lack moats — but because they rely on the wrong one at the wrong time.

Stacking Creates Durability

The companies that endure don’t search for a silver bullet.

They stack advantages:

Speed early.

Distribution when available.

Brand as trust compounds.

Data as usage accumulates.

Each moat reinforces the others.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained, durability comes from overlap. When one advantage weakens, others compensate. This redundancy is what allows companies to survive competitive shocks.

Sequencing Matters as Much as Strength

Another subtle but important insight was that moats must be sequenced intentionally.

Building a data moat before you have distribution is pointless.

Pushing brand before reliability backfires.

Optimizing for speed without retention burns credibility.

As Bryce Hunt, Founding GTM at Cognition, shared, many AI startups mistake early momentum for defensibility — only to realize later that nothing was reinforcing it.

Momentum without structure decays quickly.

The Competitive Reality of AI

AI lowers the cost of imitation.

Features are copied faster.

Capabilities converge.

Execution gaps narrow.

In that environment, durability doesn’t come from being the strongest in one dimension.

It comes from being good enough across many — at the right times.

The Practical Takeaway

There is no permanent AI moat.

There are:

Temporary advantages.

Shifting strengths.

Strategic windows.

Compounding combinations.

The companies that win don’t chase the perfect moat.

They build a system of advantages that evolve as the market evolves.

In an AI-first world, stacking beats strength — and sequencing beats brilliance.

10. Brand Is Becoming Personal Again

One of the most striking themes to emerge near the end of the panel was a shift that’s easy to underestimate:

Brand is becoming personal again.

Not nostalgic.

Not performative.

Personal in a way that materially affects growth, trust, and retention.

Logos Don’t Carry Trust the Way They Used To

The panel noted that the environment around buyers and users has fundamentally changed.

Today:

Search is fragmented.

Feeds are noisy.

Information is overwhelming.

AI-generated content is everywhere.

In that world, traditional brand signals — logos, taglines, even company-level messaging — carry less weight than they used to.

Users don’t trust abstractions.

They trust people.

Trust Attaches to Opinionated Individuals

Across multiple threads, speakers pointed to the same pattern.

Users increasingly trust:

Individuals with clear points of view.

Builders who explain how they think.

Leaders who show up consistently over time.

People willing to be specific, not neutral.

As Brian Balfour, Founder & CEO of Reforge, noted, trust now accrues to those who reduce ambiguity. In a world of infinite answers, conviction becomes a signal.

Founder-Led Brand as a Growth Channel

The panel reframed founder-led (or leader-led) brand not as marketing — but as infrastructure.

When done well, personal brand becomes:

A distribution channel.

A trust shortcut.

A wedge into new audiences.

A retention lever for existing users.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, explained, many of the strongest AI companies today see disproportionate leverage from founders and leaders who actively articulate the product’s philosophy in public.

People don’t just buy the product — they buy the worldview.

Explanation Is the New Differentiator

AI products often struggle because users don’t understand why they work.

Founder-led brand helps close that gap.

When leaders:

Explain tradeoffs,

Share decisions,

Talk openly about constraints, and

Narrate progress and failure,

they make the product legible.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, pointed out earlier in the panel, explanation builds trust faster than polish. Users forgive imperfection when they understand intent.

Consistency Beats Virality

The panel was careful to separate personal brand from social performance.

This isn’t about:

Going viral.

Hot takes.

Constant posting.

It’s about:

Consistency.

Clarity.

Coherence over time.

As Bryce Hunt, Founding GTM at Cognition, noted, users don’t need constant visibility — they need repeated signals of alignment. Over time, that consistency compounds into trust.

The Most Defensible Layer Available

Perhaps the most important reframe of the section was this:

In an AI world where:

Features are copied,

Capabilities converge, and

Moats shift quickly,

human trust compounds slowly, and decays slowly.

For many AI companies, especially early, a founder- or leader-led brand may be the most defensible layer available.

Not because it’s impossible to copy — but because it’s impossible to fake sustainably.

The Practical Takeaway

Brand is no longer just how a company looks.

It’s:

How clearly its leaders think.

How openly they explain.

How consistently they show up.

In an AI-first world flooded with answers, people follow judgment.

And judgment, increasingly, wears a human face.

11. Community Is a Growth Multiplier, Not a Feature

As the panel wrapped, one final idea brought many of the earlier themes together:

Community is not a feature.

It’s a growth multiplier.

And like any multiplier, it only works when the underlying system is sound.

Community Is Not a Container

The panel was explicit about what community is not.

It is not:

A Slack group.

A Discord server.

A forum.

A channel you “launch.”

Those are containers.

Community is what happens inside them — if anything happens at all.

Too many AI companies mistake presence for participation and confuse access with value.

Real Community Is Shared Learning

What actually worked, according to the panel, was community built around learning.

The strongest communities shared:

How people were using the product.

What worked and what didn’t.

Failure modes and recovery patterns.

Evolving best practices.

As Brian Balfour, Founder & CEO of Reforge, noted, learning compounds when users can see each other thinking. In AI products especially, this shared sensemaking reduces fear and accelerates confidence.

Identity Drives Contribution

Another recurring insight was that community only works when contribution is rewarded.

Healthy communities give members:

Status through insight.

Recognition through contribution.

Identity through participation.

As Aaron Cort, Growth & Marketing Partner at Craft Ventures, emphasized, community isn’t about broadcasting updates — it’s about creating a place where users feel ownership over collective progress.

When contribution is visible, learning accelerates.

The Product Must Reinforce Belonging

The panel also stressed that community cannot live outside the product.

The most effective AI communities were reinforced by:

Product language.

Shared workflows.

Common artifacts.

Visible usage patterns.

As Bryce Hunt, Founding GTM at Cognition, shared, when users see themselves reflected in how a product is built — not just how it’s marketed — community becomes self-sustaining.

Belonging doesn’t come from access.

It comes from relevance.

Why Community Matters More in AI

AI products introduce uncertainty by default.

Users often ask:

“Am I using this correctly?”

“Is this result trustworthy?”

“Is everyone else confused, too?”

Community normalizes that uncertainty.

As Gaurav Vohra, Advisor and Head of Growth at Superhuman, explained earlier, seeing others wrestle with the same questions reduces anxiety and builds confidence faster than documentation ever could.

Community turns uncertainty into momentum.

Organic Growth Emerges From Shared Progress

When done well, community quietly powers growth.

It:

Spreads best practices.

Accelerates onboarding.

Reinforces habit.

Drives organic distribution.

Users don’t just adopt the product — they advocate for it, teach it, and evolve with it.

That’s not a feature. That’s leverage.

The Final Takeaway

Community doesn’t create growth by itself.

But when paired with:

Trust,

Clarity,

Shared learning, and

Visible contribution,

it multiplies everything else.

In AI products, where understanding is as important as capability, community becomes the fastest way to scale trust.

Not by telling users what to do — but by letting them learn together.