How Open-Source & Open-Weight Models Push Product Development

Open models are shifting the stack—from tools we use to agents that build.

Aug 5, 2025, was quite an eventful day for major AI labs. Most of them shipped new features and updates:

Anthropic released Claude Opus 4.1

Google DeepMind released Genie 3

xAI launched Grok Imagine

Alibaba released Qwen-Image and a new API

Meta AI released the Open Direct Air Capture dataset

But the release that excited a lot of people, including me, was OpenAI’s gpt-oss.

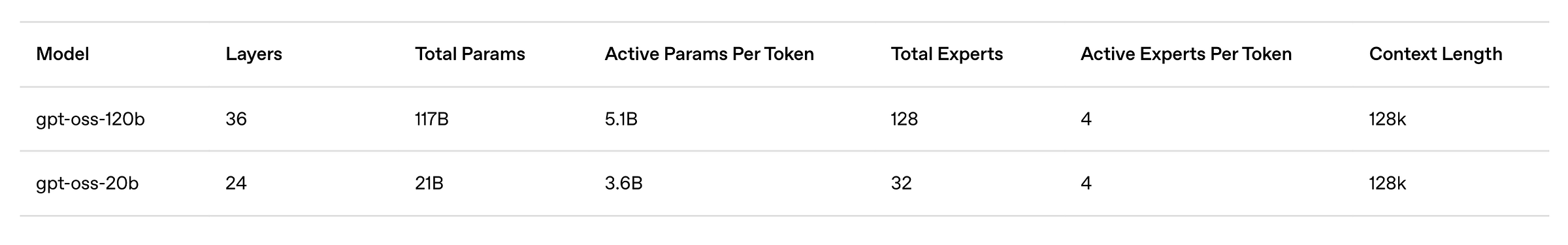

gpt-oss is a family of open-weight models, and OpenAI’s first open-weight language model release since GPT-2 in 2019. The models are released under the Apache 2.0 license and come in two sizes: gpt-oss-20b and gpt-oss-120b. They are designed for agentic workflows, with strong support for reasoning, tool use, instruction following, and structured outputs. They can be fine-tuned, run locally on consumer or enterprise-grade GPUs, and offer safety-aligned behavior by default.

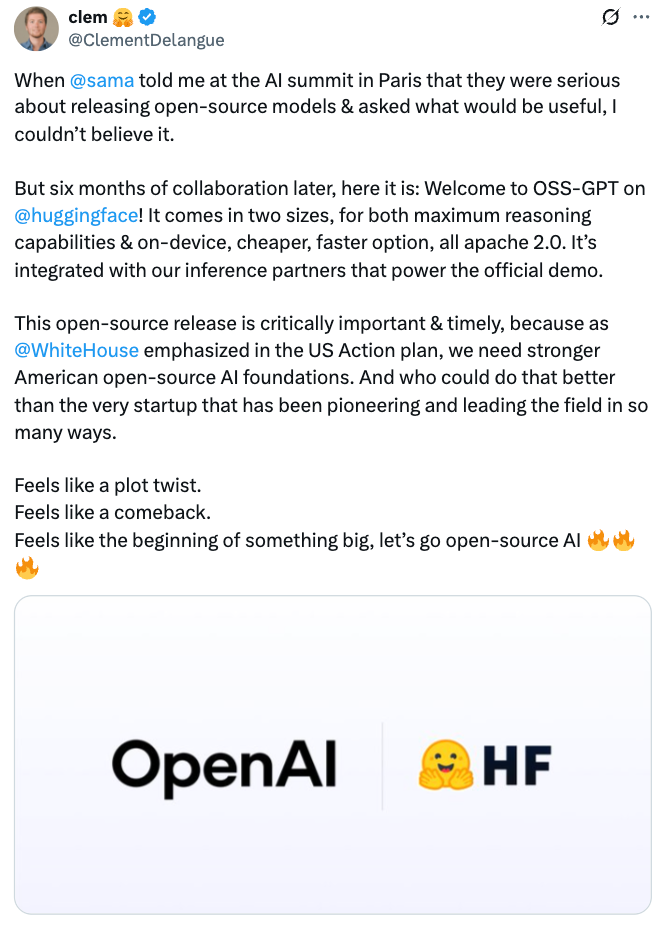

OpenAI collaborated with Hugging Face on the release, something that Sam Altman (CEO of OpenAI) and Clément Delangue (CEO of Hugging Face) had planned at the AI Action Summit in Paris in February 2025.

The gpt-oss (open-source software) release is a big deal for many individuals, teams, and engineers. After seeing it, I wanted to discuss what open models mean for product development.

How will it affect PMs? And how will agentic workflows transform product development?

But let me first tell you why 2025 is different from previous years when it comes to building products with AI.

Why 2025 Feels Different

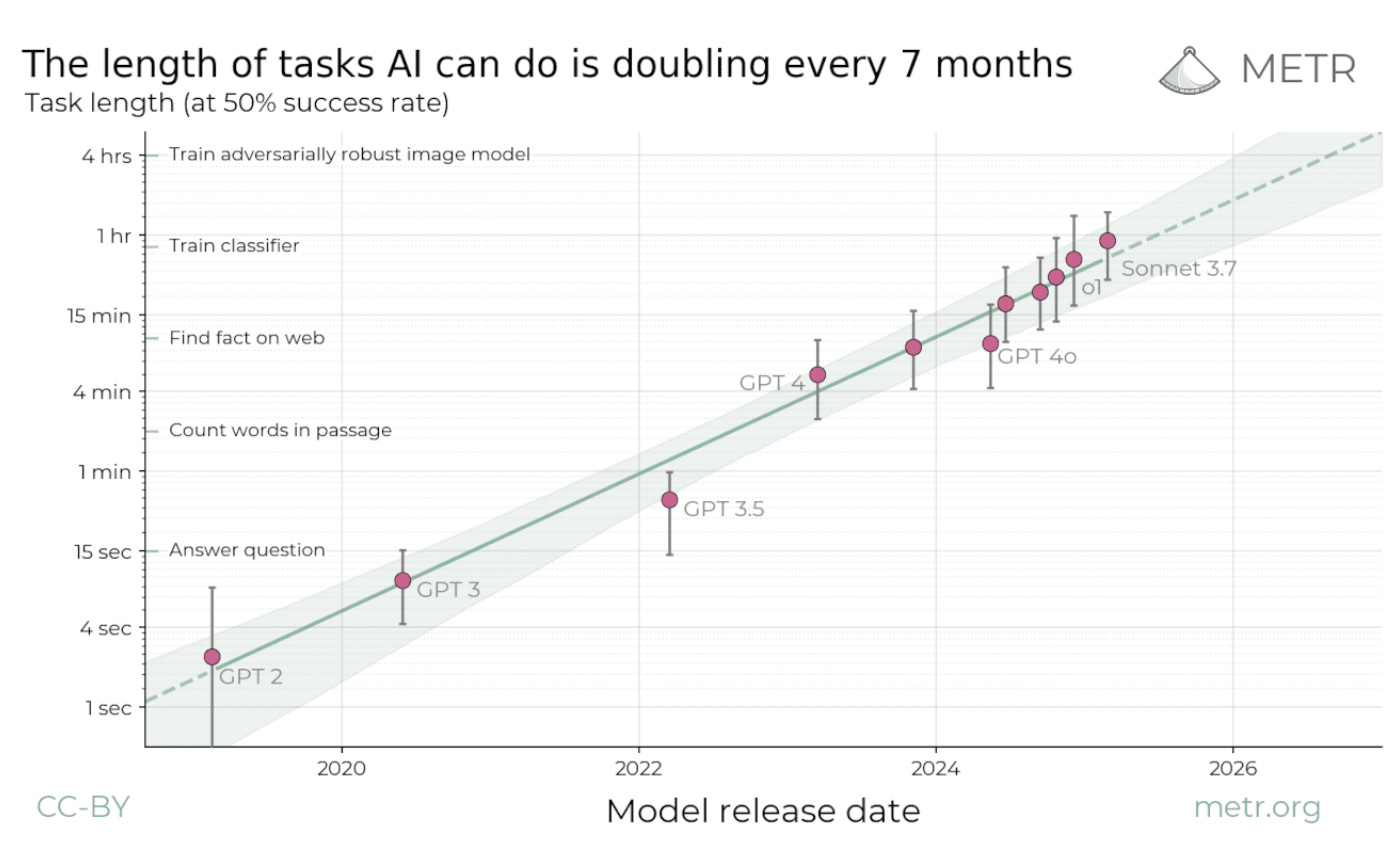

2025 isn’t just another year of model releases. It’s the year AI development crossed a threshold—and product teams are feeling the shift.

For starters, the economics broke.

Flat-rate pricing models are failing as token usage explodes. The more powerful the model, the more tokens it burns, in some cases up to 100× more for the same task. Reasoning models can also be slow: there are reports of o3 taking more than 15 minutes to answer something as trivial as “hello there”, a sign of how heavy reasoning and context loads can strain closed systems.

Subscription plans can’t keep up with power users who treat AI as infrastructure, not a chatbot.

Plans built for simple queries can’t support agent-based workflows that run 24/7. These agents don’t just generate answers; they run background tasks, chain tools, and make decisions that burn through context windows as if compute were free.

But it’s not.

And with new agents consuming compute 24/7, companies are being pushed toward vertical integration just to stay afloat.

At the same time, regulations are catching up. The EU AI Act and other global frameworks demand transparency.

That’s where open-weight models help, but they don’t go far enough.

Without training data or methodology, compliance becomes guesswork. Only true open-source models offer the traceability needed for serious systems.

Meanwhile, new models drop weekly. The line between closed and open performance is blurring. Meta, Mistral, OpenAI—they’re all building in the open now.

Well, partially.

OpenAI hasn’t open-sourced GPT-4 or GPT-4o, but with gpt-oss, they have released open-weight models built for reasoning and tool use.

Meta’s Llama 3 and 4 are open-weight under a community license.

Mistral’s models are open-weight, too, though not always fully open-source. So the shift isn’t total, but it’s directional. The open foundation is forming.

Agents are no longer tools; they’re builders. I realized this after the release of Grok 4 Heavy, which spins up multiple agents to get the job done.

Similarly, Vercel’s open SDKs let anyone spin up agents that write, deploy, and fix code. AI isn’t just writing code. It’s writing the infrastructure that writes code.

That’s why 2025 feels different. The stack is transforming, from humans using AI to AI building the stack itself.

The Vocabulary of Openness

Openness in AI isn’t a binary. It’s a spectrum with real consequences.

Whether you’re shipping a new feature, deploying an internal tool, or architecting agent systems, your choice between open-source, open-weight, and proprietary shapes what you can build, fix, or trust.

Open-source gives you the full recipe.

Model code, training pipeline, and sometimes even datasets—all public. This is where transparency lives. It’s reproducible, auditable, and flexible. You can retrain it, inspect every decision, and stay compliant. But it also asks more of you: infrastructure, expertise, maintenance. And in return, it removes ceilings.

Think: AI2’s OLMo, which publishes its weights, training code, and training data.

Open-weight sits in the middle.

You get the trained model, not how it was made. It’s fast to deploy and cheaper than training from scratch. But it’s opaque. You can fine-tune, but not re-trace. You get a head start—but not full control.

Examples: gpt-oss-20b, gpt-oss-120b, Llama 4 Scout, and Llama 4 Maverick.

Proprietary models are locked boxes with polished APIs. You pay to query, and in exchange, you get safety features, support, and scale. But you’re trusting what you can’t see. You can’t shape the model—you can only route around its limits.

Claude Opus 4.1, o3, and Grok 4 are good examples.

Understanding this vocabulary isn’t academic. It’s foundational. The kind of openness you choose shapes your product stack, deployment strategy, and future defensibility.

Enter the Agents — GPT-OSS as Proof-of-Concept

We’ve entered an era where AI doesn’t just assist in building software; it builds it. Tools like gpt-oss, Grok 4, and Vercel’s v0 mark a shift from AI as an assistant to AI as a co-developer.

What’s emerging is a new loop: prompt → plan → pull request—where the person steers, and the agent drives.

Example: a developer prompts an agent to fix a UI bug. The agent reads the codebase, drafts a plan, and creates a pull request with proposed changes.
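To make that loop concrete, here is a minimal Python sketch of prompt → plan → pull request with a human approval gate. Everything in it is hypothetical: `draft_plan` stands in for a model call (a real agent would ask a model such as gpt-oss to read the code and plan), the keyword match is a toy heuristic, and `PullRequest` is a plain dataclass, not any Git host’s API.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class PullRequest:
    title: str
    plan: list[str]
    diff: dict[str, str]   # path -> proposed new file contents
    approved: bool = False

def draft_plan(prompt: str, codebase: dict[str, str]) -> list[str]:
    """Hypothetical planner. A real agent would ask a model to read the code and
    propose steps; this stub targets files that mention the prompt's last word."""
    keyword = prompt.split()[-1].lower()
    return [f"edit {path}" for path, src in sorted(codebase.items())
            if keyword in src.lower()]

def apply_plan(plan: list[str], codebase: dict[str, str],
               fix: Callable[[str], str]) -> dict[str, str]:
    """Turn each plan step into a proposed edit without mutating the codebase."""
    diff = {}
    for step in plan:
        path = step.removeprefix("edit ")
        diff[path] = fix(codebase[path])
    return diff

def run_agent(prompt: str, codebase: dict[str, str],
              fix: Callable[[str], str],
              review: Callable[[PullRequest], bool]) -> PullRequest:
    plan = draft_plan(prompt, codebase)                   # prompt -> plan
    diff = apply_plan(plan, codebase, fix)                # plan -> proposed changes
    pr = PullRequest(title=prompt, plan=plan, diff=diff)  # changes -> pull request
    pr.approved = review(pr)                              # the human steers: approve or reject
    return pr

codebase = {"Button.tsx": "color: red; /* submit button */", "README.md": "Project docs"}
pr = run_agent("Fix the button", codebase,
               fix=lambda src: src.replace("red", "blue"),
               review=lambda pr: bool(pr.diff))
print(pr.plan, pr.approved)
```

The design choice worth noting: the agent never edits files directly. It proposes a diff, and the human decision lives in a single `review` callback.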

No back-and-forth.

No manual handoff.

Just outcome.

Agents are becoming primary users of your APIs. They read, reason, plan, and even ship.

gpt-oss is designed with this in mind. It handles structured reasoning, tool use, and instruction-following with a level of autonomy that makes it ideal for agentic workflows.

And it’s open, meaning developers can customize it, fine-tune it with their own methods, and deploy it without waiting on API rate limits.

Why does this matter? Because it changes who your product is for.

Consider this: the “user” might now be an agent triggering workflows, generating UI, or fixing bugs in real time.

That’s exactly how Vercel thinks about v0: not as a tool for devs, but as an autonomous web builder that reflects years of learned best practices. It can deploy in seconds, fix issues, and scale across use cases, without a human writing a single line.

This is the generational leap. Agents aren’t assistants anymore. They’re infrastructure. And open models like gpt-oss are how they begin.

Open agents aren’t sidekicks; they’re the first consumers of your API.

Ripple Effects on Product Strategy

Openness isn’t just a technical choice. It’s a strategic lever. The kind that rewires how teams think about margins, compliance, and who they’re building with.

Start with cost.

Per-token prices for a given level of capability keep falling, but that’s not the real issue. The real shift is behavioral: users always want the best model, even if it costs more.

As users demand stronger models, token usage skyrockets. The cost structures of AI platforms buckle under the strain. Flat-rate pricing collapses when agents run 24/7, consuming hundreds of thousands of tokens per session.
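A back-of-the-envelope calculation shows why flat-rate plans buckle. The Python sketch below uses made-up numbers (a $200/month plan, $10 per million tokens, a chat user at 5,000 tokens per session, an agent at 300,000 tokens per session running every 30 minutes); none of them are real prices from any provider.

```python
# All prices and usage figures below are hypothetical, for illustration only.
def monthly_token_cost(tokens_per_session: int, sessions_per_day: int,
                       usd_per_million_tokens: float, days: int = 30) -> float:
    """Provider-side token cost of serving one user for a month."""
    total_tokens = tokens_per_session * sessions_per_day * days
    return total_tokens / 1_000_000 * usd_per_million_tokens

def breakeven_sessions_per_day(flat_fee: float, tokens_per_session: int,
                               usd_per_million_tokens: float, days: int = 30) -> float:
    """Sessions per day at which token cost equals the flat monthly fee."""
    cost_per_session = tokens_per_session / 1_000_000 * usd_per_million_tokens
    return flat_fee / (cost_per_session * days)

FLAT_FEE = 200.0  # hypothetical monthly subscription, USD
PRICE = 10.0      # hypothetical USD per million tokens

chat_user = monthly_token_cost(5_000, 10, PRICE)     # light chat usage
agent_user = monthly_token_cost(300_000, 48, PRICE)  # agent running every 30 minutes

print(f"chat user:  ${chat_user:,.2f} vs ${FLAT_FEE:,.2f} fee")
print(f"agent user: ${agent_user:,.2f} vs ${FLAT_FEE:,.2f} fee")
print(f"agent break-even: {breakeven_sessions_per_day(FLAT_FEE, 300_000, PRICE):.1f} sessions/day")
```

Under these assumptions, the chat user costs $15 a month while the always-on agent costs $4,320, more than 20× the fee; the plan stops covering its costs at roughly two agent sessions a day.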

Anthropic, for example, has already tightened usage limits on Claude Code because some power users were running orchestrated workflows nonstop.

The result?

AI companies are forced into new business models—usage-based billing, deep enterprise hooks, or bundling AI into bigger stacks like hosting or observability.

If you're not open, you're probably subsidizing your own decline.

Closed providers offering generous plans often bleed cash to compete, while open-weight alternatives let teams self-host and manage compute on their own terms.

Then there’s governance.

You can’t audit what you can’t see. Open-weight models offer more insight than black-box APIs, but they’re still incomplete. True open-source models—the ones that expose the full pipeline—make it easier to prove fairness, trace decisions, and meet regulatory demands. Compliance moves from a performance to a capability.

And finally, talent.

The best people gravitate toward openness. They want to build, break, and improve things. A permissive license and transparent stack create not just adoption, but gravity.

Communities form. Contributions follow.

Vercel’s growth shows this: the more open they became, the faster the ecosystem expanded.

Openness compounds. Technically, yes—but strategically, even more so.

Openness turns margins, governance, and hiring into direct consequences of model choice.

The Next Frontier — Ephemeral Apps and Disposable UX

The future of software may not be something you install; it might be something spun up just for you, in the moment you need it.

AI is pushing product development toward ephemeral apps: one-off UIs, generated on demand, and discarded after use.

Instead of building static software, we’re generating tailored, temporary experiences—where a prompt becomes a product.

This shift lowers the bar to creation. No code, no boilerplate, no gatekeeping. In many cases, pitch decks are being replaced by live, interactive prototypes—because it’s faster to build the thing than explain it.

But the bigger change isn’t just who builds, it’s who consumes. As these tools evolve, they’re increasingly designed for agents, not just humans. The same interface that supports a marketer generating a landing page also supports an LLM agent automating that task in the background.

Tooling is becoming agent-native by default.

APIs are being redesigned for machines. llms.txt is an emerging proposal: a Markdown file served at a site’s root (/llms.txt) that gives LLMs and agents a concise summary of the site and links to its most useful content.
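The llms.txt proposal describes a Markdown file with an H1 title, a blockquote summary, optional free text, and H2 sections that list links as `- [name](url): notes`. Below is a minimal, non-authoritative Python parser for that shape, as an agent might use it; it ignores free-text paragraphs and other details of the proposal, and the example shop and URLs are invented.

```python
import re

# One link entry inside an H2 section: "- [title](url)" with optional ": notes".
LINK = re.compile(r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<notes>.*))?$")

def parse_llms_txt(text: str) -> dict:
    """Extract title, summary, and per-section links from llms.txt-style Markdown."""
    doc = {"title": None, "summary": None, "sections": {}}
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and doc["title"] is None:
            doc["title"] = line[2:].strip()
        elif line.startswith("> ") and doc["summary"] is None:
            doc["summary"] = line[2:].strip()
        elif line.startswith("## "):
            section = line[3:].strip()
            doc["sections"][section] = []
        elif section is not None and (m := LINK.match(line)):
            doc["sections"][section].append(m.groupdict())
        # Anything else (free text, nested lists) is ignored in this sketch.
    return doc

EXAMPLE = """\
# Example Shop
> Hypothetical store that sells widgets.

Free-text notes for agents go here.

## Docs
- [API reference](https://example.com/api.md): REST endpoints for orders
- [Pricing](https://example.com/pricing.md)

## Optional
- [Blog](https://example.com/blog.md)
"""

parsed = parse_llms_txt(EXAMPLE)
print(parsed["title"], "|", parsed["summary"])
for name, links in parsed["sections"].items():
    print(name, [link["url"] for link in links])
```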

MCP (Model Context Protocol) servers expose tools, data, and prompts to LLM applications through one standard interface, so agents can call external systems and run workflows with less hand-written glue.
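MCP messages are JSON-RPC 2.0: a client can list a server’s tools with `tools/list` and invoke one with `tools/call`, passing a tool `name` and `arguments`. The Python sketch below shows only those message shapes. It skips the protocol’s `initialize` handshake, capability negotiation, and transports, and the `count_open_bugs` tool and its data are hypothetical; a real server would be built on an official MCP SDK.

```python
import json

# Hypothetical tool registry: name -> (description, JSON Schema for arguments, handler).
TOOLS = {
    "count_open_bugs": (
        "Count open bugs for a product component",
        {
            "type": "object",
            "properties": {"component": {"type": "string"}},
            "required": ["component"],
        },
        # Toy data standing in for a real bug tracker.
        lambda args: {"login": 3, "checkout": 1}.get(args["component"], 0),
    ),
}

def _reply(req_id, *, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)

def handle(raw: str) -> str:
    """Answer one tools/list or tools/call request (no handshake, no transport)."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": d, "inputSchema": s}
                 for n, (d, s, _) in TOOLS.items()]
        return _reply(req["id"], result={"tools": tools})
    if method == "tools/call":
        if params.get("name") not in TOOLS:
            return _reply(req["id"], error={"code": -32602, "message": "Unknown tool"})
        _, _, fn = TOOLS[params["name"]]
        value = fn(params.get("arguments", {}))
        return _reply(req["id"], result={"content": [{"type": "text", "text": str(value)}],
                                         "isError": False})
    return _reply(req["id"], error={"code": -32601, "message": "Method not found"})

# A request as an agent (the MCP client side) might send it:
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "count_open_bugs", "arguments": {"component": "login"}}}
print(handle(json.dumps(request)))
```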

And with continuous monitoring, these apps don’t just run, they evolve.

AI agents watch login flows, fix bugs, generate pull requests, and optimize performance automatically.

That’s the leap: software is no longer static.

It’s generative, disposable, and always improving.

Product leadership shifts from shipping features to orchestrating agents that never stop building.

Openness Isn’t a Feature; It’s the Substrate

Openness is no longer a feature request.

It’s the foundation.

The substrate.

The layer everything else builds on.

Open models like gpt-oss aren’t just cheaper or more flexible; they’re architected for the future. They support agentic workflows. They enable reproducibility. They embed safety policies while giving developers control.

And most importantly, they can be extended, debugged, and improved by the community that uses them.

The shift to ephemeral software—apps generated per task, per user—requires composability, transparency, and speed.

Product builders can now design APIs not just for human developers, but also for LLM agents.

For example, an LLM-friendly API might return structured JSON with explicit metadata, schema definitions, and embedded instructions—rather than a human-facing HTML response or UI-bound payload.
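Here is one way such a response might look, sketched in Python. The envelope fields (`data`, `schema`, `meta`, `instructions`, `links`) and the `/orders` endpoint are illustrative assumptions, not a standard; the schema follows JSON Schema conventions so an agent can check fields and types before acting.

```python
import json
from datetime import datetime, timezone

# JSON Schema describing the payload, so an agent can validate before acting.
ORDER_SCHEMA = {
    "type": "object",
    "properties": {
        "order_id": {"type": "string"},
        "status": {"type": "string", "enum": ["pending", "shipped", "delivered"]},
        "total_cents": {"type": "integer", "description": "Order total in US cents"},
    },
    "required": ["order_id", "status", "total_cents"],
}

def order_response(order: dict) -> str:
    """Build a hypothetical agent-oriented JSON response for one order."""
    missing = [key for key in ORDER_SCHEMA["required"] if key not in order]
    if missing:
        raise ValueError(f"order is missing required fields: {missing}")
    body = {
        "data": order,
        "schema": ORDER_SCHEMA,
        "meta": {
            "resource": "order",
            "schema_version": "1",
            "generated_at": datetime.now(timezone.utc).isoformat(),
        },
        # Plain-language guidance the agent can fold into its plan.
        "instructions": (
            "If status is 'pending', poll links.self again later, "
            "at most once per minute. Never modify an order that is 'delivered'."
        ),
        "links": {"self": f"/orders/{order['order_id']}"},
    }
    return json.dumps(body, indent=2)

print(order_response({"order_id": "A1", "status": "pending", "total_cents": 1299}))
```

Keeping units, allowed values, and next steps in the payload itself means the agent does not have to infer them from documentation written for humans.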

Responses shaped like this reduce ambiguity, making it easier for agents to reason, plan, and act.

Conventions and protocols like llms.txt and MCP are emerging to serve this new class of machine-native customers.

That’s why openness isn’t an edge case. It’s the environment where innovation happens, where trust is possible, and where AI evolves fast enough to meet reality.

Everything else? It’s dial-up.
