How to Ship Reliably With Claude Code When Your Engineers Are AI Agents

A PM-friendly playbook for plan-first agentic development using subagents, guardrails, and multi-model review to turn tickets into safe pull requests.

TLDR: PMs don’t need “AI that codes”; they need a delivery protocol. This blog explains how PMs can ship reliably with Claude Code by using plan-first gates, guardrails, Claude Code subagents, and multi-model review to turn messy tickets into clean, reviewable PRs. You’ll learn how to lead the gates and quality system so Claude Code ships safely and consistently.

Why PMs Need a Delivery Protocol for Agentic Engineering

Let me start off with a scenario.

Let’s assume yesterday’s ticket is three lines long and slightly wrong. The AI agent grabs it anyway, starts coding immediately, and opens a PR that “looks” complete. Then you find that the diff is noisy, the intent is unclear, and the tests are either missing or irrelevant. Engineering does what engineering always does: they don’t trust it, they ask for a rewrite, and you spend your afternoon translating ambiguity into something reviewable.

That failure mode is not about capability. It is about leadership. As an AI PM, you do not need to be an expert coder, but you do need to be a good leader.

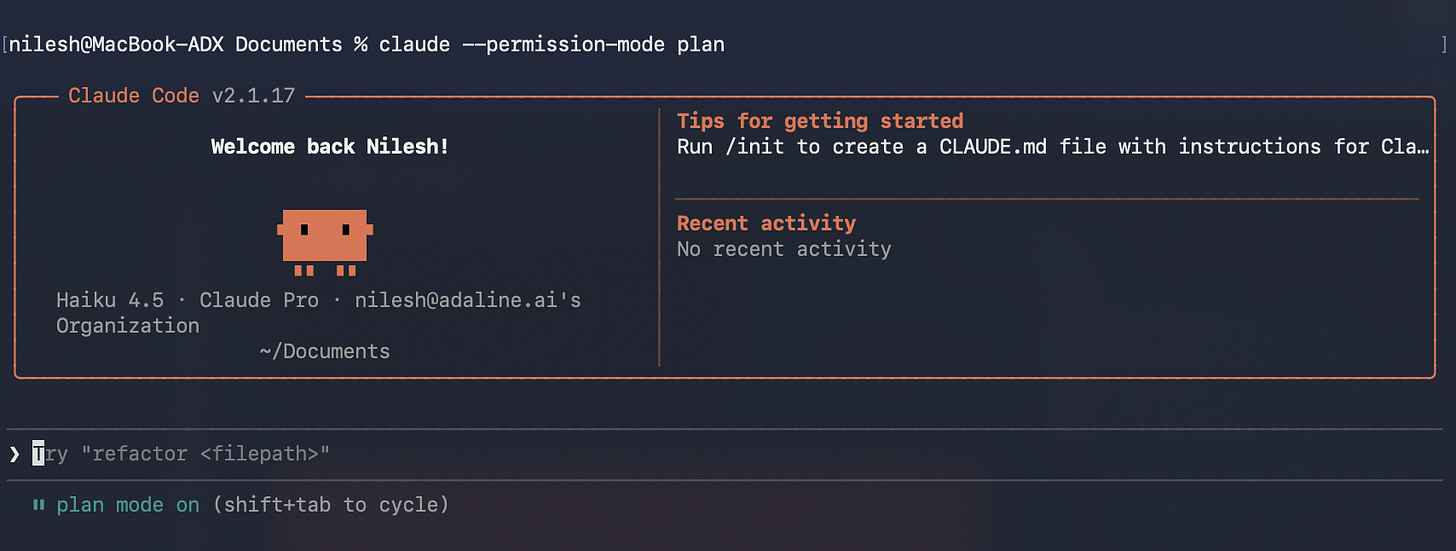

In agentic engineering, PMs are no longer just managing people’s throughput. You are managing a delivery system’s production reliability, i.e., how predictable, governable, and reviewable work is under speed. The fix is not “AI that codes.” It is the PM Build Protocol: a plan-first shipping workflow that turns ambiguous intent into structured execution. Plan Mode in Claude Code exists specifically to force safe analysis and requirement clarification before changes begin.

To start Claude Code in Plan Mode, use the following command:

claude --permission-mode plan

If you recognize these symptoms, you need a protocol, not more prompts:

PRs are large, noisy, and hard to review.

Engineers say, “This doesn’t match the ticket,” even when it compiles.

AI code review becomes a vibes debate instead of a checklist.

Reliability issues show up late because verification is not enforced.

PM time shifts from product decisions to cleanup and re-explaining intent.

The workflow is: start from the ticket, pass through a plan gate, apply guardrails, run subagent review, run multi-model review, and then open the PR.

A pull request should be opened only after the work has passed every gate, so that what reviewers see is already structured and aligned with the ticket.

In the next sections, we will operationalize each gate—how to run Plan Mode as the approval boundary, how to encode guardrails, how to use Claude Code subagents for structured review, and how to add multi-model review so humans only see clean, trustworthy diffs.

Plan Mode With Claude Code to Turn a Ticket Into an Execution-Ready Plan

Plan Mode is the first place where agentic delivery becomes governable. It is your go/no-go gate: no code changes until the model can produce an execution-ready plan that a human can review and approve. Claude Code is explicitly designed to support plan-first behavior before taking actions.

In plain PM terms, plans are the unit of work. Tickets are intent. Diffs are output.

A plan is the path that makes intent legible and output reviewable. When you treat the plan as the artifact—especially when the input is a Linear issue—you stop “AI thrash” early, and you make engineering trust possible.

Plan Output Contract

Goals and non-goals are stated in one sentence each.

Scope boundaries that define what will not be changed.

Files or components likely to be touched and why.

Assumptions and open questions, labeled as blocking vs non-blocking.

Acceptance criteria rewritten as checkboxes that the PR must satisfy.

Test approach mapping each acceptance criterion to a test or verification step.

Rollout and rollback plan, including flags, monitoring, and safe failure behavior.
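The contract above can also be checked mechanically before a human approves the plan. The following is a minimal sketch, assuming the plan has already been captured as structured data; the `Plan` and `AcceptanceCriterion` classes and the `plan_gate` function are hypothetical names for illustration, not part of Claude Code.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceCriterion:
    text: str
    verification: str = ""  # test or manual check that proves this criterion


@dataclass
class Plan:
    goal: str
    non_goal: str
    out_of_scope: list[str]
    touched_components: dict[str, str]  # component -> reason it changes
    blocking_questions: list[str]
    non_blocking_questions: list[str]
    acceptance_criteria: list[AcceptanceCriterion]
    rollout: str
    rollback: str


def plan_gate(plan: Plan) -> list[str]:
    """Return the reasons the plan is not ready; an empty list means 'go'."""
    problems = []
    if not plan.goal.strip() or not plan.non_goal.strip():
        problems.append("goal and non-goal must both be stated")
    if not plan.out_of_scope:
        problems.append("scope boundaries are missing")
    if not plan.touched_components:
        problems.append("no files or components named")
    if plan.blocking_questions:
        problems.append(f"{len(plan.blocking_questions)} blocking question(s) open")
    if not plan.acceptance_criteria:
        problems.append("no acceptance criteria")
    for ac in plan.acceptance_criteria:
        if not ac.verification.strip():
            problems.append(f"no test or verification for: {ac.text}")
    if not plan.rollout.strip() or not plan.rollback.strip():
        problems.append("rollout and rollback must both be described")
    return problems
```

A plan with an open blocking question, or an acceptance criterion with no verification step, comes back with a non-empty list, which is the no-go signal. Non-blocking questions are recorded but deliberately do not stop the gate.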

Copy/paste prompt box:

You are in Plan Mode. Do not modify code.

Use the Plan Output Contract format exactly (7 bullets).

Input: <paste Linear ticket + any constraints>.

Ask only blocking questions; if none, proceed to the plan.

Name files/components you expect to touch and why.

List tests and rollout/rollback steps tied to acceptance criteria.

For any Claude Code feature/workflow claim, cite an official Anthropic/Claude Code source.

Output: An execution-ready plan that can be approved like a spec, then handed to the agent to implement with guardrails.

Guardrails That Make AI Coding Reliable in Production

Guardrails are how you convert agent autonomy into production reliability. In practice, guardrails are concrete constraints: permissions, scoped access, allowed tools/commands, data-handling boundaries, and mandatory checks that must pass before work is considered done. I like Anthropic’s best practices for agentic coding; they are worth checking out.

Guardrails Ladder

Tier 1: Read-only and analysis.

Agent can inspect, explain, and plan, but not write files or run risky commands. I saw this issue about Claude Code (CC) on GitHub: essentially, CC ignored all instructions and “…modify files that should be blocked.”

Tier 2: Controlled changes in scoped directories.

Agent can edit only within approved paths and use a pre-approved tool set, with prompts for anything outside the allowlist.

Tier 3: PR-ready changes with enforced checks.

Agent can produce a PR candidate only after automated checks run via hooks and the workflow produces evidence (tests, lint, and a clear diff narrative).

Non-negotiables:

Secrets are never committed; keys and tokens must be handled via environment variables or a secrets manager, not files in the repo.

Directory boundaries are explicit; sensitive paths are disallowed, and the agent’s working scope is narrowed to the minimum viable surface.

Safe commands are pre-approved through Claude Code’s permissions system and shared via project settings to standardize behavior.

Tests and lint are mandatory; hooks should run checks automatically and fail fast when standards are not met.

Logging discipline is enforced; hooks can record tool activity so reviews have an audit trail of what ran and why.

Rollback is expected; every change carries a safe failure path, whether that is a flag, a revert strategy, or a limited rollout plan.

Engineers trust diffs that are bounded and verifiable. Guardrails make the PR smaller, the intent clearer, and the failure modes testable—so review becomes a checklist, not a debate.
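To make the directory-boundary rule concrete, here is a minimal sketch of a scope check that could run in a pre-commit hook or CI step. It is an illustration under stated assumptions: the function name `scope_violations`, the allowed directories, and the denied patterns are all hypothetical, and it does not use or replace Claude Code’s own permissions system.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def _is_under(path: PurePosixPath, directory: str) -> bool:
    """True when path sits inside directory (compared part by part)."""
    dir_parts = PurePosixPath(directory).parts
    return path.parts[: len(dir_parts)] == dir_parts


def scope_violations(changed_paths, allowed_dirs, denied_patterns):
    """Return (path, reason) pairs for every changed file that breaks scope.

    changed_paths:   repo-relative paths touched by the agent (assumed input)
    allowed_dirs:    directories the agent may edit (hypothetical allowlist)
    denied_patterns: fnmatch-style patterns that are never editable
    """
    violations = []
    for raw in changed_paths:
        path = PurePosixPath(raw)
        # Reject anything that could point outside the repository.
        if path.is_absolute() or ".." in path.parts:
            violations.append((raw, "path escapes the repository"))
            continue
        # Denied patterns win even inside an approved directory.
        if any(fnmatchcase(str(path), pattern) for pattern in denied_patterns):
            violations.append((raw, "matches a denied pattern"))
            continue
        if not any(_is_under(path, d) for d in allowed_dirs):
            violations.append((raw, "outside approved directories"))
    return violations
```

The deny list is checked before the allowlist on purpose, so a secrets file cannot slip through just because it lives in an approved directory. An empty result means the diff stayed inside its bounds.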

Opt for a reusable Guardrails Ladder that your team can adopt to standardize autonomy without sacrificing compliance or speed.

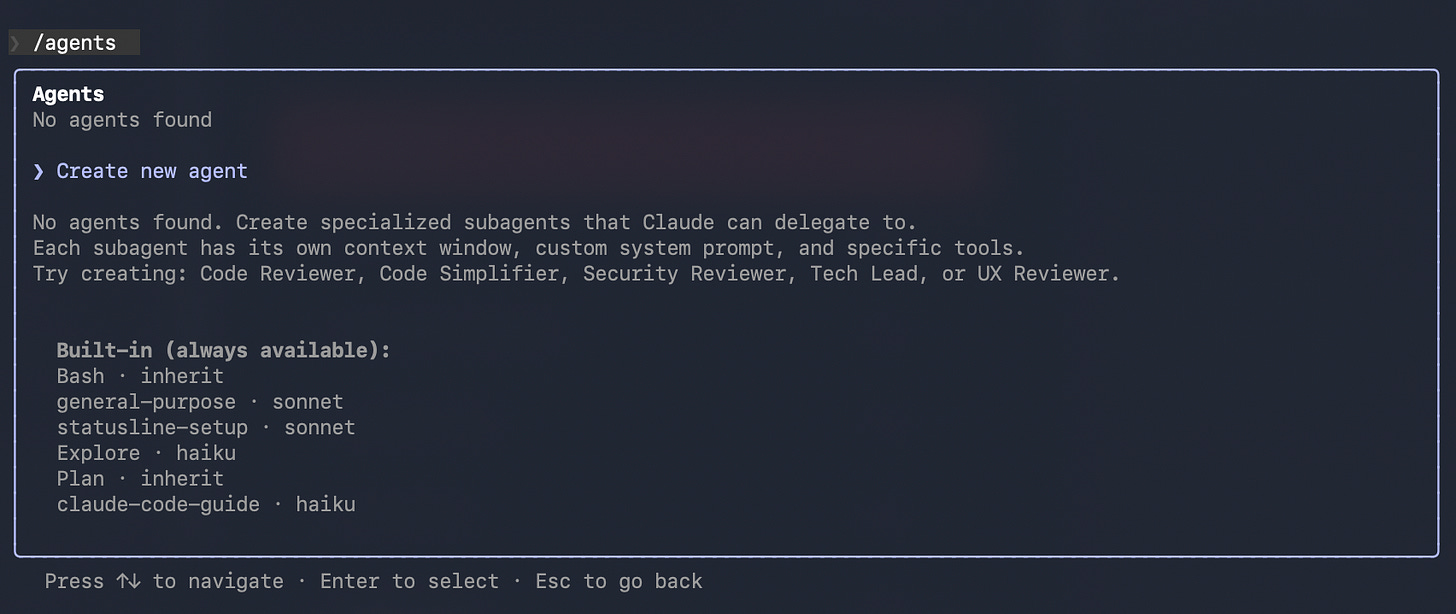

Subagents as Your Review Team for Spec Checks, Risk Discovery, and Test Design

A single general agent is good at momentum. It is bad at critique. When one model both proposes the approach and judges it, you get confident blind spots.

Claude Code subagents let you split “doing” and “reviewing” into specialized roles with narrow mandates, so critique becomes structured and repeatable instead of conversational. PMs can treat this like an AI review org chart: small teams, clear responsibilities, crisp outputs.

To create a subagent in Claude Code, use the “/agents” command.

Subagent Org Chart

Spec-to-AC (Acceptance Criteria) checker.

Mission: Verify the plan or diff satisfies every acceptance criterion and does not expand scope.

Output: A checklist of ACs marked Pass/Fail with one-line evidence per item.

Prompt snippet:

Compare this plan/diff to the ticket acceptance criteria.

Mark each AC Pass/Fail and cite the exact file/line or plan step.

List any scope creep as bullets.

Risk and edge-case hunter.

Mission: Surface failure modes, regressions, and operational risks before humans review.

Output: Top 5 risks with severity and the test or guardrail that would catch each.

Prompt snippet:

Enumerate edge cases and regression risks from this change.

Rank by severity and likelihood.

Propose one test per risk.

Test designer.

Mission: Translate acceptance criteria into a minimal test plan that proves behavior.

Output: A test matrix mapping each AC to a test type and a target location.

Prompt snippet:

For each AC, propose the smallest test that would fail before this change.

Name the test type and likely file location.

Flag any gaps where behavior is untestable.

Security and privacy reviewer.

Mission: Identify risky data handling, injection surfaces, secrets exposure, and unsafe logging.

Output: Findings grouped by category with recommended mitigations.

Prompt snippet:

Scan for data ingress/egress, auth, secrets, and logging changes.

List issues by category and severity.

Suggest the minimal mitigation per issue.

Sequencing note: run subagents on the plan first (before execution), then rerun on the diff before PR creation to reduce noisy iterations and human review load.
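Once the subagents return structured output, their findings can be merged into a single go/no-go decision. The sketch below assumes the checker and risk-hunter outputs have already been parsed into simple records; `ACResult`, `Risk`, and `ready_for_pr` are hypothetical names, and the rule that only high-severity risks block is an assumption a team may want to tighten.

```python
from dataclasses import dataclass


@dataclass
class ACResult:
    criterion: str
    passed: bool
    evidence: str  # file/line or plan step cited by the Spec-to-AC checker


@dataclass
class Risk:
    description: str
    severity: str  # "high", "medium", or "low"
    covering_test: str = ""  # test proposed by the risk hunter, if any


def ready_for_pr(ac_results, risks, scope_creep):
    """Return the blockers found by the subagents; an empty list means proceed."""
    blockers = []
    for result in ac_results:
        if not result.passed:
            blockers.append(f"AC failed: {result.criterion}")
        elif not result.evidence.strip():
            blockers.append(f"AC passed without evidence: {result.criterion}")
    for risk in risks:
        # Assumption: only high-severity risks without a test block the PR.
        if risk.severity == "high" and not risk.covering_test.strip():
            blockers.append(f"high-severity risk has no test: {risk.description}")
    blockers.extend(f"scope creep: {item}" for item in scope_creep)
    return blockers
```

The same function can be run twice, once on the plan review and once on the diff review, which matches the sequencing described above.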

Output: A copyable Subagent Org Chart that turns “AI review” into an internal review pipeline your engineers can trust.

Multi-Model Review to Catch Logic Gaps and Regressions Before Human Review

Multi-model review is a practical QA layer, not a philosophical stance. Different models carry different blind spots, so cross-model critique is a cheap way to catch logic gaps and regressions before a human ever opens the diff.

To make this repeatable, you do not “ask for a review.” You assemble a packet that reviewers can audit quickly, and you keep it consistent across PRs. In other words, you put together the same set of review details and criteria every time, so reviewers can check it quickly and know what to expect.

Claude Code is well-suited to generating this packet because it operates with direct repo context and workflows rather than detached chat snippets.

Check out this podcast from Lenny Rachitsky, where he and Zevi Arnovitz talk a great deal about how to use Claude Code and how he uses it to review code.

Below are examples of what to include in a review packet:

Plan summary.

Acceptance criteria.

Diff summary.

Test results.

Edge-case list.

Rollout/rollback.
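Keeping the packet identical across PRs is easier when it is generated rather than typed. This is a minimal sketch, assuming each section’s text is already available as a string; `PACKET_SECTIONS` mirrors the list above, while `render_packet` and its checkbox layout are hypothetical choices.

```python
PACKET_SECTIONS = [
    "Plan summary",
    "Acceptance criteria",
    "Diff summary",
    "Test results",
    "Edge-case list",
    "Rollout/rollback",
]


def render_packet(contents: dict[str, str]) -> str:
    """Render the review packet as a checklist, refusing incomplete packets."""
    missing = [s for s in PACKET_SECTIONS if not contents.get(s, "").strip()]
    if missing:
        raise ValueError("packet incomplete, missing: " + ", ".join(missing))
    lines = []
    for section in PACKET_SECTIONS:
        lines.append(f"- [ ] {section}")
        # Indent the section body under its checkbox, one row per line.
        for row in contents[section].strip().splitlines():
            lines.append(f"      {row.strip()}")
    return "\n".join(lines)
```

Raising on a missing section is a deliberate design choice: an incomplete packet never reaches a reviewer, whether that reviewer is a second model or a human.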

Here are examples of reviewer questions:

Does the change align with every acceptance criterion without scope creep?

Is the core logic correct under normal and edge-case paths?

Is error handling explicit, safe, and consistent with existing patterns?

Are there any security or privacy risks in data handling, secrets, or logging?

Are there performance footguns such as N+1 calls, expensive loops, or unbounded retries?

Are tests adequate, minimal, and clearly mapped to acceptance criteria?

Is rollback safe, fast, and realistic under incident pressure?

When reviewers disagree, the rule is simple: tests plus spec win. If the packet shows AC alignment and passing tests, prefer the path that preserves correctness and rollback safety. If risk is high or the change touches sensitive surfaces, escalate to a human reviewer immediately and narrow the scope rather than debating model opinions.
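The tie-break rule can be written down so it is applied the same way every time. The sketch below is one possible encoding, not a definitive policy: the `Decision` enum and `resolve` function are hypothetical, and the `REVISE` outcome for unmet criteria or failing tests is an assumption added to complete the rule.

```python
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    REVISE = "revise"
    ESCALATE = "escalate to a human reviewer"


def resolve(all_acs_met: bool, tests_pass: bool,
            high_risk: bool, touches_sensitive_surface: bool) -> Decision:
    """Settle a reviewer disagreement: tests plus spec win, risk escalates."""
    # Risk is checked first so it cannot be outvoted by green tests.
    if high_risk or touches_sensitive_surface:
        return Decision.ESCALATE
    if all_acs_met and tests_pass:
        return Decision.ACCEPT
    # Assumption: anything short of spec plus passing tests goes back for rework.
    return Decision.REVISE
```

Note that model opinions do not appear as inputs at all. Only the packet’s evidence does, which is the point of the rule.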

Output: A paste-ready Review Packet checklist you can drop into your PR template to make AI code review faster, safer, and more predictable for production reliability.

Conclusion

Start with the ticket, pass it through a plan gate, apply guardrails, run subagent review, run multi-model review, and then open the PR.

This is what reliable agentic engineering looks like in practice: not more output, but more control. PMs lead the gates and the quality system. You own the plan gate that converts ambiguity into an execution-ready spec. You define guardrails that bound autonomy into safe, verifiable changes. You design the Claude Code subagent reviewers so critique is structured and repeatable. You run a lightweight multi-model audit so humans see clean diffs, not surprises.

Tomorrow:

Pick one small ticket with clear acceptance criteria.

Run plan-first, apply your guardrails, then run subagent review and multi-model review before the PR.

Measure one outcome such as review time, rework cycles, or regression risk.

Protocol is better than heroics.

This article comes at the perfect time, and your incisive focus on a PM Build Protocol for agentic engineering is truly brilliant for navigating the current landscape. While the leadership aspect is paramount, I also wonder if exploring more formal specification methods or even enhanced DSLs could further refine the ambiguity translation process, moving beyond just clear requirements into intrinsically structured execution definitions.

Really appreciate the plan gate framing here. The idea that plans are the unit of work and not just tickets or diffs is something I've struggled to articulate at my org. We had major headaches with agents just running ahead without alignment checks, and adding the plan review step as a mandatory gate fixed most of it. The subagent review org chart is clever too; splitting critique from execution solves the confident blind spots issue.