The 5 Levels of Agentic AI

From Basic Automation to Autonomous Intelligence in 2025

Agentic workflows, such as Claude Code, have changed the way we build products. They allow direct access to local tools, frameworks, and information.

Similarly, coding agents like “Codex” and “MCP” have made prototyping faster.

Also, agentic research tools help by automating survey distribution. They also analyze sentiment patterns and turn feedback into useful insights. Teams used to spend weeks gathering and analyzing user data. Now, autonomous agents can finish detailed market analysis in just hours. This compression enables product development to shift from quarterly planning cycles to continuous iteration.

When we consider these innovations, we can categorize them into levels 3 and 4 of the agentic behaviour. Level 3 systems address our current automation needs. Level 4 systems enable sophisticated multi-agent workflows.

Knowing these differences helps avoid overthinking simple issues. It also ensures we have the necessary ability to meet more complex needs. In this article, I will provide the required details about the five different levels of Agentic AI.

Let’s get started.

Understanding Agentic AI Architecture and Core Components

Agentic AI marks a big change. It moves from passive language models to active systems that chase goals. Agentic AI systems operate in a manner distinct from traditional chatbots. They don’t just reply to prompts. Instead, they use continuous feedback loops to improve their responses.

What Makes AI "Agentic"?

The core distinction lies in the sense-think-act cycle. Traditional AI systems follow simple input-output patterns. Agentic systems maintain a persistent state across interactions. They recall past actions and test results. Then, they change their future behavior based on those experiences.

These systems exhibit three key characteristics.

Autonomy enables minimal human intervention after deployment.

Task specificity allows focus on well-defined domains like customer support or research assistance.

Reactivity provides real-time responses to environmental changes or user requests.

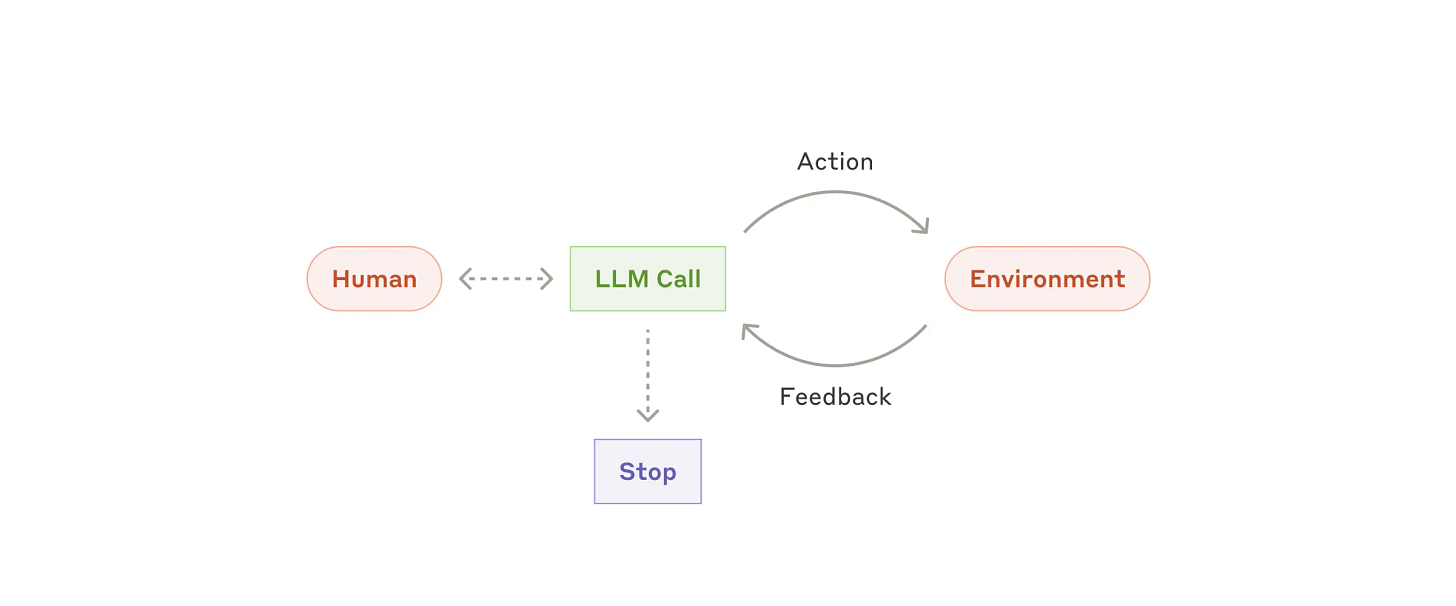

Essential Components: The Perception-Planning-Action Loop

Modern agentic architectures build on three foundational modules.

The perception module ingests inputs from users, APIs, or external systems. It preprocesses natural language prompts, file uploads, and sensor data into structured formats.

The reasoning module serves as the system’s intelligence center. The most common reasoning systems are LLMs. LLMs employ chain-of-thought reasoning and scaled reinforcement learning to break complex tasks into manageable subtasks. This enables step-by-step problem decomposition.

Action execution translates decisions into real-world effects. The module interfaces with external tools through structured APIs. Examples include database queries, web searches, or message sending.

Tool Calling and Function Execution

Tool integration connects AI reasoning with practical capabilities. LLMs define available functions using JSON schemas or a structured format. These schemas specify input parameters, expected outputs, and usage constraints.

Function calling follows explicit patterns. The LLM outputs commands that are formatted specifically to indicate tool invocation. The orchestration layer parses these commands and executes the corresponding functions. Results feed back into the reasoning loop for continued processing.

This architecture enables sophisticated workflows. An agent can search a knowledge base. It analyzes the documents it finds and automatically creates summaries. Each step relies on earlier results. This follows the ReAct way of reasoning and acting one after the other.

Level 1 & 2: From Rule-Based Automation to ML-Assisted Intelligence

Level 1: Basic Responder Systems | The Foundation of Automation

Level 1 agents represent the simplest form of AI automation levels. These systems execute predetermined scripts for specific inputs. They operate as enhanced versions of traditional automation tools with minimal intelligence.

Legacy chatbots exemplify Level 1 capabilities. They detect specific keywords and trigger pre-written responses. Simple reflex agents follow if-then rules without maintaining state information. Password reset systems and basic customer service bots operate at this level.

These systems offer clear advantages. Predictability ensures consistent outputs across interactions. Implementation costs remain low with straightforward development requirements. Computational efficiency makes them suitable for high-volume, low-complexity tasks.

However, limitations become apparent quickly. Rule-based systems cannot handle variations beyond programmed scenarios. They struggle with ambiguous inputs or unexpected user requests. Scalability issues emerge as rule complexity grows exponentially.

Level 2: Router and Co-Pilot AI | Statistical ML Augmentation

Level 2 systems introduce statistical machine learning for enhanced decision-making. They augment basic automation with probabilistic reasoning and pattern recognition capabilities. Microsoft Copilot and similar router systems exemplify this advancement.

ML-assisted automation enables smarter routing decisions. Customer support systems can classify incoming tickets and direct them appropriately. These systems learn from historical data to improve classification accuracy over time.

Co-pilot interfaces provide intelligent suggestions without full autonomy. They assist human operators by offering recommendations based on learned patterns. Document drafting tools and scheduling assistants operate in this collaborative mode.

The user maintains control as a collaborator rather than mere operator. Systems can transfer control between agent and human seamlessly. Shared progress representation allows both parties to understand current task status.

Real-World Applications and Current Market Adoption

Enterprise adoption focuses heavily on Level 1 and 2 systems due to manageable implementation costs. Telecommunications companies report 70% query automation rates with Level 1 chatbots.

Healthcare applications demonstrate rapid expansion. Clinical decision support systems improve diagnostic accuracy while significantly reducing diagnosis time. Administrative automation streamlines documentation and billing processes significantly.

Security vulnerabilities and context limitations pose key risks. Simple systems lack sophisticated authentication mechanisms. ML-assisted systems struggle with adversarial inputs and edge cases requiring human judgment.

Level 3: The Current Frontier | Partial Autonomy with LLM Planning

Level 3 agents represent the dominant paradigm in 2025's autonomous AI tools landscape. These systems use LLMs and intelligent orchestration frameworks. This allows them to run multi-step workflows on their own, without needing human help. They keep a steady context and change their method based on ongoing results.

Tool-Using Agents: How LLMs Enable Multi-Step Autonomous Workflows

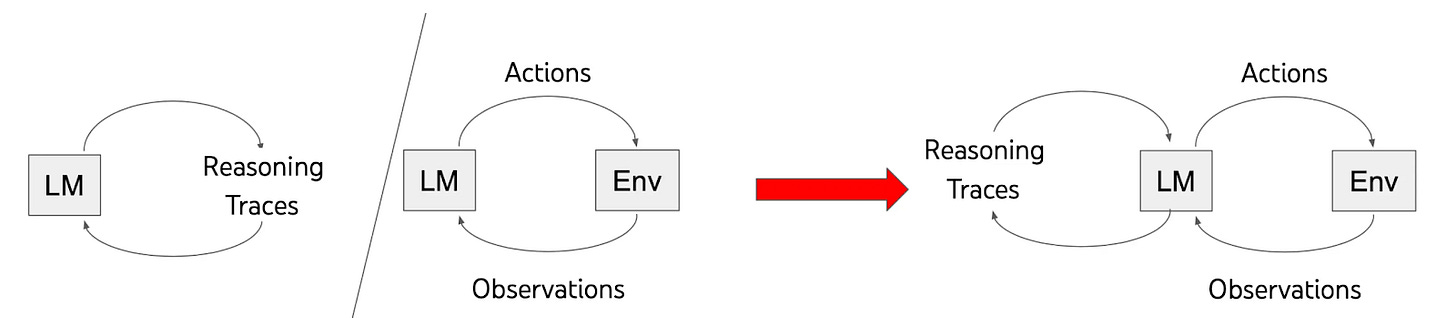

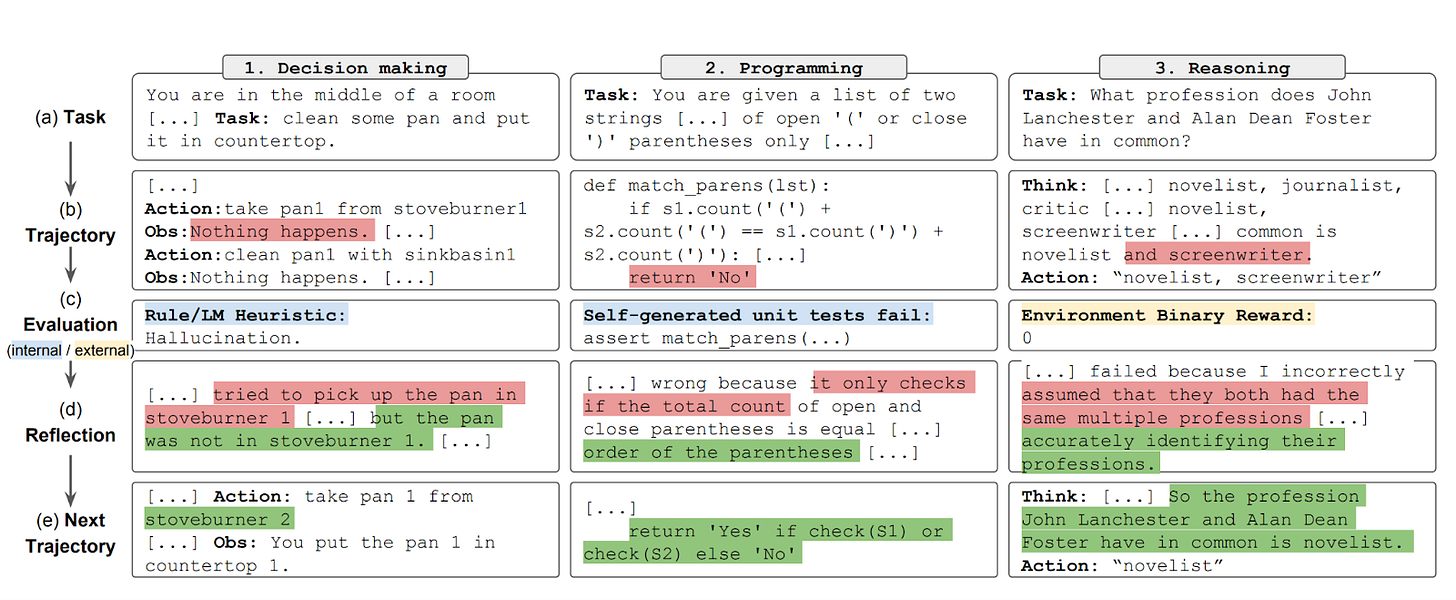

The architecture is based on the ReAct paradigm. Here, agents combine reasoning and action in structured loops.

AutoGPT and BabyAGI exemplify this evolution, using GPT-4 as both planner and executor. The agent sets big goals. Then, it breaks them into smaller tasks. It also utilizes external APIs and continually tracks progress.

Tool invocation follows standardized patterns using JSON schemas and function signatures. When agents find needs outside their knowledge, they create structured function calls. These calls go through orchestration layers. These calls use different services. They include web search, code execution, database queries, and specialized APIs.

Current implementations show remarkable sophistication. Microsoft's AutoGen framework allows structured interactions between agents. It uses set roles and teamwork in chat logic. LangChain provides comprehensive toolkits for single and multi-tool agents with memory persistence. These frameworks turn LLMs from static predictors into interactive problem-solvers. They can now reason dynamically over intermediate states.

Vector Databases and RAG: Grounding AI Agents in Factual Knowledge

Retrieval-augmented generation forms the reliability backbone of Level 3 systems. Vector databases like FAISS and Pinecone help find semantic similarities in big knowledge bases. This method solves issues like hallucinations, knowledge cutoffs, and context window limits in language models.

The RAG workflow has three phases:

Data preprocessing

Query processing

Generation conditioning

Documents and structured data turn into vector embeddings. This happens with models like OpenAI's text-embedding-ada-002. User queries undergo similar embedding, retrieving semantically relevant content. Finally, generation conditioning combines retrieved information with LLM outputs to produce grounded responses.

Enterprise implementations demonstrate substantial impact. This technology is great for products that need accurate facts and current information. It works well in customer support and enterprise search apps.

Memory architectures extend RAG through episodic, semantic, and vector-based persistence. These systems help agents remember past interactions. They keep things consistent across sessions and change behavior based on what they’ve learned.

Production Deployments: Code Interpreter, MCP, and Enterprise Solutions

2025 marks Level 3 systems' transition from experimentation to operational deployment. Deloitte predicts that 25% of companies using generative AI will begin agentic pilots in 2025. This is expected to rise to 50% by 2027. ChatGPT Code Interpreter shows advanced tool use. It runs Python scripts, analyzes data, and creates visualizations on its own.

OpenAI's recent Codex release demonstrates enterprise-ready orchestration. This cloud-based software agent manages several tasks at once. It handles feature development, bug fixes, and creates pull requests. Each task runs in separate sandbox environments. This shows the strong isolation needed for enterprise deployment.

Enterprise adoption reflects growing sophistication in model deployment. They match specific models to certain use cases instead of using general-purpose solutions. This specialization enables optimized performance across diverse operational requirements.

Not to forget, Anthropic’s Model Context Protocol (MCP) that allows users to locally connect to various tools and internal databases. This shows that Level 3 systems are gaining recognition. They are valuable for automating knowledge work. They can change the industry.

Level 4: High Automation with Self-Improvement and Multi-Agent Systems

Level 4 features multi-agent AI systems. These systems operate independently and coordinate specialized sub-agents. They show advanced self-improvement through reflection and shared learning. Unlike earlier levels, they handle open-ended tasks with little human help. They mainly need intervention for key approvals.

Reflective Multi-Agent Architectures: Coordination and Self-Learning

Advanced frameworks allow specialized agents to work together through clear communication. MetaGPT and ChatDev illustrate this by modeling agents like corporate departments. Agents take on roles such as CEO, engineer, or reviewer. Each agent has unique skills and communicates via message queues and shared memory.

The architecture includes reflexive mechanisms for self-criticism and improvement. After tasks, agents check their results. This secondary reasoning boosts accuracy and cuts down errors. A legal assistant checks drafted clauses against past case laws before submission. Verifier agents audit summaries for precision.

Memory designs evolve in Level 4 systems.

Episodic memory helps agents remember past actions and feedback.

Semantic memory stores structured knowledge for fact-checking.

Vector-based memory supports similarity-based retrieval for personalization and decision-making.

Distributed state management allows each agent to keep local memory and use shared global memory for coordination.

Learning methods expand beyond individual agents to improve the system as a whole. Agents use multi-agent reinforcement learning to share real-time experiences. This way, they avoid learning the same policies repeatedly. This teamwork speeds up overall intelligence while keeping agent specialization intact.

Computer-Using Agents (CUA): AI That Operates Software Like Humans

Level 4 also introduces computer-using agents that directly interact with graphical user interfaces. This universal interface allows agents to navigate any software meant for humans. This flexibility meets the various digital needs that traditional API systems can’t manage.

CUA technology uses smart vision to solve problems. It helps understand buttons, menus, and text fields. Agents break tasks into multi-step plans and self-correct when faced with challenges. This feature removes the need for custom integrations with each software platform.

Implementation involves training agents to recognize GUI elements accurately. It also ensures safety by detecting prompt injections and monitoring content. These systems work in isolated environments. This keeps unauthorized users out. They can still use popular business apps. These include spreadsheets, browsers, and communication tools.

Current systems are promising. They can automate complex tasks that previously required human assistance. Customer service agents utilize various software systems to address inquiries. Meanwhile, administrative agents handle tasks across various apps without needing API access.

OpenAI Operator and the Push Toward Greater Autonomy

OpenAI’s Operator represents a significant advancement in Level 4 capabilities. It uses Computer-Using Agent technology. It uses GPT-4o’s vision and reinforcement learning to explore websites. It can fill out forms and finish online tasks with greater independence. Still, it keeps human oversight for key decisions.

Operators highlight Level 4 by requesting user input, particularly during failures or risky times. Users can set approval conditions. This allows for flexible autonomy, depending on task importance and organizational risk.

Safety measures involve careful navigation. They use prompt injection detection and monitoring systems. These systems pause tasks if they spot suspicious content. Detection pipelines blend automated analysis with human review. These safeguards address risks linked to autonomous web interactions while keeping operations effective.

Level 5 and Beyond: The Path to Fully Autonomous Artificial General Intelligence (AGI)

Level 5 marks the peak of AGI autonomous agents. These systems would operate independently like human experts in any field. They would set their own goals and solve new problems. Also, show creativity, self-awareness, and emotional intelligence. In this case, users would just watch. They could only turn off the system in emergencies.

Defining Full Autonomy: Complete Independence in Open-World Contexts

True Level 5 autonomy goes far beyond what we have now. These agents would understand situations rather than just recognize patterns. This understanding would lead to creative problem-solving in any area. They would notice their thoughts and limits. This helps them grow by reflecting and learning from experiences.

Operating in open-world situations means dealing with unexpected events without human help. Level 5 agents would need strong causal reasoning skills to grasp cause-and-effect relationships. This skill would allow them to adapt safely to new situations. They need value alignment systems. This way, their goals can stay in line with human welfare as they change.

Technical needs include advanced memory architectures that cover episodic, semantic, and procedural knowledge. Managing emergent behavior is important. These systems can create unpredictable strategies while learning. Multi-modal understanding lets them blend text, visual, auditory, and maybe tactile information easily.

Current AGI benchmarks like ARC-AGI test generalization skills vital for Level 5 systems. The benchmark shows a success rate of just 75.7%. This is surprising given the large amount of research funding. In contrast, human performance stands at 98%, indicating a significant gap to close.

Current Limitations and the Reality Check for 2025

In 2025, we face major hurdles to achieving Level 5. Current systems struggle with causal understanding, often confusing correlation with causation. LLM-based agents often reflect biases from their training data. They may struggle in new situations.

Computational limits create immediate challenges. Every decision cycle for an agent needs several LLM calls. This causes latency and resource problems. Static knowledge cutoffs hinder dynamic information updates without specific retrieval methods. These factors confine current systems to narrow operational areas.

Key technical gaps include:

Lack of true understanding versus advanced pattern matching

Limited long-term planning and recovery skills

Surface-level semantic comprehension despite structured reasoning

Inability to learn new concepts without extensive retraining

Prompt sensitivity causing unpredictable behaviors

Research tends to focus on small improvements rather than major breakthroughs. Most systems today work at Level 3 or early Level 4. They need a lot of human oversight to function reliably.

Conclusion

The five levels of agentic AI create a roadmap for intelligent automation. Level 3 systems dominate current production environments. They offer reliable autonomous planning with human oversight.

Level 4 introduces multi-agent coordination and self-improvement. And, level 5 remains theoretical, requiring major safety breakthroughs.

Strategic Implementation for Product Leaders

Level 3 systems excel at automating research workflows. Deploy these agents for market research, survey analysis, and content generation. They reduce research cycles from weeks to hours.

Level 4 systems enable complex workflows through multi-agent teams. Research agents gather user insights. Design agents prototype interfaces. Testing agents validate functionality. This coordination accelerates iteration and improves team alignment.

Key Success Factors

Start with Level 3 for immediate productivity gains. Build expertise before advancing to Level 4. Focus on specific use cases rather than broad automation. Monitor performance continuously. Maintain human oversight for critical decisions.

Product leaders who understand these levels can automate workflows strategically. They accelerate development cycles. They deliver superior user experiences through intelligent agent deployment.

A fascinating framework that really captures the evolution of Agentic AI maturity. Moving from reactive automation to proactive, collaborative AI represents a huge leap for organizations. At Wavity, we’re focused on enabling Level 4 and 5 capabilities — where AI not only acts but collaborates and learns continuously across IT ecosystems.

A fascinating framework that really captures the evolution of Agentic AI maturity. Moving from reactive automation to proactive, collaborative AI represents a huge leap for organizations. At Wavity, we’re focused on enabling Level 4 and 5 capabilities — where AI not only acts but collaborates and learns continuously across IT ecosystems.