What is Test-time Scaling?

A Note on Test-time or Inference Scaling for Reasoning Models.

Foundations of Inference Scaling

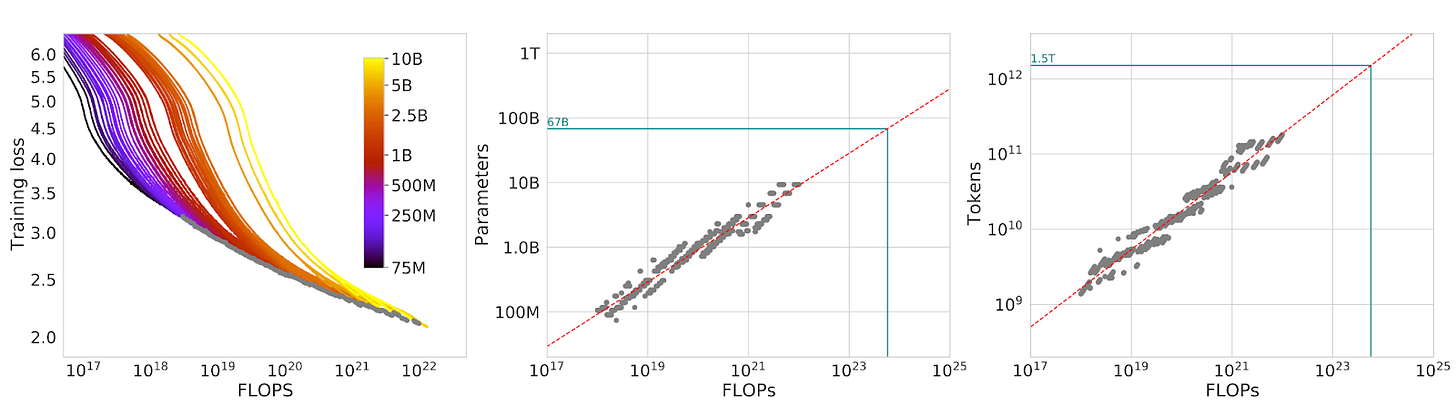

The scaling law from 2020 and 2022 has led to the development of LLMs that can extract patterns and understand large datasets quite effectively. Scaling laws have also shown that the training loss reduces when more training compute is provided to large models. As a result, the LLMs have shown emerging behaviour on tasks that they were not trained on.

Based on our estimated compute-optimal frontier, we predict that for the compute budget used to train Gopher, an optimal model should be 4 times smaller, while being training on 4 times more tokens. … The energy cost of a large language model is amortized through its usage for inference an fine-tuning. The benefits of a more optimally trained smaller model, therefore, extend beyond the immediate benefits of its improved performance. – Training Compute-Optimal Large Language Models

However, until mid-2024, the AI labs focused primarily on scaling the training phase by increasing compute resources and implementing parallelization. Although the models were becoming more intelligent, they were not able to perform quite well on downstream reasoning tasks and evaluations.

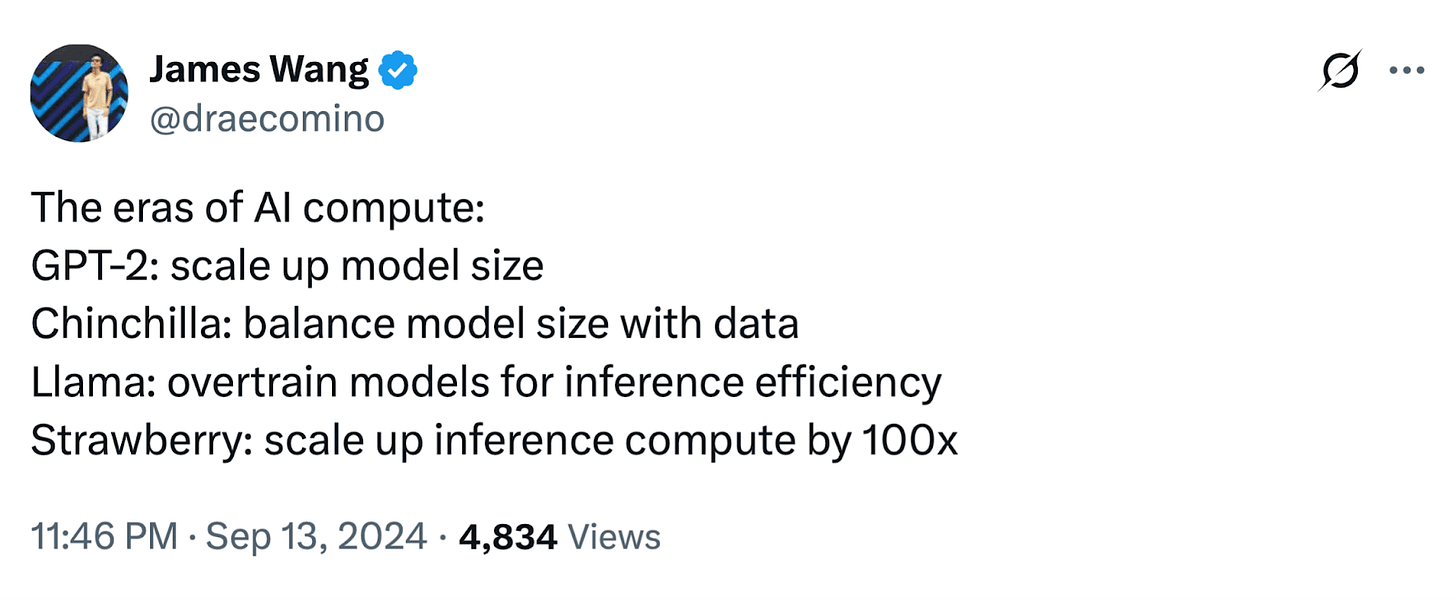

James Wang the Director of Product Marketing @CerebrasSystems tweeted the timeline of AI compute. It is no surprise that AI labs focused more on scaling the training phase. And for inference, mostly techniques like quantization were employed. Quantization reduces the memory consumption but often leads to a potential loss in the model’s performance, especially on reasoning tasks.

To improve the downstream performance of LLMs (on reasoning tasks), there has to be enough exploration space for the LLM to work and find better answers. When the exploration space itself is constrained, the LLM cannot find relevant answers, leading to suboptimal performance. Keeping this in mind, researcher started working on search-based techniques that the LLMs could use during inference to enhance their performance. This is known as inference search or test-time search.

Inference scaling, also known as test-time scaling by definition, is a process of allocating GPUs (computational resources) during the model’s runtime or deployment to enhance its output performance. The purpose is to increase the accuracy and reasoning quality of the LLMs.

Inference = Test-time

Inference scaling refers to techniques that optimize LLM performance during deployment.

For instance, Monte Carlo Tree Search, best-of-n, and majority voting are some of the search-based techniques. However, this would require more computation (measured in FLOPs) during inference, which would again increase the infrastructure cost and budget. The increase in computational usage by LLMs is known as test-time compute.

As such, you will see that the tasks themselves are divided into two categories:

General tasks that require no reasoning, therefore fewer GPUs or compute. This would include tasks such as text summarization, translation, and creative writing, among others. These tasks are straightforward and require little to no thinking.

Reasoning tasks require extensive reasoning or thinking, and more compute. This would include tasks such as advanced mathematics or providing a PhD-level solution, multimodal reasoning, etc. These tasks require a lot of thinking before answering.

In 2025, AI labs began following this paradigm of task-based large language model (LLM) development, where they are developing models for general-purpose and reasoning tasks. OpenAI is providing GPT-4 and GPT-4.5 for general-purpose tasks, and o1, o3, and o4 for reasoning tasks. Similarly, Google is providing Gemini Flash for general-purpose tasks and Gemini Pro for reasoning tasks.

In this article, we will be focusing on inference scaling or test-time scaling for reasoning models like the ‘o’ series from OpenAI and Gemini Pro from Google DeepMind. We will cover the fundamentals and learn how inference scaling offers better reasoning.

Why Inference Scaling Matters?

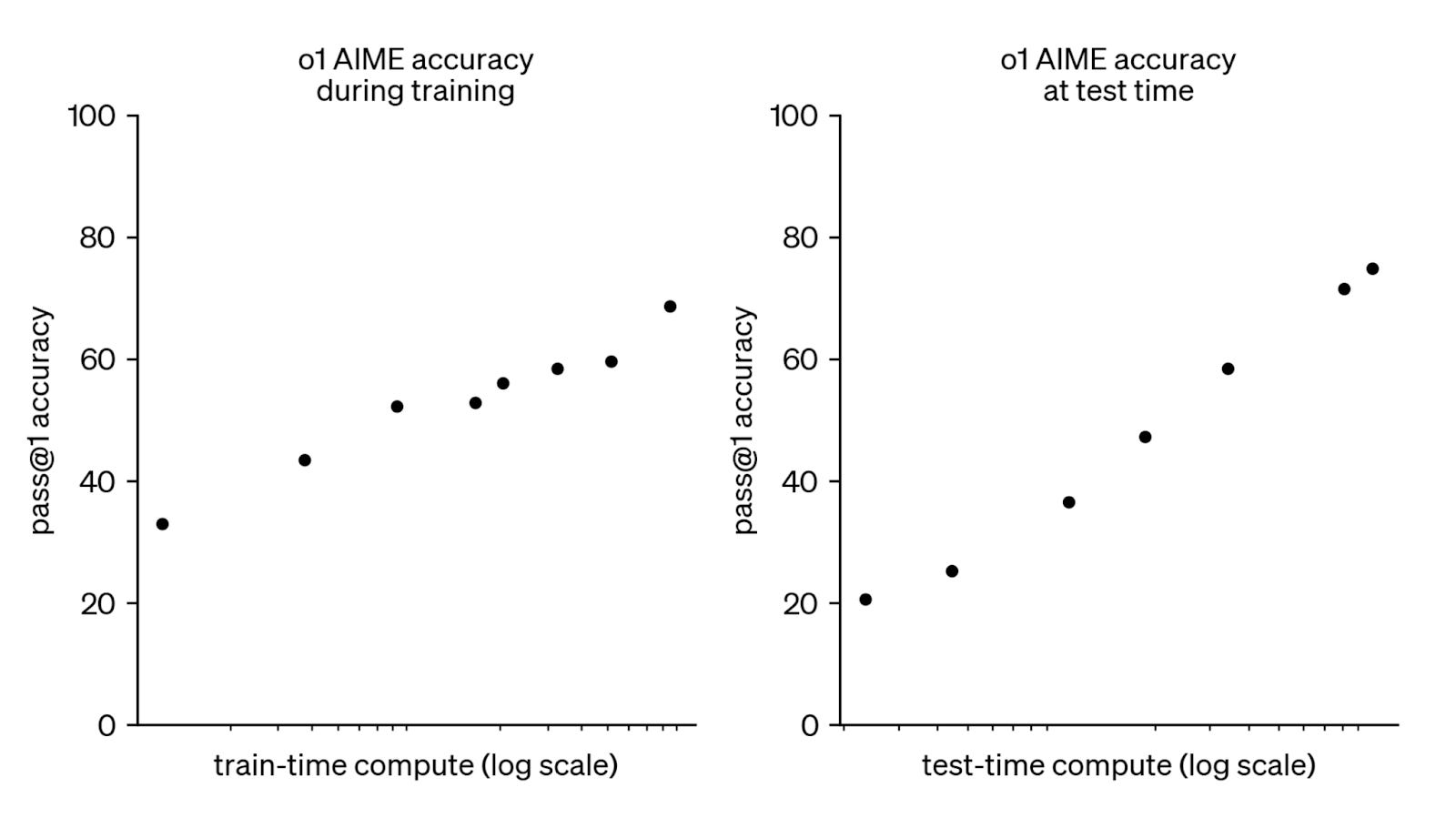

Inference scaling enables the model to allocate more compute resources to searching for the correct answer through intermediate reasoning steps or “thinking”. Models like the ‘o’ series from OpenAI spend more time thinking about the problem. During the thinking process, the model tends to generate multiple reasons or intermediate steps for a given problem.

Because the model now produces n number of responses for a single problem, it requires more computational resources. The more reasoning samples the models produce, the better it is for the model to select the most frequent answer as the final response.

This is essentially the crux of inference scaling, also known as test-time scaling.

What is Reasoning or Thinking for Inference?

We have established that few (compute) resources are required for general inference tasks, such as text summarization and creative writing. This is because such tasks are semantically and syntactically anchored. In other words, the outputs are based on the extracted pattern and the ordered sequence of the tokens. They are an end-to-end process – input in and output out. There is no intermediate process.

Reasoning, on the other hand, incorporates an intermediate process or the thinking process. It is where the model spends time processing and splitting out reasoning steps before providing the output.

Think of it as spending time planning a trip or vacation. You cannot simply take a handful of money and go on a vacation; you need to plan where you will stay, the places you will visit, the food you will eat, a shopping budget, and so on. Similarly, if you are solving a mathematical problem, you need to work out intermediate steps to find the correct answer.

In order for the model to reason properly, it needs three things:

Training on reasoning data to learn thinking or intermediate steps.

Reinforcement learning to identify the most suitable intermediate steps that lead to the final output.

Inference computing for thinking or producing intermediate steps before answering.

In this article, we will only focus on steps 1 and 3.

Chain-of-thoughts as Reasoning Data

When it comes to reasoning with data, the most common approach is to leverage the Chain-of-thought prompting-like dataset.

The CoT comprises input, steps, and output instead of input and output. When CoT-based data is provided to the LLM, the LLM tends to develop reasoning properties. And as the model scales in size according to the scaling laws, the model becomes more and more accurate in solving difficult reasoning problems.

To get an idea of what the CoT dataset looks like, check out NuminaMath-CoT in Huggingface here.

Training the LLM on the CoT prompting dataset enables the model to develop CoT reasoning capabilities, which is helpful in inference.

Test-time Scaling Methods

Let’s assume that the model has been trained and it is able to produce CoT, reasoning, or intermediate steps before providing the final answer. Now, the key thing to understand is that the model needs to produce various CoTs along with the final answer. This can be done using various approaches.

… OpenAI o1, a new large language model trained with reinforcement learning to perform complex reasoning. o1 thinks before it answers—it can produce a long internal chain of thought before responding to the user. – OpenAI

The most prominent models, such as the ‘o’ series from OpenAI, use scaled reinforcement learning to train their reasoning models. But there are other methods as well, like Tree-of-thought, Best-of-N, Beam search, etc. Let’s discuss some of these methods briefly. In this article, we will look at four methods used for test-time scaling.

1. Tree-of-Thought

Tree-of-Thought (ToT) extends Chain-of-Thought by exploring multiple reasoning paths simultaneously. This approach creates a branching structure of potential solutions.

Two primary search strategies exist for traversing the reasoning tree:

Depth-First Search (DFS): Explores a single reasoning path completely before backtracking

Breadth-First Search (BFS): Evaluates all possible next steps before proceeding deeper

DFS excels when:

The solution space has clear indicators of progress

Early detection of dead ends is possible

Memory constraints limit tracking multiple paths

BFS performs better when:

Multiple viable solution paths exist

The problem has misleading intermediate states

Computational resources allow parallel evaluation

ToT implementations have demonstrated significant improvements on complex reasoning tasks like mathematical problem-solving and logical puzzles.

2. Self-Consistency & Ensemble Voting

Self-consistency enhances reasoning by generating multiple independent solutions and selecting the most consistent answer. This approach compensates for the stochastic nature of language model outputs.

The process works as follows:

Generate N different reasoning chains for the same problem

Extract the final answer from each chain

Select the most frequent answer as the final response

For challenging problems, a weighted voting mechanism can improve results:

final_answer = argmax_a ∑(confidence(chain_i) × I(answer_i == a))

Where I is an indicator function that equals 1 when the condition is true.

Self-consistency methods have shown up to 30% improvement on complex reasoning benchmarks compared to single-pass approaches.

3. Monte Carlo Tree Search

Monte Carlo Tree Search (MCTS) is a powerful decision-making algorithm that helps language models improve their reasoning abilities. It works through four key phases:

Node Selection: The algorithm starts at the root and selects promising nodes to explore using strategies like Upper Confidence Bound on Trees (UCT).

Expansion: New child nodes are added to represent possible next steps in reasoning.

Simulation: The algorithm plays out potential solutions from the selected node.

Backpropagation: Results from simulations are used to update the value of previously visited nodes.

MCTS helps models balance:

Exploring new possibilities

Exploiting known good paths

Recent innovations like Speculative Contrastive-MCTS enhance this approach by adding:

Contrastive reward models to better evaluate node quality

Speculative decoding to speed up reasoning by 52%

Refined backpropagation that favors steady progress

This structured exploration approach has enabled smaller models to outperform larger ones on complex reasoning tasks, including surpassing OpenAI's o1-mini by 17.4% on multi-step planning problems.

4. s1: Simple test-time scaling

s1 presents a straightforward approach to improve language model reasoning with minimal resources. Unlike complex methods using millions of examples, s1 achieves impressive results through:

Training on just 1,000 carefully selected examples (s1K dataset)

Quick supervised fine-tuning (26 minutes on 16 H100 GPUs)

"Budget forcing" to control thinking time

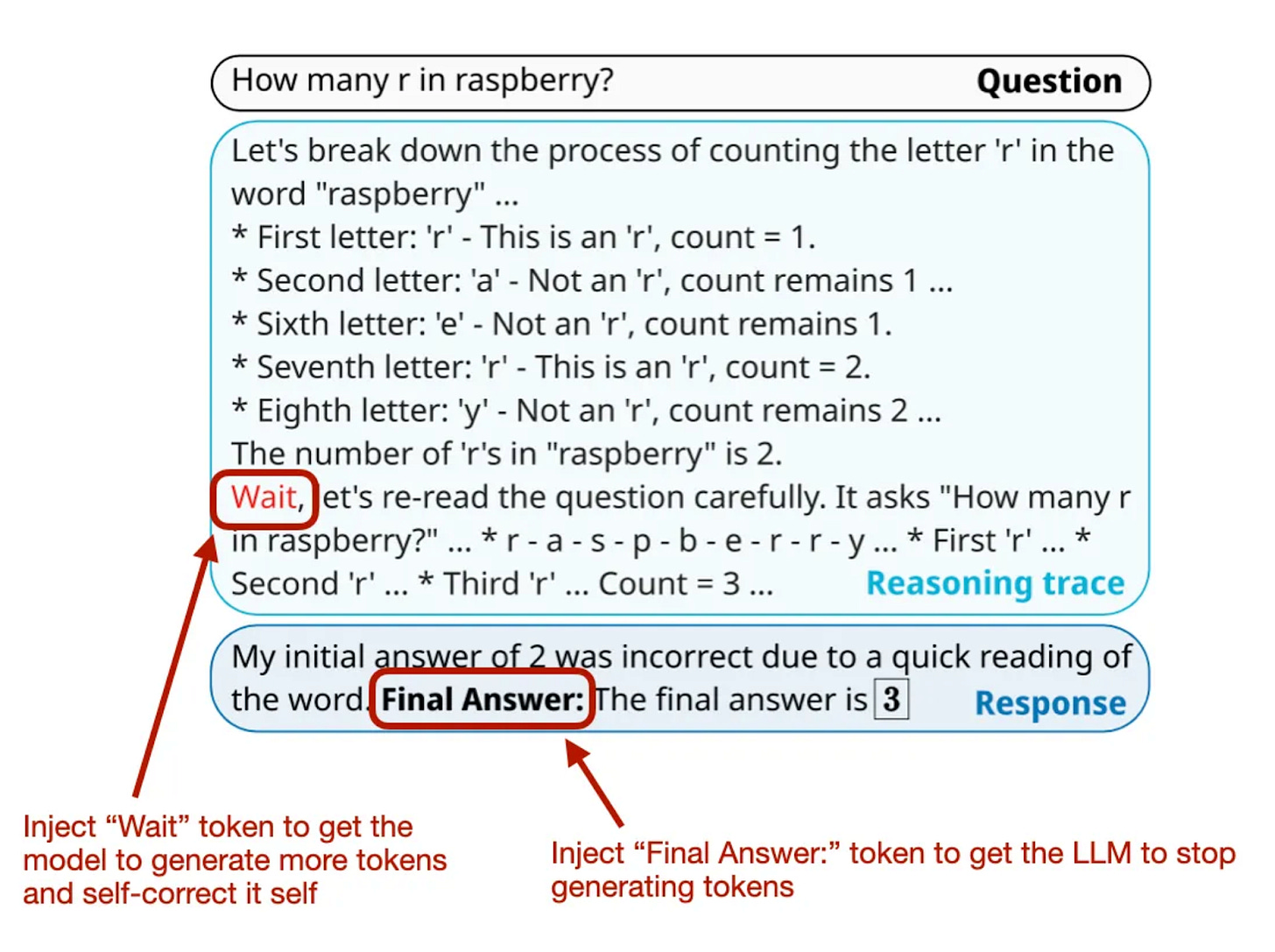

Budget forcing is key - it can limit thinking by forcefully ending the process after a set token count, or extend thinking by preventing stops and appending "Wait" prompts.

The results are remarkable - s1-32B shows clear test-time scaling behavior across benchmarks like MATH500 (93% accuracy), while being significantly more sample-efficient than competitors. This demonstrates that models already possess reasoning capabilities from pretraining, with fine-tuning merely activating these abilities.

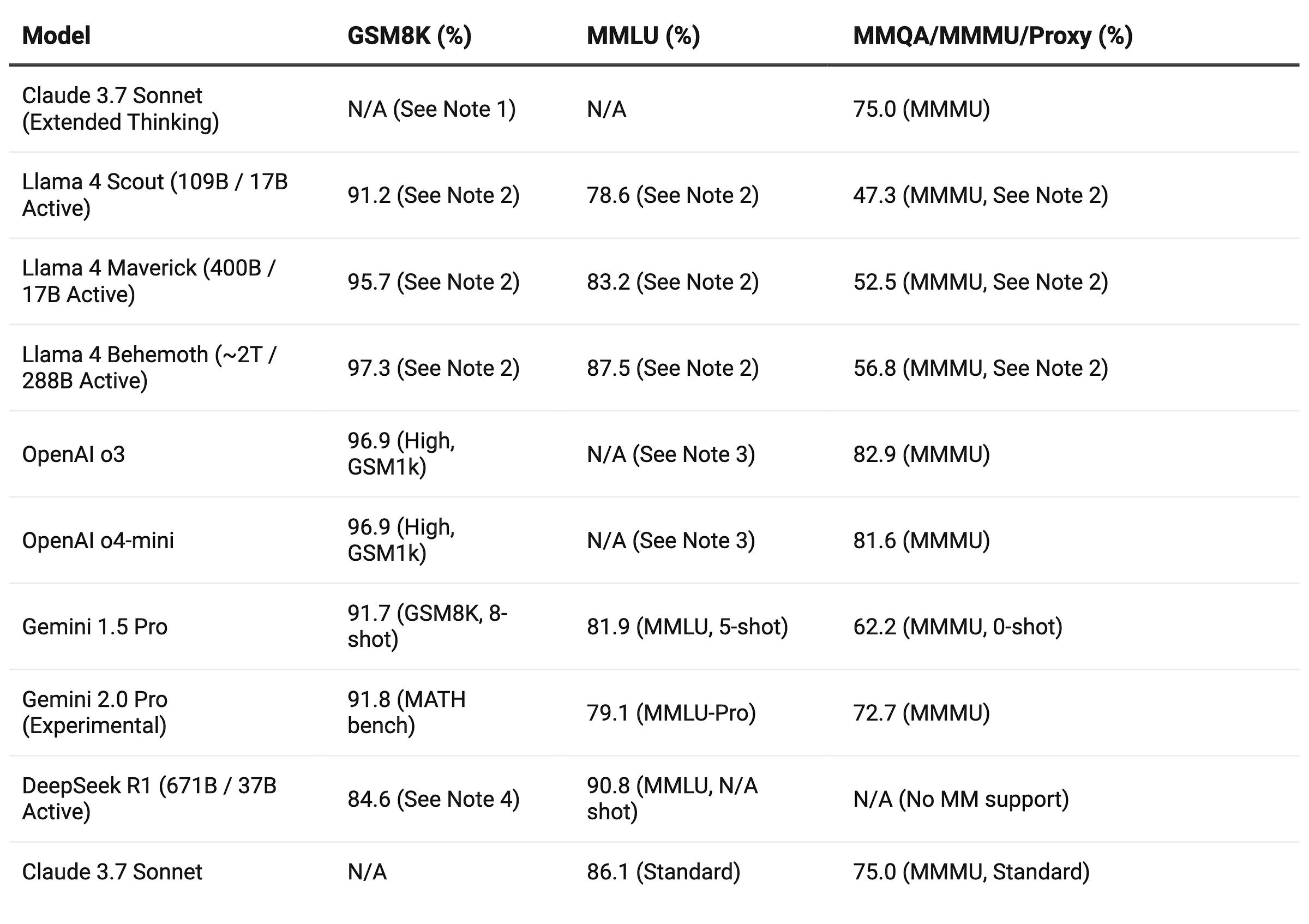

Benchmarking

Now, let’s see the benchmarking of some of the reasoning models in 2025.

In test-time scaling, models with extended reasoning capabilities show clear performance advantages. Claude 3.7 Sonnet with Extended Thinking demonstrates impressive results on specialized reasoning tasks (GPQA Diamond: 84.8%, MATH-500: 96.2%) while maintaining strong MMMU performance. The OpenAI o-series models excel across benchmarks, with o3 and o4-mini achieving near-perfect scores on GSM8K and leading MMMU performance. DeepSeek R1, while lacking multimodal support, shows outstanding performance on math-focused tests (MATH-500: 97.3%), highlighting how test-time scaling particularly benefits complex reasoning tasks that require multi-step thinking.

Conclusion

Inference scaling represents a significant advancement in how AI models reason through complex problems. Let's review the core concepts and highlights from our exploration.

Essential Definitions

⬩ Test-time scaling: Allocating more GPUs to an AI model during inference (when users are actually using it).

⬩ Test-time compute: The computational resources (measured in FLOPs) used during this phase.

⬩ Test-time search: The exploration process AI uses to find the right answer.

Critical Methods for Enhanced Reasoning

Several techniques have emerged to improve model reasoning capabilities:

Tree-of-Thought: Creates branching structures of potential solutions using either:

Self-Consistency: Generates multiple independent solutions and selects the most frequent answer.

Performance Impact

The benchmarking results demonstrate clear benefits:

OpenAI's o3 achieved 96.9% on GSM1k

Models with extended reasoning show remarkable improvements on specialized tasks

DeepSeek R1 reached 97.3% on MATH-500 benchmarks

Why This Matters

Inference scaling transforms how models approach reasoning tasks. By dedicating computational resources to "thinking" through problems step-by-step, models can:

Generate multiple reasoning paths

Evaluate different solution strategies

Select the most consistent answers

This mirrors human problem-solving more closely than traditional approaches. Rather than simply pattern-matching or providing direct answers, these models work through problems methodically.

The future of AI reasoning depends on balancing computational resources with effective reasoning strategies. As inference scaling techniques continue to evolve, we can expect even more impressive results on complex reasoning tasks.