ARC-AGI In 2026: Why Frontier Models Still Don’t Generalize

ARC-AGI-2 exposes the real gap: generalization efficiency under budget constraints, where refinement loops and test-time training matter more than raw model scores.

TLDR: This blog explains why ARC-AGI still matters in 2026—even as ARC-AGI-1 looks “solved” at the top end. You’ll get a clean history of the benchmark, a researcher-correct way to read the ARC Prize leaderboard (private vs semi-private, verification, cost-per-task), and a practical breakdown of what’s actually winning: refinement harnesses, program synthesis, and thinking budgets, not raw model weights alone. It also introduces ARC-AGI-3 and why interactive environments force agentic evaluation. You’ll leave with defensible takeaways, clearer score interpretation, and concrete research directions.

[Updated on 2026-01-22]

The “Solved” Paradox: When a Benchmark Stops Being a Benchmark

By early 2026, a strange tension defines the ARC-AGI landscape. On paper, top-tier systems now post accuracy scores that rival—or surpass—human baselines on ARC-AGI-1. And yet, no one seriously claims these models reason like humans. The benchmark is “solved,” but the problem isn’t.

That is the core paradox.

ARC-AGI-1, the original grid-based abstraction test, has yielded to systems that can brute-force their way to 85%+ accuracy using tightly engineered scaffolds, multi-shot refinement loops, and large thinking budgets. But ARC-AGI-2—its more brittle, contamination-resistant successor—still reveals what base models lack: efficient, structure-sensitive generalization from first principles.

To understand why, you need to know what ARC is actually testing.

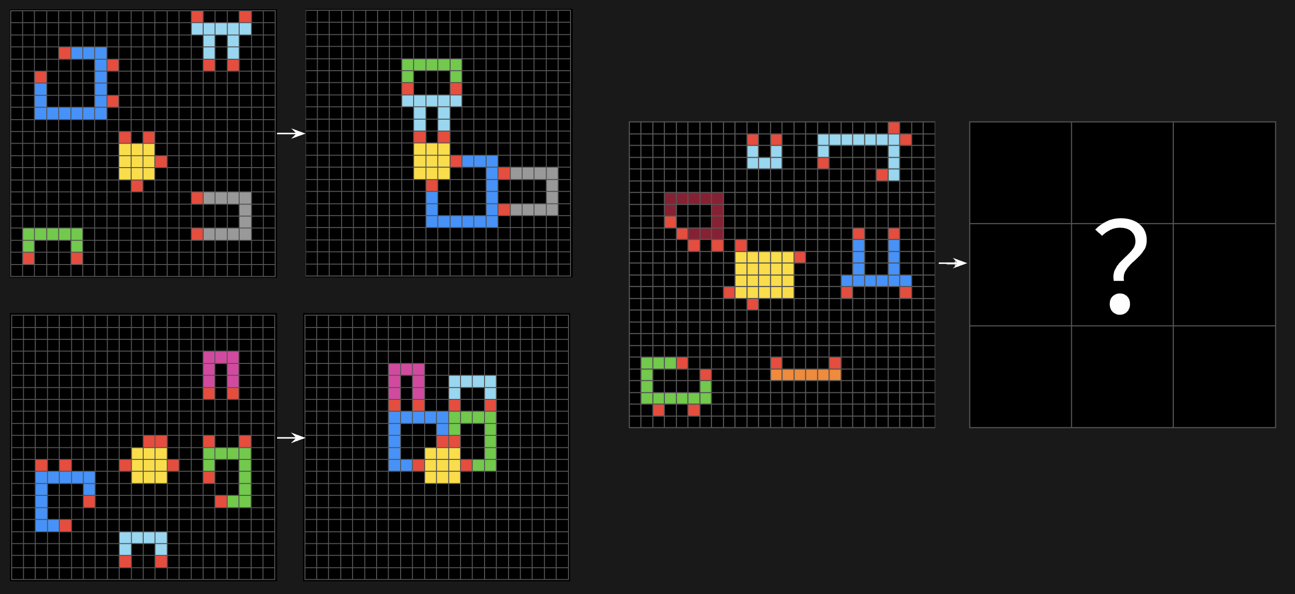

The Abstraction and Reasoning Corpus (ARC), introduced by François Chollet in 2019, was designed to probe a system’s ability to acquire novel skills from minimal examples. Not by gradient descent, but by analogical transfer and local abstraction. This principle survives in ARC-AGI today—but in a new operational framing: skill acquisition efficiency. Not “can it solve the task,” but how much scaffolding and compute does it require to get there?

Three distinctions matter here. A model’s capability refers to what its weights encode. A system’s capability refers to what it can achieve with orchestration: search procedures, memory augmentation, and refinement harnesses that adapt to the task at hand. And budget sensitivity, meaning how the score degrades under tighter compute or time constraints, is where the real separation happens.

This is what ARC has become: not a pass/fail IQ test for frontier models, but a stress test for generalization under resource constraints. A way to measure whether a system can acquire new reasoning procedures without over-relying on pretraining artifacts or excessive post hoc search.

ARC-AGI, in its current form, is no longer about raw intelligence. It’s about generalization efficiency—and the systems-level tradeoffs required to reach it.

A Short History of ARC: From Chollet’s Thesis to a Live Research Program

ARC didn’t begin as a leaderboard; it began as a thesis about intelligence. The Abstraction and Reasoning Corpus (ARC) was introduced in 2019 by François Chollet to operationalize a bold claim: intelligence is not pattern recognition at scale, but the efficiency with which a system can acquire new skills from sparse data. That framing remains the benchmark’s core.

2019: The ARC benchmark and “On the Measure of Intelligence.” Chollet’s paper defined intelligence as the efficiency of skill acquisition across a distribution of tasks. ARC instantiated this via a few-shot generalization challenge using abstract grid puzzles, each requiring the discovery of a latent transformation rule from a handful of demonstration pairs, with evaluation tasks that do not repeat the small public training set. This made ARC resistant to memorization and to large-scale pretraining.

2020: The Kaggle ARC Challenge and the program search era. The first ARC competition revealed a dominant strategy: brute-force program synthesis, often with handcrafted DSLs. Winning entries hardcoded primitives, leveraged enumerative search, and exposed the difficulty of abstract generalization without symbolic scaffolding. The lesson: structure mattered more than the number of parameters.

2024–2025: The ARC Prize reframes ARC as a systems-level benchmark. Launched in 2024, the ARC Prize expanded ARC into a multi-tiered evaluation framework. Across ARC-AGI-1 and ARC-AGI-2 (the latter released in 2025), it introduced public, semi-private, and private test splits to combat contamination; cost-per-task metrics to reward efficiency; and refinement loop categories to benchmark not just models, but systems. This shifted ARC from a static leaderboard to a live measurement infrastructure.

ARC-AGI-2, in particular, is a response to a known failure mode in benchmark culture: once scores climb, brute force and leakage swamp insight. Labs can train a model to crush a benchmark without delivering the improvement users are looking for. For instance, a model asked to write a short email based on some documentation may spend a long time reasoning over the whole document when the request does not require it.

By tightening task design and efficiency constraints, ARC-AGI-2 reclaims its role as a generalization benchmark—one that still bites.

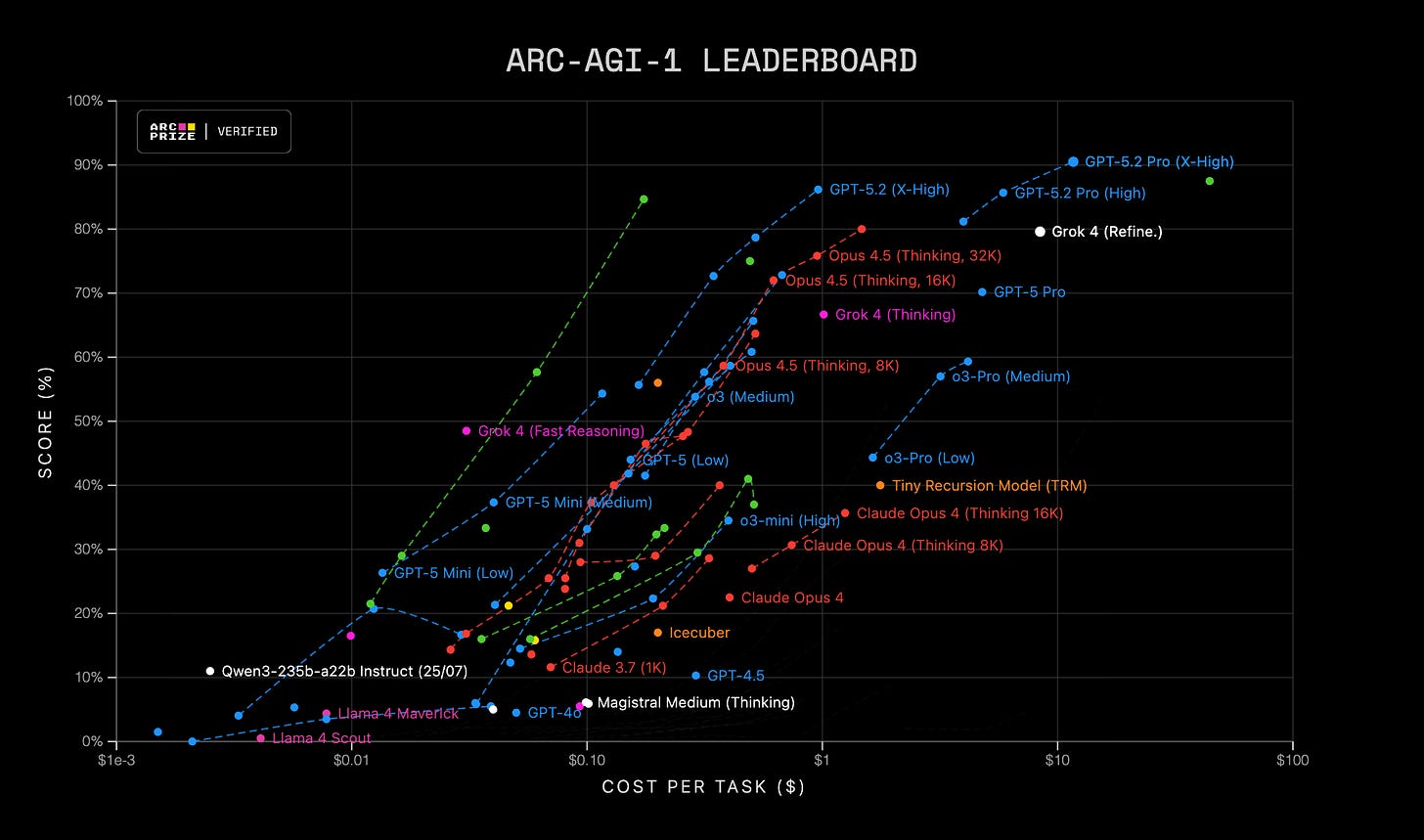

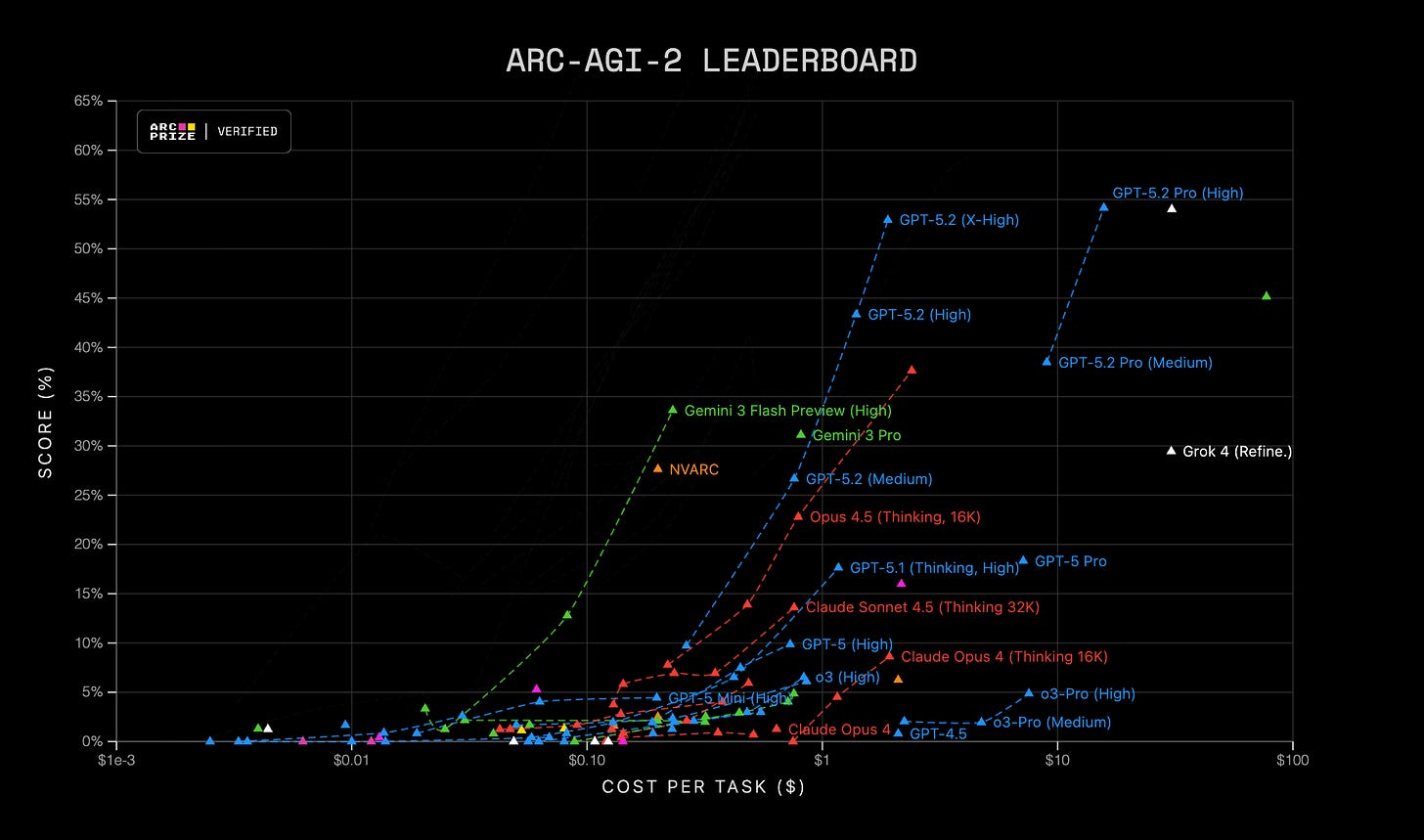

The January 2026 Leaderboard Reality

By January 2026, interpreting the ARC Prize leaderboard requires more than checking top-line accuracy. The ARC-AGI benchmark has evolved into a systems-level stress test, with budget constraints, evaluation rigor, and model-scaffold integration dominating. Accuracy alone doesn’t tell the story. It’s how that accuracy was achieved, under what conditions, and with what reproducibility guarantees.

How to read the leaderboard correctly:

ARC-AGI-1 vs ARC-AGI-2. ARC-AGI-1 has been effectively saturated at the top end, with multiple systems scoring >85%. ARC-AGI-2, released in 2025, introduced adversarial task construction and contamination resistance to measure generalization under abstraction pressure.

Private vs Semi-Private splits. ARC-AGI-2 uses three sets: public (for dev), semi-private (score visible but not prize-eligible), and private (final evaluation set). Only private scores determine prize standing.

Preview entries and cost tracking. Preview entries on the leaderboard may show high performance but remain unverified. Prize-eligible submissions must publish compute budgets and cost-per-task metrics, consistent with reproducibility policies.

Strict evaluation rules. Answers must match the ground-truth output exactly. Any discrepancy in length, structure, or formatting fails the task. No partial credit is given, and attempts are limited to two per test input.
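
To make the exact-match rule concrete, here is a minimal Python sketch of how a single test input could be scored. The names `task_solved` and `max_attempts` are illustrative assumptions, not the ARC Prize scoring code, and the attempt cap is passed in as a parameter rather than hard-coded.

```python
from typing import List

Grid = List[List[int]]  # a grid is a list of rows of integer color codes


def task_solved(attempts: List[Grid], target: Grid, max_attempts: int) -> bool:
    """True if any of the first `max_attempts` grids equals the target exactly."""
    return any(attempt == target for attempt in attempts[:max_attempts])


target = [[1, 0], [0, 1]]
near_miss = [[1, 0], [0, 0]]          # one wrong cell: no partial credit
wrong_shape = [[1, 0, 0], [0, 1, 0]]  # wrong dimensions also fail

# The correct grid is within the attempt cap, so the task counts as solved.
print(task_solved([near_miss, target], target, max_attempts=2))               # True
# The correct grid arrives too late, so the task counts as failed.
print(task_solved([near_miss, wrong_shape, target], target, max_attempts=2))  # False
```

Because scoring is all-or-nothing, a system that is “almost right” on every task scores the same as one that is wrong on every task, which is why verification before submission matters so much.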

As of Jan 2026, three frontier model families are commonly used as reference points when reading the ARC Prize leaderboard:

GPT-5.2 (OpenAI): Listed as a leaderboard entry with published scores and $/task, but ARC Prize’s core framing emphasizes that system harnesses and budgets often drive the outcome.

Claude Opus 4.5 (Thinking, 64k) (Anthropic): Reported by ARC Prize (Dec 2025) as the top verified commercial model at the time (37.6% for $2.20/task), making it a solid baseline anchor for budgeted model-only performance.

Gemini 3 Deep Think (Google/DeepMind): Mentioned in ARC Prize’s Dec 2025 analysis in the context of “thinking” behavior (reasoning-token usage) and appears as a preview entry on the leaderboard.

ARC-AGI-2 scores hinge less on pretraining and more on orchestration—what search policies are used, how memory is handled, and whether compute is spent wisely.

In short, the ARC Prize leaderboard is no longer measuring “raw intelligence.” It’s measuring generalization efficiency under constraint—a more faithful signal of system-level reasoning.

The Technical Meta: How Systems Win ARC-AGI in 2026

By now, the winning pattern is clear. The top ARC-AGI systems don’t rely on base model predictions alone. They wrap models inside refinement loops: iterative, scaffolded processes that propose, verify, and correct until a passing output is found. This isn’t prompt engineering. It’s system engineering.

The field has started to distinguish between three modes of post-deployment adaptation:

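Test-time training (TTT): Updates the model’s weights at inference, typically by fine-tuning on the task’s demonstration pairs or augmentations of them. It is permitted under ARC Prize rules and featured in top ARC Prize 2024 Kaggle entries, but it adds compute per task.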

Test-time search: Samples many completions from the model (via temperature sweeps, beam search, etc.) and selects the best one. It’s cheap, parallelizable, and increasingly baseline.

Test-time refinement: Builds an internal loop around the model—where outputs are evaluated, critiqued, and modified using auxiliary heuristics or verifier models. It’s slower, but more powerful.
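
As a rough illustration of test-time search, the sketch below samples several candidate grids and keeps the most frequent ones. Majority voting is only one possible selection rule and is an assumption here; `fake_sampler` is a hypothetical stand-in for a stochastic model call, with canned outputs so the example is deterministic.

```python
from collections import Counter
from typing import Callable, List, Tuple

Grid = Tuple[Tuple[int, ...], ...]  # tuples are hashable, so grids can be counted


def select_by_vote(sampler: Callable[[int], Grid], n_samples: int, top_k: int) -> List[Grid]:
    """Draw n_samples candidates and return the top_k most frequent distinct grids."""
    counts = Counter(sampler(i) for i in range(n_samples))
    return [grid for grid, _ in counts.most_common(top_k)]


# Hypothetical stand-in for a model: canned outputs indexed by sample number.
A: Grid = ((1, 0), (0, 1))
B: Grid = ((0, 1), (1, 0))
C: Grid = ((1, 1), (1, 1))
CANNED = [A, B, A, C, A, B]


def fake_sampler(i: int) -> Grid:
    return CANNED[i % len(CANNED)]


submissions = select_by_vote(fake_sampler, n_samples=6, top_k=2)
print(submissions == [A, B])  # True: A appears three times, B twice, C once
```

Voting never inspects why a candidate is right or wrong. That is the main difference from refinement, which feeds verification results back into the next proposal.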

The dominant technique across high-performing entries is program synthesis: rather than predicting the pixel-level output, systems write an explicit transformation program from input-output pairs, then execute it to generate the answer. This strategy enables better generalization and improves interpretability.
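
A toy version of this idea fits in a few lines. The sketch below enumerates short compositions of three grid primitives and returns the first program that reproduces every demonstration pair. The three-function DSL and the names `PRIMITIVES`, `run`, and `synthesize` are assumptions made for illustration; real entries use far richer DSLs or have a language model write the code.

```python
from itertools import product
from typing import List, Optional, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]


def flip_h(g: Grid) -> Grid:
    return [row[::-1] for row in g]      # mirror left-right


def flip_v(g: Grid) -> Grid:
    return g[::-1]                       # mirror top-bottom


def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]


PRIMITIVES = {"flip_h": flip_h, "flip_v": flip_v, "transpose": transpose}


def run(program: Tuple[str, ...], grid: Grid) -> Grid:
    """Apply the named primitives left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def synthesize(train_pairs: List[Pair], max_depth: int = 2) -> Optional[Tuple[str, ...]]:
    """Return the shortest program consistent with all demonstration pairs, if any."""
    for depth in range(max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, x) == y for x, y in train_pairs):
                return program
    return None


# Demonstrations of a clockwise quarter turn.
train_pairs = [
    ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
    ([[5, 6, 7]], [[5], [6], [7]]),
]
program = synthesize(train_pairs)
prediction = run(program, [[1, 0], [0, 0]])
print(program, prediction)
```

The found program is an explicit, inspectable object: it can be executed on new inputs, checked against each demonstration, and debugged, none of which is possible with a directly predicted grid.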

Typical ARC-AGI-2 system pipelines now look like this:

Step 1: The base model proposes a transformation rule, often in code or logic format.

Step 2: A verifier executes the rule on the task’s demonstration inputs and compares the results to the demonstration outputs, since ground truth for the test input is hidden.

Step 3: If it fails, a patch module refines the rule—via search, heuristics, or critique.

Step 4: The revised rule is re-evaluated and either accepted or looped again.

Step 5: Final outputs are submitted only once the rule reproduces every demonstration pair exactly.

Step 6: All steps are subject to compute budget and attempt constraints.
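
The steps above can be sketched as a small propose, verify, repair loop with a call budget. This is a simplified skeleton under stated assumptions: `propose` and `repair` stand in for model calls, `make_toy_model` is a hypothetical fixed list of guesses rather than a real model, and verification runs against the demonstration pairs because the test answer is not available to the system.

```python
from typing import Callable, List, Optional, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]
Program = Callable[[Grid], Grid]


def verify(program: Program, train_pairs: List[Pair]) -> List[int]:
    """Return the indices of demonstration pairs the program gets wrong."""
    failures = []
    for i, (x, y) in enumerate(train_pairs):
        try:
            ok = program(x) == y
        except Exception:
            ok = False  # a crashing program counts as a failure
        if not ok:
            failures.append(i)
    return failures


def refine(propose, repair, train_pairs: List[Pair], max_calls: int) -> Tuple[Optional[Program], int]:
    """Loop until a program passes all demonstrations or the call budget runs out."""
    program = propose(train_pairs)
    calls = 1
    while True:
        failures = verify(program, train_pairs)
        if not failures:
            return program, calls
        if calls >= max_calls:
            return None, calls
        program = repair(program, failures, train_pairs)
        calls += 1


def make_toy_model():
    """Hypothetical model stand-in: walks through a fixed list of guesses."""
    guesses = iter([
        lambda g: g,                         # first guess: identity
        lambda g: [row[::-1] for row in g],  # second guess: mirror left-right
        lambda g: g[::-1],                   # third guess: mirror top-bottom
    ])
    return (lambda pairs: next(guesses)), (lambda prog, fails, pairs: next(guesses))


train_pairs = [([[1, 2], [3, 4]], [[3, 4], [1, 2]])]
program, calls = refine(*make_toy_model(), train_pairs, max_calls=5)
print(calls)  # 3: two failed guesses, then a rule that passes verification
```

With `max_calls=2` the same toy model returns `None`, which is the budget constraint in miniature: the correct rule exists, but the system runs out of calls before finding it.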

This meta changes everything: model score is not portable without the harness. GPT-5.2, Claude Opus 4.5, and Gemini 3 all show variable performance depending on how refinement is engineered.

What ARC-AGI measures now is not model intelligence in isolation, but the quality of a system’s design under thinking budgets and feedback constraints.

What ARC-AGI-2 Measures That ARC-AGI-1 Could Not

ARC-AGI-2 didn’t just raise the difficulty; it redefined the benchmark’s purpose. Released in 2025 as the centerpiece of the ARC Prize framework, ARC-AGI-2 was designed to close loopholes exposed in ARC-AGI-1: brute-force pattern matching, overfitting to known grid motifs, and unrealistic inference-time compute budgets.

Its core aim is to test generalization efficiency: how well a system can infer abstract rules from few-shot demonstrations under resource constraints. Instead of rewarding systems that explore every possibility, ARC-AGI-2 rewards systems that prioritize inference-time precision.

Key differences between ARC-AGI-1 and ARC-AGI-2:

Brute-force resistance. ARC-AGI-2 puzzles are selected adversarially to defeat large-scale sampling or search. Surface-level heuristics break more often, and success requires internal task modeling.

Contamination control. ARC-AGI-2’s semi-private and private splits are held out from public release, which reduces the risk of dataset memorization.

Generalization under budget. Each system is evaluated not just on accuracy but also on cost-per-task, attempt count, and compute multiplier. More thinking helps—but it’s expensive.

Scoring as a function of efficiency. A system scoring 85% by spending $200/task is not superior to one scoring 81% at $10/task. ARC-AGI-2 exposes this tradeoff via its leaderboard framing and prize rules.

Harder abstraction gaps. ARC-AGI-2 tasks often require compositional reasoning, global rule induction, and multi-step transformations—not just visual pattern inference.

The benchmark now enforces an implicit efficiency frontier: systems must improve accuracy without incurring higher costs. That’s the real signal. High performance without high discipline doesn’t qualify.
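
One way to make the efficiency frontier concrete is to treat each leaderboard entry as a (score, cost) point and keep only the entries that no cheaper entry matches or beats. The sketch below does that. The entry names and the two lower-scoring rows are hypothetical, while the 85% at $200/task and 81% at $10/task figures reuse the example above.

```python
from typing import List, NamedTuple


class Entry(NamedTuple):
    name: str
    score_pct: float      # percentage of tasks solved
    cost_per_task: float  # USD per task


def efficiency_frontier(entries: List[Entry]) -> List[Entry]:
    """Keep entries whose score beats every entry that costs the same or less."""
    frontier: List[Entry] = []
    best = float("-inf")
    for e in sorted(entries, key=lambda e: (e.cost_per_task, -e.score_pct)):
        if e.score_pct > best:
            frontier.append(e)
            best = e.score_pct
    return frontier


def marginal_cost_per_point(cheap: Entry, costly: Entry) -> float:
    """Extra dollars per task paid for each additional percentage point."""
    return (costly.cost_per_task - cheap.cost_per_task) / (costly.score_pct - cheap.score_pct)


entries = [
    Entry("big-budget", 85.0, 200.0),
    Entry("lean", 81.0, 10.0),
    Entry("dominated", 60.0, 12.0),  # hypothetical: costs more than "lean", scores less
    Entry("tiny", 30.0, 1.0),        # hypothetical
]
frontier = efficiency_frontier(entries)
print([e.name for e in frontier])                        # ['tiny', 'lean', 'big-budget']
print(marginal_cost_per_point(entries[1], entries[0]))   # 47.5
```

Note that the big-budget entry still sits on the frontier, since nothing cheaper scores higher. The marginal cost is what exposes the tradeoff: each of its four extra points costs $47.50 more per task.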

ARC-AGI-2 is no longer a benchmark of prediction. It’s a stress test for robust, budgeted generalization—and a forcing function for systems that learn to think under pressure.

ARC-AGI-3: Evolution From Static Grids to Interactive Environments

ARC-AGI-3 marks a turning point—not in difficulty, but in modality. Where ARC-AGI-1 and ARC-AGI-2 presented closed-grid tasks with fixed I/O pairs, ARC-AGI-3 introduces interactive reasoning environments that require agents to act, probe, and discover in real time.

This shift redefines what it means to “evaluate generalization.” Solving the task is no longer about identifying a pattern—it’s about navigating uncertainty, testing hypotheses, and selecting actions based on evolving beliefs. ARC-AGI-3 isn’t a puzzle bank. It’s a lab environment.

Key structural changes include:

Tasks are embedded in interactive environments with hidden rules.

Agents can perform actions to alter the state before producing an answer.

Success depends not on guessing the answer directly, but on figuring out what information to gather before guessing.

This makes ARC-AGI-3 a true agentic evaluation benchmark. Good performance now depends on capabilities traditionally outside the LLM fine-tuning loop:

Memory: Agents must remember what they’ve seen or tried at each step. Stateless models break down quickly.

Exploration policy: Random trial-and-error is inefficient. The agent must plan queries that reduce uncertainty.

Tool use: Some environments require invoking symbolic or procedural tools to simulate outcomes or track state.

Hypothesis testing: Reasoning becomes an experimental process: propose, test, revise.

Credit assignment: Success may hinge on a single correct decision five steps back. This demands planning over long horizons.
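
These capabilities can be illustrated with a deliberately tiny interactive setting. In the sketch below, an agent must identify a hidden rule by choosing probes, remembering observations, and discarding hypotheses that disagree with them. Everything here is an assumption made for illustration: `HiddenRuleEnv`, the four integer rules, and the probe-selection heuristic are not the ARC-AGI-3 interface or its environments.

```python
from typing import Callable, Dict, List, Tuple

Rule = Callable[[int], int]

# Hypothetical hypothesis space the agent considers.
HYPOTHESES: Dict[str, Rule] = {
    "add_one": lambda x: x + 1,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
    "negate": lambda x: -x,
}


class HiddenRuleEnv:
    """Toy environment: the agent sees outcomes of its actions, never the rule."""

    def __init__(self, rule_name: str) -> None:
        self._rule = HYPOTHESES[rule_name]
        self.steps = 0

    def act(self, x: int) -> int:
        self.steps += 1
        return self._rule(x)


def most_informative_probe(live: List[str], probes: List[int]) -> int:
    """Exploration policy: pick the probe that splits live hypotheses the most."""
    return max(probes, key=lambda x: len({HYPOTHESES[h](x) for h in live}))


def identify_rule(env: HiddenRuleEnv, probes: List[int], max_steps: int) -> Tuple[List[str], List[Tuple[int, int]]]:
    live = list(HYPOTHESES)
    history: List[Tuple[int, int]] = []  # memory of (action, observation)
    while len(live) > 1 and env.steps < max_steps:
        x = most_informative_probe(live, probes)
        obs = env.act(x)
        history.append((x, obs))
        # Hypothesis testing: drop every rule that disagrees with the observation.
        live = [h for h in live if HYPOTHESES[h](x) == obs]
    return live, history


env = HiddenRuleEnv("double")
live, history = identify_rule(env, probes=[0, 1, 2], max_steps=5)
print(live, history)  # ['double'] [(1, 2), (0, 0)]
```

The agent needs two actions here because its first probe cannot separate `add_one` from `double`. A stateless agent that forgot the first observation would have no way to combine the two results.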

ARC-AGI-3 doesn’t replace ARC-AGI-2—it expands it into a different axis. Static benchmarks test extrapolation. Interactive benchmarks test adaptive behavior.

As of January 2026, ARC-AGI-3 remains in preview with limited public testbeds, but early results show one thing clearly: agentic evaluation is no longer optional. ARC has entered the exploration era.

Research Takeaways: Claims You Can Defend in a Lab Meeting

By early 2026, the ARC benchmark family offers a well-structured lens on generalization under constraints. The following takeaways represent stable, low-controversy conclusions grounded in evaluation design, leaderboard data, and ARC Prize documentation.

ARC-AGI-2 is the most defensible generalization benchmark for systems today. Its semi-private and private splits, contamination safeguards, and adversarial task construction mitigate many legacy failure modes.

Refinement loops—not base models—are the dominant axis of progress. Most leaderboard-leading entries use structured, multi-step refinement policies around model predictions, not single-shot completions.

Harness design matters as much as pretraining scale. Identical models show ±20% variance depending on the scaffolding, search heuristics, and verification tools used at inference time.

Cost-per-task and compute multipliers are part of the result. ARC-AGI-2 explicitly evaluates systems under budget constraints; high scores at unbounded cost are not eligible for the prize.

Program synthesis remains the most interpretable and scalable interface. Systems that generate transformation rules—not pixel guesses—generalize better and are easier to debug.

Test-time search is now baseline; test-time refinement is the new ceiling. Sampling many completions works, but iterative repair loops win under strict evaluation rules.

ARC-AGI-3 will force evaluation into the agent domain. Interactive tasks with stateful environments, hypothesis probes, and credit assignment challenges require memory and long-horizon reasoning.

The benchmark is evolving toward system-level efficiency, not model-level cleverness. As ARC shifts toward active inference and tool use, single-model evaluations become less informative.

These takeaways frame ARC not as a “final exam,” but as a structured audit of generalization efficiency under pressure.

FAQs

These frequently asked questions are designed for technical clarity and search discoverability. Each answer restates the core question, defines key concepts, and offers standalone insight into the ARC benchmark ecosystem as of January 2026.

What is ARC-AGI, and what does it measure?

ARC-AGI is a benchmark series designed to measure generalization efficiency in artificial systems. It evaluates whether a model or system can infer abstract rules from minimal demonstrations, under strict computational and attempt constraints. Unlike task-specific benchmarks, ARC-AGI covers novel reasoning puzzles with no shared ontology. The core metric is not just accuracy, but accuracy under budgeted inference, making it a signal for reasoning under pressure rather than memorization or brute force.

What is the difference between ARC-AGI-1 and ARC-AGI-2?

ARC-AGI-1 and ARC-AGI-2 differ in construction, evaluation policy, and robustness. ARC-AGI-1 uses a static, hand-curated dataset released in 2019, now heavily saturated by training exposure and brute-force solutions. ARC-AGI-2, launched with ARC Prize 2025, introduces private and semi-private splits, adversarial task design, and cost-per-task evaluation. Its goal is to test generalization under abstraction, not just pattern completion. Scores on ARC-AGI-2 are generally lower but more meaningful.

Why did ARC-AGI-1 stop being the frontier stress test?

ARC-AGI-1 stopped being a reliable stress test because leading systems began to overfit. The dataset became widely used in pretraining, and brute-force program search could solve many puzzles without true understanding. As a result, scores above 85% became common—but without proof of reasoning. ARC-AGI-2 was introduced to reassert difficulty via holdout sets, compute restrictions, and adversarial puzzle construction, restoring the benchmark’s diagnostic value.

What is a refinement harness/refinement loop?

A refinement harness is a structured wrapper that iteratively improves a model’s initial guess. A refinement loop typically includes components like a proposer (model), a verifier, and a repair mechanism. These systems don’t rely on the first output—they check, patch, and re-evaluate until a solution is found. Refinement harnesses are dominant in ARC Prize submissions because the benchmark rewards exact matches and allows multiple attempts. This setup enables systems to trade time and compute for accuracy.

Test-time training vs test-time search vs test-time refinement: what’s the difference?

Test-time training (TTT) modifies model weights during inference, typically via gradient updates on the task’s demonstration pairs. It is allowed in ARC Prize settings and was used by top ARC Prize 2024 Kaggle entries, though it adds compute per task.

Test-time search samples multiple completions from a frozen model and selects the best.

Test-time refinement builds an explicit loop that critiques and revises outputs, often using external tools or auxiliary models. While TTT is powerful, test-time refinement is currently the most reliable method for boosting ARC performance when model weights cannot be updated, as with API-only models.

Why does program synthesis work so well on ARC-style tasks?

Program synthesis outperforms direct prediction on ARC tasks because it mimics how humans solve rule-based puzzles: by constructing transformation procedures. Instead of predicting outputs token-by-token or pixel-by-pixel, systems write a symbolic function that explains input-output mappings. This abstraction improves generalization, debuggability, and alignment with task intent. It also supports verification, since the proposed program can be executed and compared to ground truth deterministically.

What do “thinking budgets” mean, and how do they affect scores?

Thinking budgets refer to constraints on how much compute or how many steps a system can use per task. In ARC-AGI-2, this appears as cost-per-task (e.g., dollars, FLOPs, or model calls). Systems with unlimited budgets can brute-force many puzzles, but ARC-AGI penalizes that. The result is an “efficiency frontier”: a system is evaluated not just on accuracy, but on how economically that accuracy is achieved. Longer thinking can improve scores—but only up to a point.

How should researchers interpret cost-per-task and compute multipliers?

Cost-per-task is a central metric in ARC-AGI-2. It reflects how much compute or money a system spends per task attempted, whether or not the task is solved. Compute multipliers indicate how many model calls or iterations were required compared to a baseline. A system that solves 85% of puzzles by spending $200/task is less competitive than one that scores 81% at $10/task. These metrics shift focus from raw accuracy to generalization efficiency, encouraging realistic deployment considerations.

Why do base LLMs stall near noise-level on ARC-AGI-2 without scaffolding?

Base large language models often score <25% on ARC-AGI-2 tasks without scaffolding because the tasks require symbolic manipulation, rule inference, and abstraction—skills not strongly represented in next-token prediction. Without a harness to structure reasoning, these models revert to pattern recall. ARC-AGI-2’s adversarial puzzles break shallow correlations, exposing the limits of pure scaling. Only when wrapped in refinement loops or synthesis scaffolds do these models begin to approach competitive performance.

What is ARC-AGI-3, and why does “interactive” change evaluation?

ARC-AGI-3 is an interactive reasoning benchmark that evaluates agents instead of static predictors. Unlike earlier versions, it presents environments where rules must be discovered through actions. Agents can probe, track state, and experiment to learn task dynamics. This shift tests memory, exploration policy, and credit assignment—capabilities beyond what static I/O evaluation captures. ARC-AGI-3 represents the future of agentic evaluation: systems that don’t just infer, but learn through doing.