Claude Sonnet 4 Vs Opus 4.1: Which Model To Use For Coding

A short comparison of Claude Opus 4.1 and Claude Sonnet 4 to help you select the appropriate model for your use case.

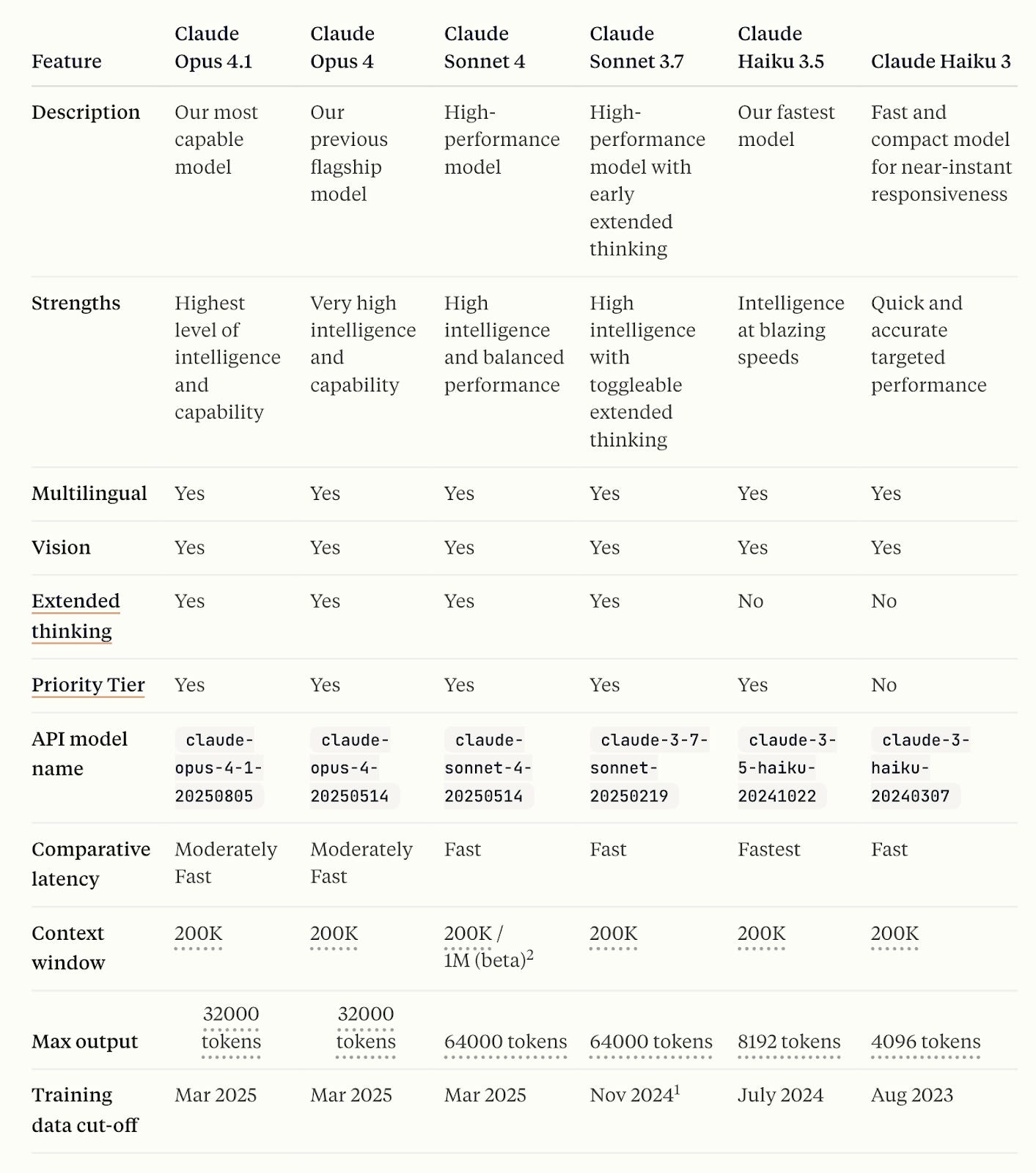

In the previous two blogs, I wrote about Claude Code for Productivity Workflow and three best practices for using Claude Code. Today I want to share more about the intelligence behind Claude Code, i.e., the LLMs. Claude Code uses three models: Haiku, Sonnet, and Opus. Haiku, being the cheapest and fastest model, is good for lightweight workloads such as text summarisation and formatting.

For more complex and agentic workflows, Claude Sonnet 4 and Claude Opus 4.1 are the better choices.

These two Claude 4 series models have been widely adopted for both enterprise and personal use. They are well trusted, and in this blog I want to touch upon why they are good for prototyping, product development, and coding.

Why Claude 4 Dominates AI Coding Benchmarks

Product teams struggle to justify AI coding investments when most models deliver inconsistent results and unclear ROI compared to human developers.

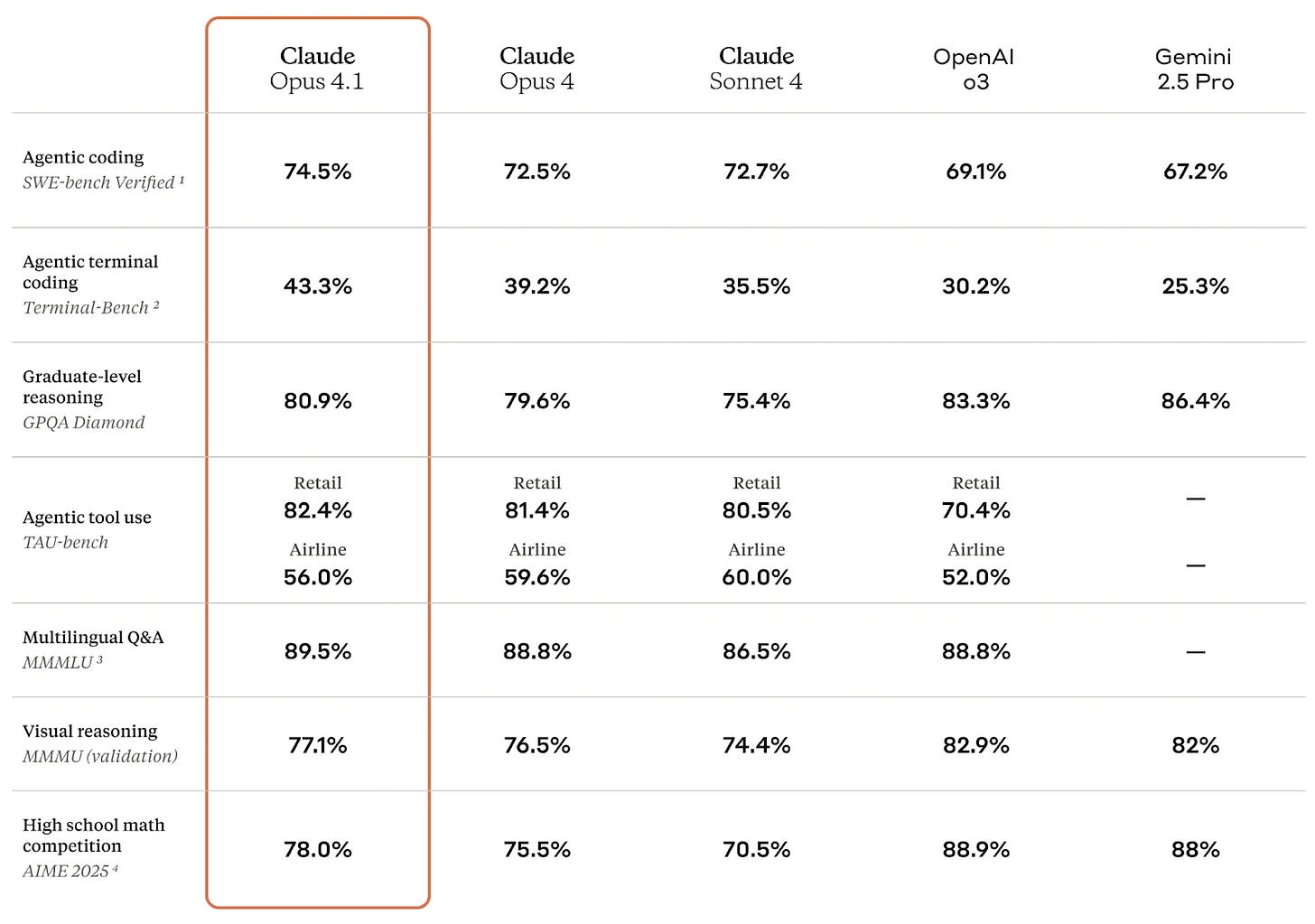

Claude 4 models solve this by delivering 74.5% accuracy on real-world coding tasks, with documented enterprise ROI and strategic adoption by GitHub.

Why does benchmark dominance matter for product teams?

In my opinion, it matters most when you face tight deadlines and need AI that performs like a senior engineer, not like a junior one who makes errors.

Claude Opus 4.1 achieves 74.5% accuracy on SWE-bench Verified. It also hits 43.2% on Terminal-bench, simulating command-line workflows that stump competitors.

In my tests, Claude 4 handled full repositories without losing context, unlike earlier models that fragmented on long tasks.

What if you could measure ROI directly?

GitHub integrated Claude Sonnet 4 as the default for Copilot, citing its edge in agentic coding for productivity gains.

Replit's Matt Palmer said, "Replit Agent is now powered by Claude Sonnet 4.0, bringing you the latest AI capabilities to help you build even better projects."

Cursor users call it a "cheat code" for coding speed.

Developers claim 40% faster development cycles.

How do these translate to action?

Pilot Claude 4 on one prototype: Compare time spent versus your baseline human process.

Track key metrics: Measure error rates and iteration speed over a sprint.

Scale based on data: If ROI hits 2x, integrate into daily workflows.
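The three steps above can be sketched as a small calculation. This is a minimal illustration, not a prescribed method: the `SprintStats` fields, the sample numbers, and the choice of "tasks per hour" as the ROI measure are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class SprintStats:
    """Measurements for one sprint (hypothetical fields and values)."""
    hours_spent: float     # total engineering hours on the prototype
    tasks_completed: int   # finished tickets or features
    defects_found: int     # bugs logged against the sprint's work


def throughput(stats: SprintStats) -> float:
    """Tasks completed per engineering hour."""
    return stats.tasks_completed / stats.hours_spent


def defect_rate(stats: SprintStats) -> float:
    """Defects per completed task."""
    return stats.defects_found / stats.tasks_completed


def roi_multiple(baseline: SprintStats, pilot: SprintStats) -> float:
    """How many times more work per hour the pilot delivered."""
    return throughput(pilot) / throughput(baseline)


# Made-up sample data: a human-only baseline sprint and a Claude-assisted pilot.
baseline = SprintStats(hours_spent=80, tasks_completed=10, defects_found=4)
pilot = SprintStats(hours_spent=40, tasks_completed=12, defects_found=3)

multiple = roi_multiple(baseline, pilot)
print(f"ROI multiple: {multiple:.2f}x")
print(f"Defect rate: {defect_rate(baseline):.2f} -> {defect_rate(pilot):.2f}")
print("Scale up" if multiple >= 2.0 else "Keep piloting")
```

Swap in your own sprint numbers; the 2x threshold mirrors the rule of thumb above and can be adjusted.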

As platforms like GitHub lead adoption, expect Claude 4 to redefine team workflows industry-wide.

Sonnet 4 vs Opus 4.1: Strategic Model Selection

Development teams waste budget and performance by using the wrong Claude model for their specific use cases. This leads to either overspending or underwhelming results.

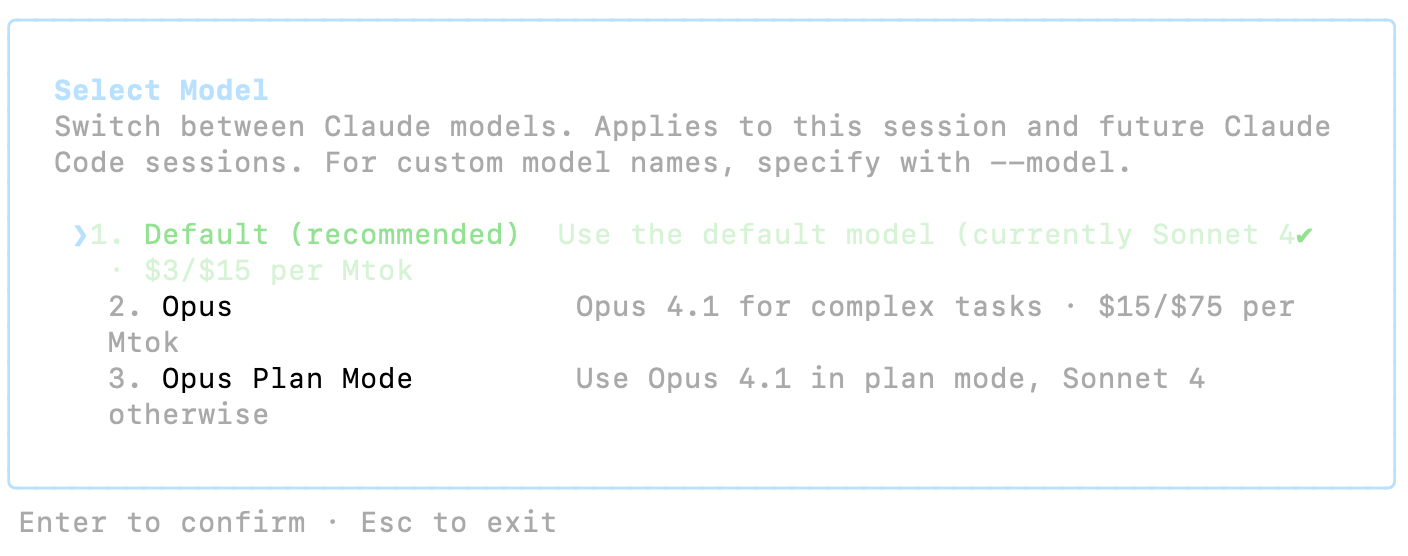

Choose Sonnet 4 for daily development at $3/$15 per million tokens, and Opus 4.1 for complex reasoning at $15/$75 when accuracy justifies 5x cost. Check out the pricing doc.
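To see what the price gap means for a concrete workload, here is a minimal sketch that applies the per-million-token prices quoted above. The dictionary keys are just labels for this example (not official API model identifiers), and the token counts are made up.

```python
# (input price, output price) in USD per million tokens, as quoted above.
# The keys are illustrative labels, not official API model IDs.
PRICES = {
    "sonnet-4": (3.00, 15.00),
    "opus-4.1": (15.00, 75.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of a workload in USD for the given model label."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical monthly workload: 2M input tokens, 0.5M output tokens.
sonnet = cost_usd("sonnet-4", 2_000_000, 500_000)
opus = cost_usd("opus-4.1", 2_000_000, 500_000)

print(f"Sonnet 4: ${sonnet:.2f}")
print(f"Opus 4.1: ${opus:.2f}")
print(f"Opus / Sonnet ratio: {opus / sonnet:.1f}x")
print(f"Savings with Sonnet: {(1 - sonnet / opus) * 100:.0f}%")
```

Because both the input and output prices differ by the same factor, the ratio stays at 5x regardless of the input/output mix.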

Why does model selection matter in fast-paced product work? We often default to the priciest option, assuming it guarantees results. In reality, that drains budgets without matching needs.

Sonnet 4 handles most tasks with speed and efficiency, scoring close to Opus 4.1 on benchmarks like 72.7% on SWE-bench Verified. Opus 4.1 pulls ahead at 74.5% for intricate problems, per Anthropic specs.

In my observations, startups using Sonnet for prototyping cut costs by 80% while maintaining output quality.

What separates the two in practice?

Use Sonnet 4 for:

Quick iterations: Code reviews, bug fixes, simple agents.

High-volume work: Daily scripting, data analysis.

Cost-sensitive projects: Where speed trumps marginal accuracy.

Switch to Opus 4.1 for:

Deep reasoning: Multi-step planning, large repos.

High-stakes tasks: Where errors cost more than the premium.

Agent orchestration: Leading sub-agents in complex flows.
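The two lists above can be turned into a simple routing rule. This is only a sketch of the heuristic as described here: the `Task` fields and the `pick_model` helper are hypothetical names, and the returned strings are labels rather than official API model IDs.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A unit of work with flags mirroring the criteria above (hypothetical)."""
    description: str
    multi_step_planning: bool = False
    large_repo: bool = False
    high_stakes: bool = False
    orchestrates_subagents: bool = False


def pick_model(task: Task) -> str:
    """Return 'opus-4.1' if any deep-reasoning criterion applies, else 'sonnet-4'."""
    needs_opus = (
        task.multi_step_planning
        or task.large_repo
        or task.high_stakes
        or task.orchestrates_subagents
    )
    return "opus-4.1" if needs_opus else "sonnet-4"


tasks = [
    Task("Fix a typo in the README"),
    Task("Review a small pull request"),
    Task("Plan a migration across a large monorepo", multi_step_planning=True, large_repo=True),
    Task("Patch the payment reconciliation job", high_stakes=True),
]

for task in tasks:
    print(f"{pick_model(task):9} <- {task.description}")
```

Defaulting to Sonnet and escalating only on explicit signals keeps the cheaper model as the baseline, which matches the cost argument in this section.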

Teams report 2x productivity with this mix, like in Continue.dev where Opus plans and Sonnet builds.

How do you decide the ROI threshold?

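You need to calculate. At the list prices above, Opus 4.1 costs $12 more per million input tokens and $60 more per million output tokens than Sonnet 4. If your error fixes cost $100/hour and Opus reduces errors by 25%, the premium is justified once that reduction saves more engineering time than the extra token spend, which works out to roughly 0.72 hours for a workload of one million input and one million output tokens.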
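Here is a minimal sketch of that break-even check, using the list prices quoted earlier. The function names, the workload size, and the "4 hours currently spent fixing errors" figure are assumptions chosen for illustration.

```python
# USD per million tokens, from the pricing quoted earlier in this post.
SONNET_IN, SONNET_OUT = 3.00, 15.00
OPUS_IN, OPUS_OUT = 15.00, 75.00


def opus_premium_usd(input_mtok: float, output_mtok: float) -> float:
    """Extra spend on Opus vs Sonnet for a workload measured in millions of tokens."""
    return input_mtok * (OPUS_IN - SONNET_IN) + output_mtok * (OPUS_OUT - SONNET_OUT)


def breakeven_hours(input_mtok: float, output_mtok: float, hourly_rate: float) -> float:
    """Engineering hours Opus must save to pay for its premium."""
    return opus_premium_usd(input_mtok, output_mtok) / hourly_rate


# Hypothetical workload: 1M input + 1M output tokens, fixes cost $100/hour.
hours_needed = breakeven_hours(1.0, 1.0, hourly_rate=100.0)

# Assumption: the team currently spends 4 hours fixing errors on this workload,
# and Opus removes 25% of that effort.
hours_saved = 4.0 * 0.25

print(f"Break-even: {hours_needed:.2f} hours saved")
print(f"Estimated hours saved: {hours_saved:.2f}")
print("Opus pays for itself" if hours_saved > hours_needed else "Stay on Sonnet")
```

The result is sensitive to the input/output mix, since output tokens carry most of the premium, so plug in the token split from your own usage logs.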

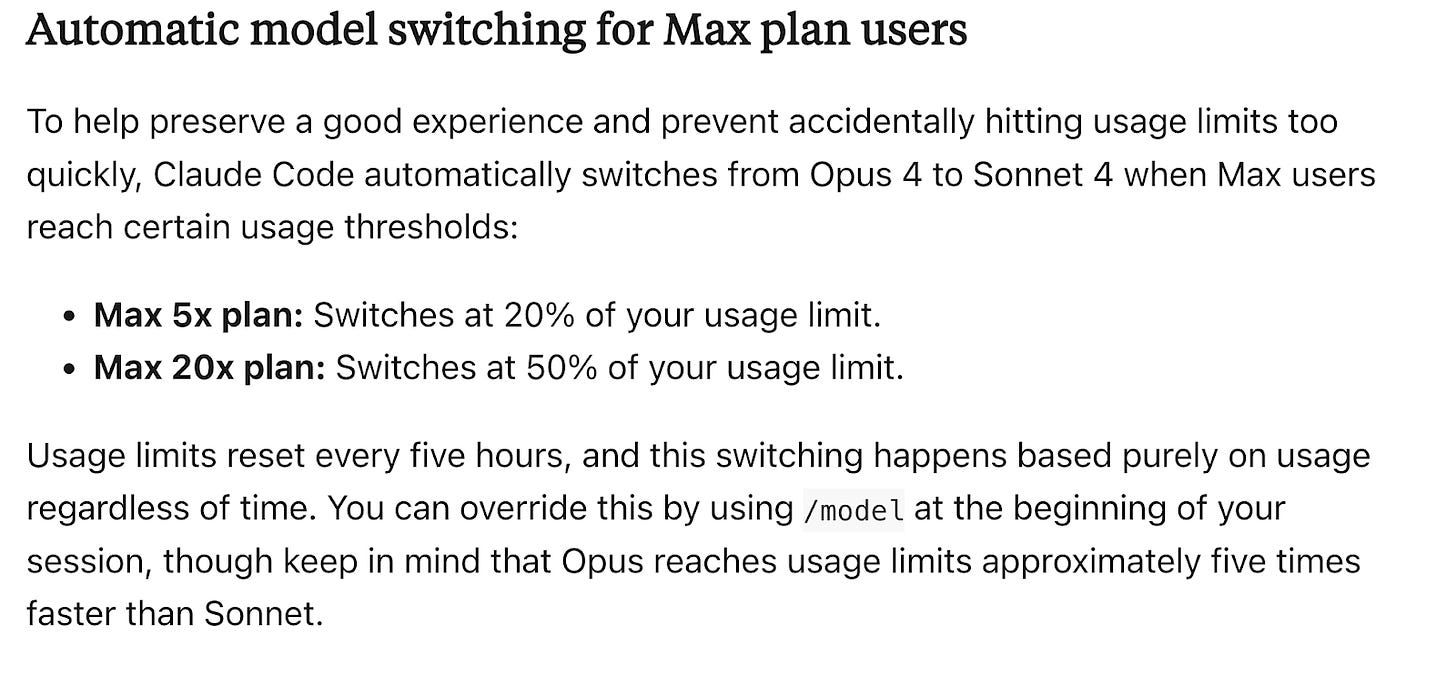

In Claude Code, enable auto-switching: It detects complexity and routes to Opus only when needed.

Test this. Run a sprint with hybrid mode and log savings.

Prototyping Success Stories: Whiteboard to Production

Product teams lose competitive advantage when prototyping takes weeks instead of hours, delaying market validation and investor presentations.

Claude 4 reduces prototyping time significantly through autonomous coding. It enables teams to move from concept sketches to working demos in single sessions.

Why do long prototyping cycles hurt innovation?

I watch founders sketch ideas on whiteboards, then wait days for code, missing chances to test with users.

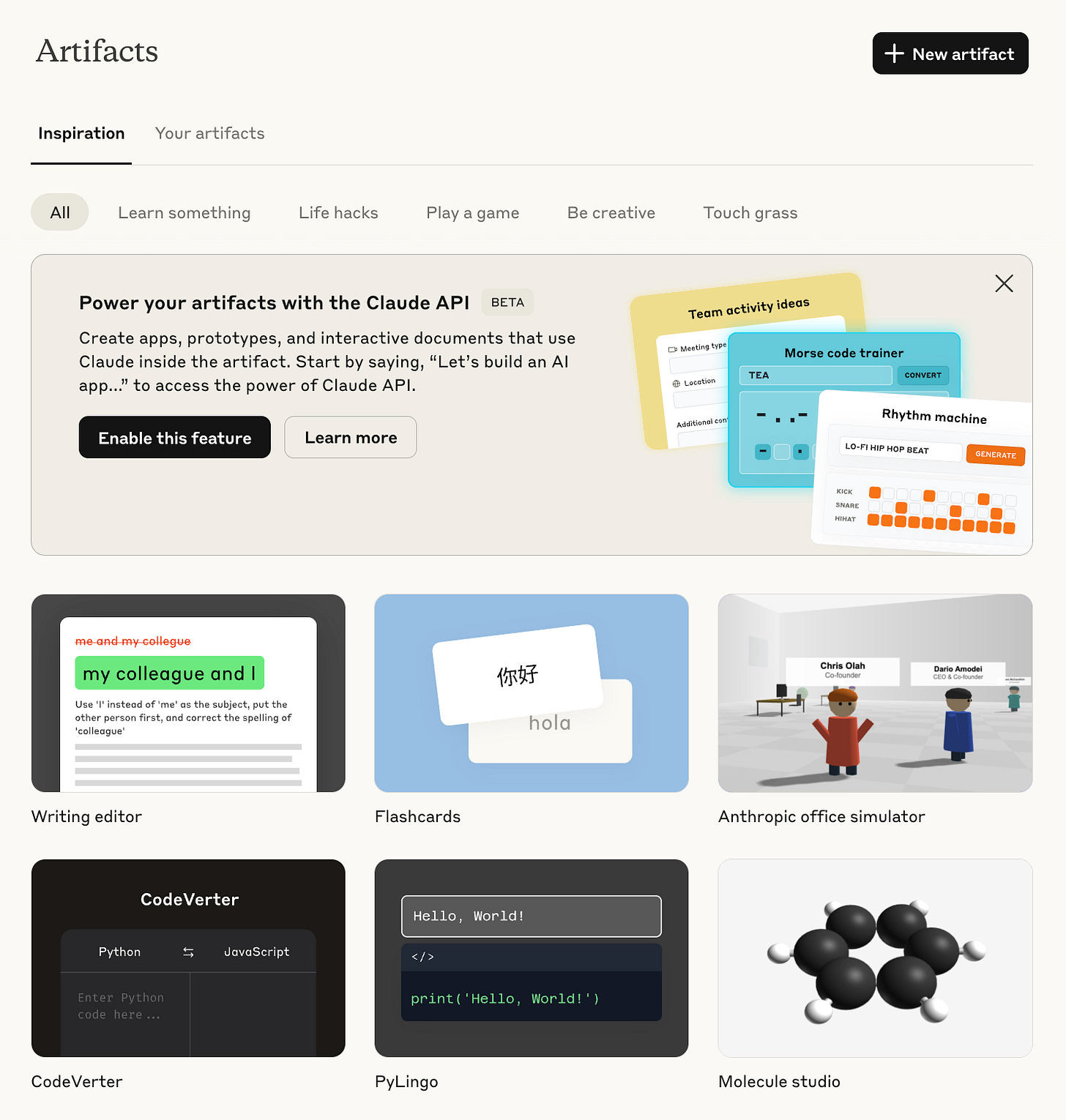

Claude 4 delivers faster development cycles, turning whiteboard concepts into working apps via its Artifacts system for instant previews.

In my view, this allows non-coders to build MVPs pretty fast.

How does this scale across stages?

From seed ideas to enterprise tools, Claude handles multi-stage growth. It can prototype UIs for startups or full systems for teams.

Ask yourself. Can AI code a dashboard from a screenshot? Yes. Check out this post from Julian Goldie.

What steps make this real?

Sketch your concept: Upload to Claude for initial code generation.

Design your prompt: Write your prompts, evaluate them on various use cases, and iterate on them. Try Adaline.

Iterate with Artifacts: Preview and refine in one session.

Validate fast: Deploy the demo and gather user feedback the same day.

This builds trust through quick wins, much like validating assumptions in a lean loop. Check out Lenny’s Newsletter on AI prototyping.

Claude Code: Your Agentic Teammate in the Command Line

Developers lose productivity switching between AI chat interfaces and their actual development environment, breaking focus and slowing iteration cycles.

Claude Code integrates directly into terminal workflows, providing autonomous coding assistance without context switching, maintaining flow state during development.

Why does context switching kill developer momentum?

I see engineers copy-pasting code from browsers back to terminals, losing precious minutes each time.

Claude Code eliminates this by living in your terminal, with git integration for commits and full file system access for edits. It maintains multi-file awareness across your project structure, understanding dependencies without manual uploads.

In my experience, this setup turns AI into a true teammate that handles full loops autonomously.

Check out these blogs/resources on Claude Code: