Do We Have Data to Train New AI?

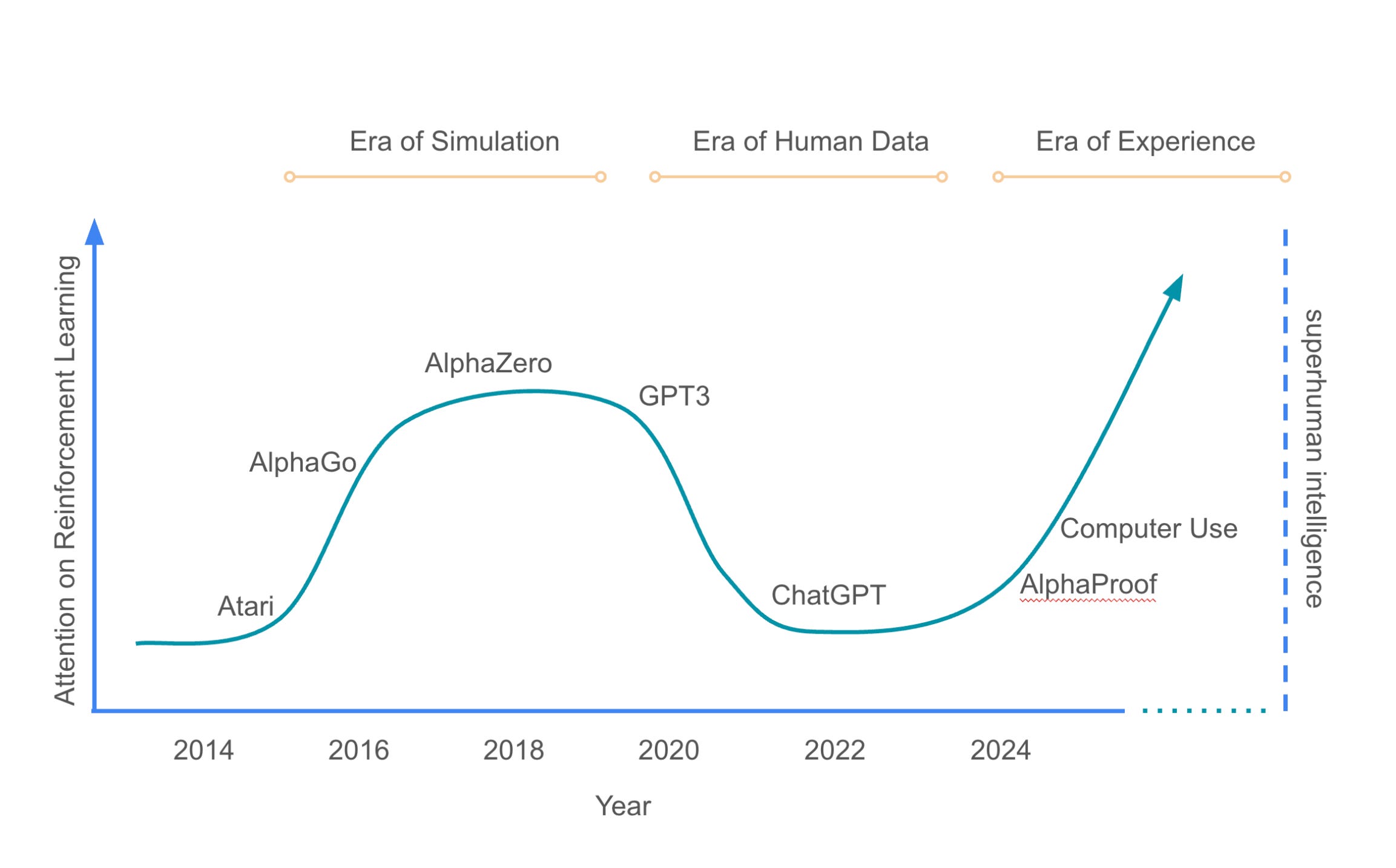

From the era of simulation to the era of human data to the era of experience.

The Data Well Isn’t Dry, but the Pump Must Change

AI systems have grown by consuming nearly everything humans have written, said, or posted—but that supply is flattening. To go beyond replication, models must now learn from experience, not just data scraped from the past.

Most AI products today are built on the “era of human data”: models trained on what people have already written.

This worked well while the internet was fresh fuel. But humans generate new content more slowly than models consume it. That means we’re approaching a ceiling, not just in volume but in novelty, because genuinely new human ideas take years to emerge.

The next wave will be built on experience: AI systems learning through interaction, not imitation.

For product teams, this changes how we think about data collection, training, and user interfaces. It also changes how we define progress.

Brad Lightcap, COO at OpenAI, emphasizes deployment—building tools people actually want, based on real usage signals. It is more than training better models; it’s about aligning product goals with real-world outcomes.

This matters because the tools you ship now will shape how your users—humans and machines—learn, adapt, and make decisions.

Hitting the “Human Data Ceiling”

Most modern LLMs are trained on a simple premise: absorb everything humans have ever written—books, articles, transcripts, code. This “era of human data” gave us GPT-3, GPT-4, and massive progress. But the pipeline is flattening.

Demis Hassabis says he’s “not very worried” about running out of data—but adds a condition: future models will need real-world grounding to make synthetic data useful. In other words, models must go beyond imitation and start learning from consequences.

That means interacting with the world, generating their own experiences, and adjusting based on what actually works—not what humans think might work.

David Silver argues the same. Even high-quality synthetic data hits a ceiling if it’s rooted in the same human-origin corpus. A model that’s anchored in imitation will plateau. You need feedback loops grounded in reality.

Consider AlphaZero: not trained on human games, and not limited by them. It learned Go and chess from scratch through self-play, surpassing even AlphaGo.

Essentially, human data wasn’t just unnecessary—it was actively limiting.

The Bitter Lesson: Human Labels Can Cap Performance

RLHF made LLMs more helpful, but it offers only shallow grounding.

Grounding is shallow when human raters judge an output before its consequences play out. As David Silver puts it, it is like “... rating a recipe without tasting the cake”.

That feedback is safe, but misleading.

Think about it for a moment: how can we judge a recipe just by looking at it or reading it, before we have tasted the result?

That is why AlphaProof works: it only gets a reward when a proof is formally verified, not when it “sounds right.” Grounded feedback changes the learning loop. It helps systems discover “alien ideas” like AlphaGo’s Move 37: solutions no human saw coming.

If you have watched the AlphaGo documentary, you know what I am talking about.

Move 37 wasn’t just surprising—it broke convention.

AlphaGo placed a stone on the fifth line of the board, a move that defied Go tradition and human logic. No professional player would have considered it. But it worked. It reorganized the board in a way that changed the trajectory of the match and ultimately led to AlphaGo’s win.

If AlphaGo had been trained on human preferences—judging only what looked plausible to a Go master—it likely never would have discovered it.

Move 37 is the symbol of what becomes possible when systems learn from their own experience rather than human imitation. It didn’t just challenge human intuition—it surpassed it.
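To make the difference between rating an output and verifying it concrete, here is a minimal Python sketch. It is an analogy, not how AlphaProof or RLHF is implemented: the task (an absolute-value function), the keyword-based `rater_reward`, and the test-based `verified_reward` are hypothetical stand-ins, with unit tests playing the role a proof checker plays for AlphaProof.

```python
from typing import Callable

# Hypothetical task: write solve(x) that returns the absolute value of x.
TEST_CASES = [(-3, 3), (0, 0), (5, 5)]

def rater_reward(source: str) -> float:
    """Shallow grounding: score how plausible the code *looks* (keyword heuristics)."""
    checks = ("def " in source, "return" in source, "abs" in source or "-x" in source)
    return sum(checks) / len(checks)

def verified_reward(source: str) -> float:
    """Grounded feedback: reward 1.0 only if the code actually passes every test."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # runs the candidate; only acceptable for trusted toy input
        candidate: Callable[[int], int] = namespace["solve"]
        return 1.0 if all(candidate(x) == want for x, want in TEST_CASES) else 0.0
    except Exception:
        return 0.0

plausible_but_wrong = "def solve(x):\n    return -x  # looks like abs, fails for x > 0\n"
correct = "def solve(x):\n    return x if x >= 0 else -x\n"

for name, src in [("plausible_but_wrong", plausible_but_wrong), ("correct", correct)]:
    print(name, rater_reward(src), verified_reward(src))
# plausible_but_wrong 1.0 0.0
# correct 1.0 1.0
```

Both candidates look equally right to the rater, but only one survives verification. That gap is exactly what a reward grounded in outcomes closes.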

Visible Symptoms in Today’s LLM Roadmaps

Scaling up isn’t scaling returns.

Training costs are rising.

Performance gains are tapering.

What’s unlocking usage? Not raw model IQ, but ChatGPT’s UX. The interface shift mattered more than the model upgrade.

Before ChatGPT, OpenAI’s primary interface was an API and a completions-based playground. Users had to figure out how to prompt it, and many tried to “hack” it into a conversation. That behavior was the signal. It revealed an unstated desire: users didn’t just want predictions—they wanted to talk.

OpenAI responded by building ChatGPT, a genuinely conversational interface. They taught the model to follow instructions and respond naturally. The result wasn’t just a usability improvement.

It was the unlock.

Adoption surged not because the model was dramatically smarter (ChatGPT launched on a GPT-3.5-series model, months before GPT-4 arrived), but because ChatGPT was more usable. It reframed what AI could be for most people. As Brad Lightcap put it, this was the moment AI arrived at scale: not in theory, but in product.

In regulated industries such as health and education, red tape slows deployment regardless of model power. Meanwhile, OpenAI saw a disproportionate usage spike after cutting prices. Intelligence is becoming “too cheap to meter,” and demand is now outpacing the supply of new human data.

The takeaway is that we’ve maxed out what imitation alone can give us. It’s time to build systems that learn by doing. Systems that generate their own data, verify it through real-world impact, and never stop improving.

New Fuel Sources Emerging

AI is transitioning from static data to dynamic experience. Instead of scraping the past, we’re starting to generate the future.

Synthetic Text: Self-Play, Data Augmentation, and Copy-Editing Risks

Synthetic data has value—but it’s not infinite.

AlphaZero showed that self-play, where an agent learns by interacting with itself, can drive performance far beyond what human examples alone can teach. These systems create data “appropriate to the level they’re at,” which means they continuously scale with skill. That’s powerful.

But there’s a catch. There always is.

Synthetic data that mimics human output still inherits human blind spots. As Demis Hassabis notes, if models are anchored in human language, they’ll mostly produce “humanlike responses.”

That’s fine for fluency—but not for discovery.

The goal is not just more text, but experience-based data that reflects exploration and adaptation, not copy-editing.

Research can only move forward if we’re willing to leave the comfort of convention. The “era of human data” gave us the base. But to go further—to reach superintelligence—we need to enter what David Silver calls the “era of experience.”

AlphaZero didn’t learn chess by studying grandmasters. It learned through trial and error—millions of games against itself. No tutorials. No examples. Just outcomes. It surpassed every system trained on human data.

Why? Because imitating human play was actively limiting the systems trained that way.

This is the bitter lesson of AI: general methods that learn from consequences and scale with computation beat carefully encoded human knowledge.

You don’t get Move 37—a creative, alien, fifth-line Go move—by imitating a master. You get it by letting the machine discover what we never would have tried.

Experience-first research doesn’t take decades. It scales. It iterates. It surprises us. But only if we’re willing to stop asking, “Does this look right to a human?” and start asking, “Did this actually work?”

Multimodal Torrents: Images, Code, Speech, Sensor Logs

Multimodal models are moving fast. AI coding tools like Windsurf and Cursor are already making software engineers far more productive, by some accounts up to 10×.

I would emphasize three pillars that make you productive when you use AI:

Knowing your domain

Prompt engineering

Aligning AI to your goals

Domain knowledge matters most: if you know your field, you can ask the AI sharper questions, and that in turn sharpens your own critical thinking.

As these models take on more cognitive tasks, something else becomes more important—not less: human judgment. The ability to decide what problem to solve, not just how to solve it, is now the differentiator.

Brad Lightcap points out that the rewards increasingly go to those who can figure out “what good looks like” before the AI even starts optimizing.

That’s where critical thinking comes in.

Multimodal systems can process information across channels—but they don’t know which questions matter. Humans do. It takes judgment to filter noise, frame problems, and set meaningful goals. The role of the product leader or engineer isn’t disappearing—it’s evolving into something sharper: a navigator, not just an operator.

Ronnie Chatterji puts it plainly:

Emotional Quotient (EQ), decision-making, and clear thinking will be at a premium. These are the skills that can’t be fine-tuned into a model. You need to practice them.

What Product & Engineering Teams Should Do Right Now

AI is shifting fast.

Product and engineering teams need to adjust now to stay relevant.

Audit: Map Your Data Dependencies and Expiry Dates

Start by asking: what data do we rely on, and how long will it stay useful?
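One lightweight way to start this audit is to keep an inventory of every data source, record a judgment of how long each stays representative, and flag what is going stale. The sketch below assumes a hypothetical `DataSource` record with a team-chosen `shelf_life_days`; the source names and dates in the usage example are made up.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class DataSource:
    name: str
    kind: str              # e.g. "human-written", "synthetic", "usage telemetry"
    last_refreshed: date
    shelf_life_days: int   # team's judgment of how long this data stays representative

    def expires_on(self) -> date:
        return self.last_refreshed + timedelta(days=self.shelf_life_days)

def audit(sources: list[DataSource], today: date, warn_days: int = 30) -> list[tuple[str, str]]:
    """Return (name, status) pairs, soonest expiry first."""
    report = []
    for src in sorted(sources, key=lambda s: s.expires_on()):
        days_left = (src.expires_on() - today).days
        if days_left < 0:
            status = "expired"
        elif days_left <= warn_days:
            status = f"expires in {days_left} days"
        else:
            status = "ok"
        report.append((src.name, status))
    return report

inventory = [
    DataSource("support-ticket archive", "human-written", date(2024, 1, 1), 365),
    DataSource("onboarding telemetry", "usage telemetry", date(2024, 11, 20), 30),
    DataSource("synthetic FAQ pairs", "synthetic", date(2024, 6, 1), 90),
]
for name, status in audit(inventory, today=date(2024, 12, 1)):
    print(f"{name}: {status}")
# synthetic FAQ pairs: expired
# onboarding telemetry: expires in 19 days
# support-ticket archive: expires in 30 days
```

The shelf lives are the hard part: they encode how fast the team believes usage patterns and user needs drift, and they deserve revisiting as the product changes.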

Usage patterns evolve. So do user needs.

OpenAI constantly studies how people use its products—and how those patterns shift as the tech advances. Your product roadmap should do the same.

OpenAI started ChatGPT with one idea: give users a conversational interface. With that interface in place, much of the work now is integrating new and better models into it, which gives users:

Intelligence in their hands to explore new things.

Opportunity to learn.

Opportunity to build products.

Opportunity to create wealth.

Opportunity to solve unsolved and unseen problems.

Also, zoom out.

AI is not just a product shift—it’s an economic shift.

OpenAI’s Chief Economist tracks how AI affects labor markets and firm productivity. Teams that understand these trends can build tools that move the needle, not just chase hype.

Design: Bake Continual-Learning Loops into Product UX

If AI is to learn from experience, your product must let it experience things.

Build interfaces where agents can operate like teammates—whether it’s inside an IDE, a document, or a support ticket. These loops let models adapt in real time, not wait for retraining.

Good UX here is simple: shorten the path from idea to outcome. Make room for user input and feedback.

Systems that learn continuously improve continuously.

Collect user feedback and pick out important cues and features that represent users’ experience in your product. This isn’t just about form fields or five-star ratings—it’s about grounding the system in real-world consequences. Did the recommendation lead to a conversion? Did the generated content improve engagement? That’s grounded feedback.

To enable this, identify signals that reflect actual outcomes—click-through rates, time on page, successful task completion, even user frustration or delight. These can be mapped to reward signals for the system to optimize against. A user saying “make this better” might become a shift in metric weight—from speed to quality, or from relevance to novelty.
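As a minimal sketch, assume each outcome signal has already been normalized to a score from 0 to 1 where higher is better. The reward can then be a weighted average, and a request like “make this better” can be modeled as moving weight from one signal to another. The signal names, weights, and the `reward`/`shift_weight` helpers are hypothetical.

```python
def reward(signals: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of outcome signals (each in [0, 1], higher is better)."""
    total = sum(weights.values())
    return sum(w / total * signals.get(name, 0.0) for name, w in weights.items())

def shift_weight(weights: dict[str, float], source: str, target: str, amount: float) -> dict[str, float]:
    """Return new weights with up to `amount` moved from `source` to `target`."""
    moved = min(amount, weights.get(source, 0.0))
    updated = dict(weights)
    updated[source] = updated.get(source, 0.0) - moved
    updated[target] = updated.get(target, 0.0) + moved
    return updated

# Hypothetical normalized signals for one interaction: fast, but mediocre quality.
signals = {"speed": 0.9, "quality": 0.4, "task_completed": 1.0}
weights = {"speed": 0.5, "quality": 0.2, "task_completed": 0.3}
print(round(reward(signals, weights), 2))  # 0.83

# The user says "make this better": favor quality over speed.
weights = shift_weight(weights, "speed", "quality", 0.3)
print(round(reward(signals, weights), 2))  # 0.68
```

The same interaction scores lower after the shift because it was fast but low quality, which is the pressure the user's feedback was meant to create.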

Start small: a clearly defined goal like “help users finish signup faster” can be translated into verifiable metrics like drop-off rate or average time-to-convert. Then, observe how those change as the model interacts with users. This is how you turn passive telemetry into active learning fuel.
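For the signup example, both metrics can be computed directly from session logs, and the goal becomes a check anyone can rerun. This sketch assumes a hypothetical log format of `(user_id, started_at_s, completed_at_s)` tuples, where `completed_at_s` is `None` for users who dropped off; the sessions are invented for illustration.

```python
from statistics import mean
from typing import Optional

Session = tuple[str, float, Optional[float]]  # (user_id, started_at_s, completed_at_s or None)

def signup_metrics(sessions: list[Session]) -> tuple[float, Optional[float]]:
    """Return (drop_off_rate, average seconds to convert) for a batch of signup sessions."""
    if not sessions:
        return 0.0, None
    durations = [end - start for _, start, end in sessions if end is not None]
    drop_off_rate = 1 - len(durations) / len(sessions)
    avg_time_to_convert = mean(durations) if durations else None
    return drop_off_rate, avg_time_to_convert

def goal_met(before: list[Session], after: list[Session]) -> bool:
    """Verifiable check for 'help users finish signup faster': both metrics must improve."""
    drop_before, time_before = signup_metrics(before)
    drop_after, time_after = signup_metrics(after)
    if time_before is None or time_after is None:
        return False
    return drop_after < drop_before and time_after < time_before

before = [("a", 0, 120), ("b", 0, None), ("c", 0, 180), ("d", 0, None)]
after = [("a", 0, 90), ("b", 0, 60), ("c", 0, None), ("d", 0, 90)]

for label, batch in [("before", before), ("after", after)]:
    rate, avg = signup_metrics(batch)
    print(f"{label}: drop-off {rate:.0%}, avg time-to-convert {avg:.0f}s")
print("goal met:", goal_met(before, after))
# before: drop-off 50%, avg time-to-convert 150s
# after: drop-off 25%, avg time-to-convert 80s
# goal met: True
```

Requiring both metrics to improve is one design choice; a team might instead accept a trade-off, but should decide that up front rather than after seeing the numbers.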

Like AlphaZero playing millions of games, your system improves when it's allowed to experiment—and knows when it wins. Real learning happens when the model doesn't just complete a task, but sees what worked, what failed, and adapts accordingly. That’s experience worth collecting.
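A classic, minimal way to let a system experiment and know when it wins is a multi-armed bandit. The sketch below is an epsilon-greedy bandit choosing between hypothetical onboarding variants, rewarded only when a simulated user actually converts. It is a far simpler technique than AlphaZero's self-play and search, used here only to show the explore, observe, adapt loop; the variant names and conversion rates are invented, and a real product would replace the simulator with live outcomes.

```python
import random

class EpsilonGreedy:
    """Minimal epsilon-greedy bandit: experiment with probability epsilon, otherwise exploit."""

    def __init__(self, arms: list[str], epsilon: float = 0.1, seed: int = 0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.counts = {arm: 0 for arm in self.arms}
        self.values = {arm: 0.0 for arm in self.arms}  # running mean reward per arm
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)  # experiment
        return max(self.arms, key=lambda arm: self.values[arm])  # exploit best estimate

    def update(self, arm: str, reward: float) -> None:
        # Incremental mean: no need to store the full reward history.
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]

# Hypothetical onboarding variants with true conversion rates the system cannot see.
TRUE_RATES = {"short_form": 0.35, "long_form": 0.05, "guided_tour": 0.15}
env = random.Random(1)

bandit = EpsilonGreedy(list(TRUE_RATES))
for _ in range(5000):
    arm = bandit.choose()
    converted = env.random() < TRUE_RATES[arm]  # grounded outcome: did the user convert?
    bandit.update(arm, 1.0 if converted else 0.0)

print({arm: round(v, 2) for arm, v in bandit.values.items()})
print(bandit.counts)
```

Most traffic ends up on the variant that actually converts best, while the occasional random choice keeps testing whether the others have improved. The model updates after every outcome instead of waiting for a retraining cycle.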

Guardrail: Reward Shaping & Safety Reviews for Synthetic and Agent Data

With autonomy comes risk. Reward functions must adapt. Human input is key, not just for rating outputs but for flagging misalignment early. If optimizing a metric starts causing user distress, the system should change course.

Safety isn’t a feature. It’s the foundation.
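One simple way to make “if a metric drives distress, change course” operational is a penalty term on the reward plus a human-review queue. This is a basic penalty-style form of reward shaping, not a complete safety system; the `distress` signal, the threshold, the penalty weight, and the `GuardedReward` class are hypothetical and would need to be chosen and reviewed by the team.

```python
from dataclasses import dataclass, field

@dataclass
class GuardedReward:
    """Task reward minus a penalty for user distress above a threshold.

    `distress` is a hypothetical signal in [0, 1], e.g. the share of sessions
    with complaints or rage-clicks. Episodes over the threshold are queued
    for human safety review.
    """
    distress_threshold: float = 0.2
    penalty_weight: float = 2.0
    review_queue: list[str] = field(default_factory=list)

    def __call__(self, episode_id: str, task_reward: float, distress: float) -> float:
        excess = max(0.0, distress - self.distress_threshold)
        if excess > 0:
            self.review_queue.append(episode_id)  # flag for a human, don't just re-score
        return task_reward - self.penalty_weight * excess

guard = GuardedReward()
calm = guard("ep-1", task_reward=0.8, distress=0.05)
pushy = guard("ep-2", task_reward=0.9, distress=0.5)
print(round(calm, 2), round(pushy, 2), guard.review_queue)
# 0.8 0.3 ['ep-2']
```

The episode with the higher raw task score ends up with the lower reward because it achieved that score by distressing users, and it is also routed to a person rather than silently absorbed into training.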

The Era of Experience: Beyond Human Data Limitations

AI is approaching the ceiling of human-generated data. Models like GPT-4 were trained on a vast share of what humans have written, and that approach is now plateauing because human content creation can't keep pace with AI consumption. The breakthrough lies in the transition to an “era of experience,” in which AI systems learn through real-world interaction rather than imitation.

AlphaZero exemplifies this shift: it mastered chess and Go without human training data. Experience-driven systems in the same family also found moves like AlphaGo's famous “Move 37,” which no human expert would have attempted. For product teams, this means building systems with continuous feedback loops, grounded rewards based on actual outcomes, and interfaces that enable AI to learn from consequences, not just preferences.

Experience-driven AI doesn't just replicate; it innovates.