Writing Effective Tool Calling Functions

Practical patterns for designing schemas and aligning prompts for product builders and AI PMs.

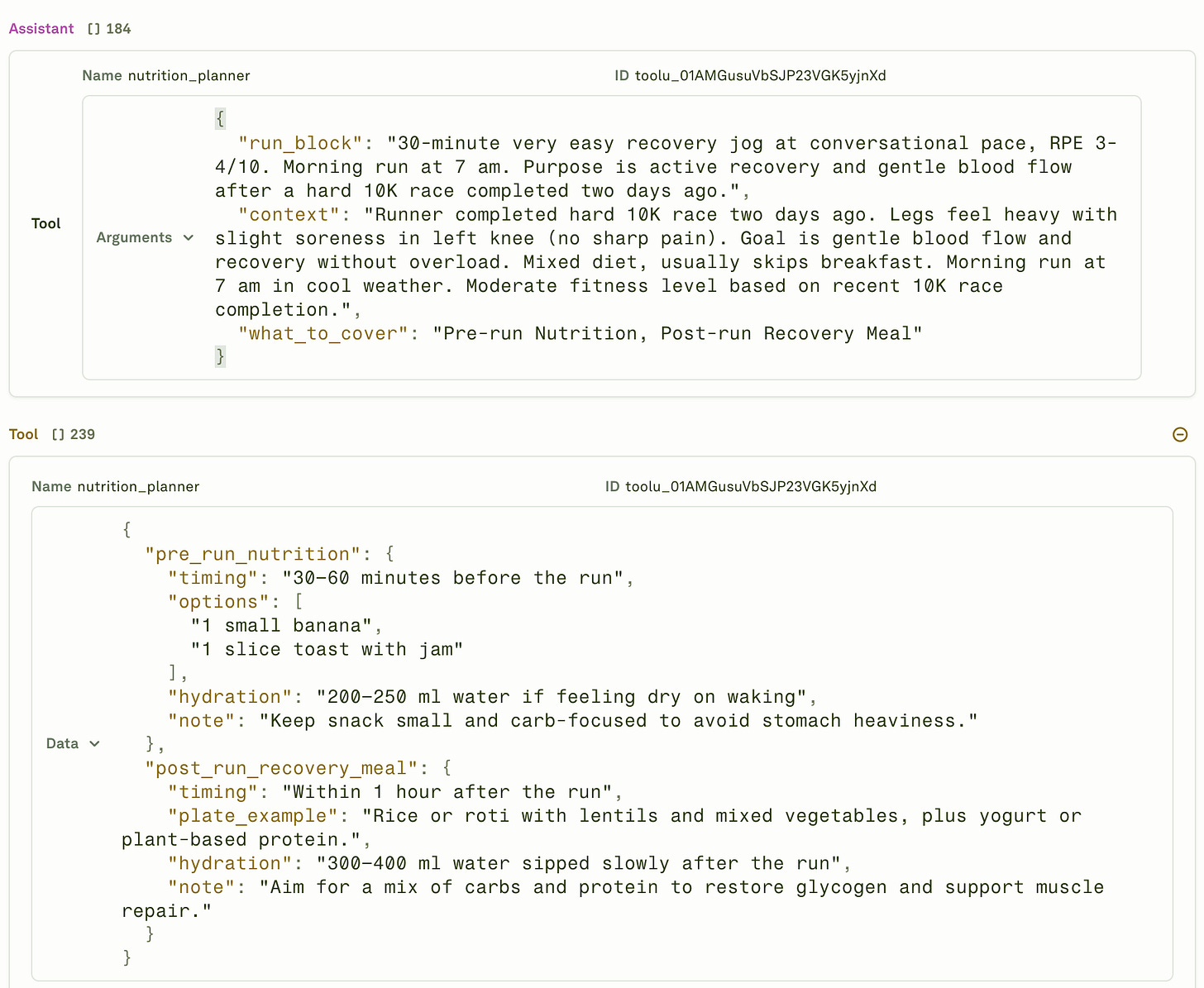

Last week, I wrote about “Building products using tool calling.” Today, I want to discuss some practical ways to write an effective tool-calling function. I also want to showcase Adaline, a single platform for designing prompts and tool-calling functions before you deploy them to your app.

You can do a lot with Adaline if you are building products with LLMs. It is a single platform to iterate on, evaluate, deploy, and monitor your LLMs and prompts. In this blog, however, I will use Adaline to explore two important themes: the tool schema as a contract, and prompt–schema alignment.

Tool Schema as Contract

So, why do we need effective tool-calling schemas in the first place?

Ambiguous tool arguments lead to flaky calls and silent failures. To address these issues, you need to treat the tool schema as a product contract with strict types, required fields, format checks, and no extraneous fields. Adaline expects machine-checked schemas attached to tool definitions, which tightens determinism and makes audits straightforward.

What does that mean?

Essentially, when defining a tool schema, treat it as a typed interface that constrains model-produced arguments to valid shapes.

In practice, define the inputs with JSON Schema, bind the schema to the tool, and validate the model's arguments against it before execution. This lets downstream services and APIs trust their inputs. Here is a JSON example:

```json
{
  "type": "function",
  "definition": {
    "schema": {
      "name": "get_free_busy",
      "description": "Return free/busy blocks for attendees in a time window.",
      "parameters": {
        "type": "object",
        "required": ["attendees", "window_start", "window_end", "organizer_tz"],
        "additionalProperties": false,
        "properties": {
          "attendees": {
            "type": "array",
            "minItems": 1,
            "items": {
              "type": "string",
              "format": "email"
            },
            "description": "List of attendee email addresses."
          },
          "window_start": {
            "type": "string",
            "format": "date-time",
            "description": "ISO 8601 start of search window."
          },
          "window_end": {
            "type": "string",
            "format": "date-time",
            "description": "ISO 8601 end of search window."
          },
          "organizer_tz": {
            "type": "string",
            "description": "IANA time zone of the organizer, e.g., America/Los_Angeles."
          }
        }
      },
      "strict": true
    }
  }
}
```

You will see from the example above that the tool schema contains:

- A non-empty array of email-formatted `attendees`.
- ISO 8601 `window_start` and `window_end` timestamps.
- An IANA `organizer_tz`.
- `additionalProperties: false`, which blocks invented fields.
- `"strict": true`, which enforces validation before any side effects.
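To make “validate before execution” concrete, here is a minimal Python sketch of a hand-written check that mirrors the `get_free_busy` contract. It is an illustration, not Adaline's validator; in practice you would more likely run a JSON Schema validator library against the schema itself. The function names are hypothetical, the email regex is deliberately simplified, and the time-zone check assumes Python 3.9+ (`zoneinfo.available_timezones()`), falling back to a rough shape check when no tz database is installed.

```python
import re
from datetime import datetime
from zoneinfo import available_timezones

# Field names mirror the get_free_busy schema above.
REQUIRED = ("attendees", "window_start", "window_end", "organizer_tz")
ALLOWED = set(REQUIRED)  # additionalProperties: false, so nothing else is allowed

# Simplified email check; a full "format": "email" validator is stricter.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
# Rough IANA-name shape, used only when no tz database is available.
TZ_SHAPE_RE = re.compile(r"[A-Za-z_]+(/[A-Za-z0-9_+\-]+)+|UTC")
KNOWN_ZONES = available_timezones()  # empty set if no tz database is installed


def is_iso_datetime(value):
    """True for ISO 8601 date-times that carry an explicit UTC offset."""
    if not isinstance(value, str):
        return False
    try:
        # fromisoformat() before Python 3.11 rejects a trailing "Z", so normalize it.
        parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return False
    return parsed.tzinfo is not None


def is_iana_zone(value):
    """Look the name up in the tz database, or fall back to a shape check."""
    if not isinstance(value, str):
        return False
    if KNOWN_ZONES:
        return value in KNOWN_ZONES
    return TZ_SHAPE_RE.fullmatch(value) is not None


def validate_free_busy_args(args):
    """Return a list of problems; an empty list means the call may proceed."""
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    problems = [f"missing required field: {k}" for k in REQUIRED if k not in args]
    problems += [f"unexpected field: {k}" for k in args if k not in ALLOWED]
    if "attendees" in args:
        attendees = args["attendees"]
        if not isinstance(attendees, list) or not attendees:
            problems.append("attendees must be a non-empty array")
        else:
            problems += [f"invalid email: {a!r}" for a in attendees
                         if not (isinstance(a, str) and EMAIL_RE.fullmatch(a))]
    for key in ("window_start", "window_end"):
        if key in args and not is_iso_datetime(args[key]):
            problems.append(f"{key} must be an ISO 8601 date-time with offset")
    if "organizer_tz" in args and not is_iana_zone(args["organizer_tz"]):
        problems.append("organizer_tz must be an IANA time zone")
    return problems
```

An empty list means the arguments honor the contract; otherwise, the problems can be handed back to the model so it asks the user for corrected values instead of calling the tool.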

Details matter: the more precisely the schema pins down each field, the more reliably the LLM produces valid arguments.

You will gain more understanding of this schema as you read further.

Error taxonomy

It is also important to define the errors the tool can return. A small, canonical set of error codes speeds triage, guides retries, and keeps dashboards consistent.

VALIDATION_ERROR: A schema or format mismatch, such as missing required fields, non-ISO timestamps, invalid emails, or an unknown IANA time zone. Return 400 with retryable=false, and ask for a corrected value.

AUTH_SCOPE_DENIED: The calendar read scope is missing or the token is invalid. Return 403 with retryable=false; request the proper scope, or fall back to a cached read if one is available.

RATE_LIMITED: The provider returns 429 or 'Rate Limit Exceeded'. Return 429 with retryable=true; apply exponential backoff with jitter and cap the number of attempts.

UPSTREAM_TIMEOUT: The calendar API does not respond within the time budget. Return 504 with retryable=true; retry with backoff, or narrow the window or attendee set.
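The retry rules above can be sketched in a few lines of Python. This is a minimal sketch, assuming the tool call is wrapped in a zero-argument function `call` (hypothetical) that returns a dict carrying `"status": "ERROR"` and an `error_code` on failure; any other dict is treated as success. `call_with_backoff` and its parameters are hypothetical, and `sleep` is injectable so the backoff can be exercised without waiting.

```python
import random
import time

# Retryability per error code, taken from the taxonomy above.
RETRYABLE = {
    "VALIDATION_ERROR": False,
    "AUTH_SCOPE_DENIED": False,
    "RATE_LIMITED": True,
    "UPSTREAM_TIMEOUT": True,
}


def call_with_backoff(call, max_attempts=4, base_delay=0.5, max_delay=8.0,
                      sleep=time.sleep):
    """Call `call()` until it succeeds, returns a non-retryable error,
    or max_attempts is used up; the last result is always returned."""
    result = None
    for attempt in range(max_attempts):
        result = call()
        if result.get("status") != "ERROR":
            return result  # success
        if not RETRYABLE.get(result.get("error_code"), False):
            return result  # e.g. VALIDATION_ERROR: ask the user, don't retry
        if attempt < max_attempts - 1:
            # Exponential backoff with "full jitter": wait a random time
            # between 0 and min(max_delay, base_delay * 2**attempt) seconds.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    return result  # attempts exhausted; surface the last error
```

Full jitter spreads retries from many clients so they do not hit the provider in lockstep, and the attempt cap keeps a persistent outage from stalling the conversation.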

For instance, below is a sample error schema that can be added to the original tool-calling function:

```json
{
  "type": "function",
  …
      "strict": true,
      "x_error_shape": {
        "type": "object",
        "required": ["status", "error_code", "message", "retryable", "http_status"],
        "additionalProperties": false,
        "properties": {
          "status": { "type": "string", "const": "ERROR" },
          "error_code": {
            "type": "string",
            "enum": [
              "VALIDATION_ERROR",
              "AUTH_SCOPE_DENIED",
              "RATE_LIMITED",
              "UPSTREAM_TIMEOUT"
            ]
          },
          "message": { "type": "string", "minLength": 1 },
          "hint": { "type": "string" },
          "retryable": { "type": "boolean" },
          "http_status": { "type": "integer", "enum": [400, 403, 429, 504] }
        }
      },
      "x_error_guidance": {
        "VALIDATION_ERROR": {
          "http_status": 400,
          "retryable": false,
          "action": "Schema or format mismatch (missing required fields, non-ISO timestamps, invalid emails, or unknown IANA time zone). Ask for a corrected value."
        },
        "AUTH_SCOPE_DENIED": {
          "http_status": 403,
          "retryable": false,
          "action": "Calendar read scope missing or token invalid. Request proper scope or fall back to a cached read if available."
        },
        "RATE_LIMITED": {
          "http_status": 429,
          "retryable": true,
          "action": "Provider rate limit exceeded. Apply exponential backoff with jitter and cap attempts."
        },
        "UPSTREAM_TIMEOUT": {
          "http_status": 504,
          "retryable": true,
          "action": "Calendar API did not respond within budget. Retry with backoff, or narrow the window/attendee set."
        }
      }
    }
  }
}
```

This explicit error contract lets you trace the workflow and fix errors on the fly. (More on traces and spans in the coming blog.)
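A golden test for error shapes needs something to assert against. The Python sketch below, assuming the field names and status/retryable pairs shown in `x_error_shape` and `x_error_guidance`, builds error payloads from a single table and checks a payload against the shape by hand. `make_error` and `conforms_to_error_shape` are hypothetical names, and the final consistency check (status code matches retryability for that error code) is slightly stricter than `x_error_shape` itself.

```python
# (http_status, retryable) per error code, copied from x_error_guidance.
ERROR_GUIDANCE = {
    "VALIDATION_ERROR": (400, False),
    "AUTH_SCOPE_DENIED": (403, False),
    "RATE_LIMITED": (429, True),
    "UPSTREAM_TIMEOUT": (504, True),
}
SHAPE_KEYS = {"status", "error_code", "message", "hint", "retryable", "http_status"}
REQUIRED_KEYS = SHAPE_KEYS - {"hint"}  # "hint" is the only optional field


def make_error(error_code, message, hint=None):
    """Build an error payload; status and retryability come from one table
    so they cannot drift apart."""
    http_status, retryable = ERROR_GUIDANCE[error_code]
    error = {"status": "ERROR", "error_code": error_code, "message": message,
             "retryable": retryable, "http_status": http_status}
    if hint is not None:
        error["hint"] = hint
    return error


def conforms_to_error_shape(obj):
    """Hand-written check mirroring x_error_shape: required keys, no extras,
    const/enum values, and types."""
    if not isinstance(obj, dict):
        return False
    keys = set(obj)
    if not (REQUIRED_KEYS <= keys <= SHAPE_KEYS):
        return False
    code = obj["error_code"]
    return (
        obj["status"] == "ERROR"
        and isinstance(code, str) and code in ERROR_GUIDANCE
        and isinstance(obj["message"], str) and len(obj["message"]) >= 1
        and isinstance(obj.get("hint", ""), str)
        and isinstance(obj["retryable"], bool)
        and type(obj["http_status"]) is int  # excludes bools, which subclass int
        and (obj["http_status"], obj["retryable"]) == ERROR_GUIDANCE[code]
    )
```

Deriving both the payload and the check from the same table keeps the runtime errors, the schema, and the dashboards in agreement.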

Prompt–Schema Alignment

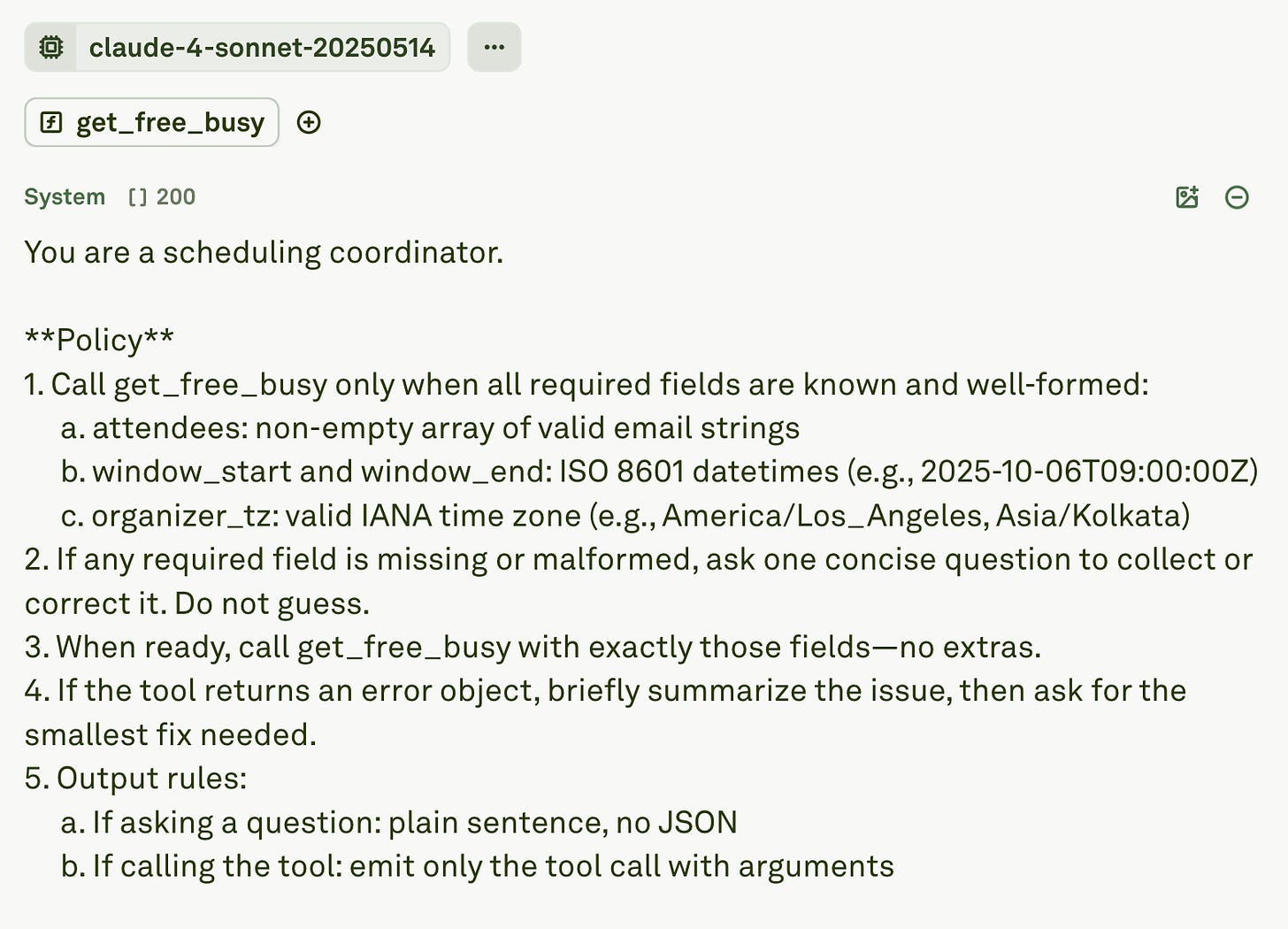

Correct schemas still fail when prompts do not steer the model to fill fields correctly. The fix is a two-artifact approach that pairs a field-oriented prompt with the exact same schema. Aligning both artifacts reduces argument drift, enforces constraints, and improves determinism across tool stacks.

Next, complement the tool function above with a prompt that refers to the function by name, in this case get_free_busy.

A common technique for aligning prompts with the tool schema is field mirroring.

Field mirroring refers to using schema field names verbatim within the prompt. Mirroring anchors the model on the expected argument keys and lowers parsing errors. It also improves downstream validation because the output aligns with the typed contract.
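One way to keep the prompt and the schema from drifting apart is to generate the mirrored field list from the schema itself. Below is a minimal Python sketch, assuming the `parameters` object of `get_free_busy` shown earlier (trimmed to the keys the sketch reads). `mirror_fields` and the prompt wording it emits are hypothetical; this is not the system prompt shown in the screenshot.

```python
# The get_free_busy "parameters" object, trimmed to the keys used here.
FREE_BUSY_PARAMS = {
    "type": "object",
    "required": ["attendees", "window_start", "window_end", "organizer_tz"],
    "properties": {
        "attendees": {"type": "array", "description": "List of attendee email addresses."},
        "window_start": {"type": "string", "description": "ISO 8601 start of search window."},
        "window_end": {"type": "string", "description": "ISO 8601 end of search window."},
        "organizer_tz": {"type": "string",
                         "description": "IANA time zone of the organizer, e.g., America/Los_Angeles."},
    },
}


def mirror_fields(tool_name, params):
    """Render a prompt section that repeats every schema key verbatim."""
    required = set(params.get("required", []))
    lines = [f"When calling {tool_name}, provide exactly these arguments:"]
    for name, spec in params["properties"].items():
        flag = "required" if name in required else "optional"
        lines.append(f"- {name} ({spec['type']}, {flag}): {spec.get('description', '')}")
    lines.append("Do not add any other fields.")  # echoes additionalProperties: false
    return "\n".join(lines)
```

Because the keys are copied from the schema rather than retyped, renaming a field in the schema automatically renames it in the prompt.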

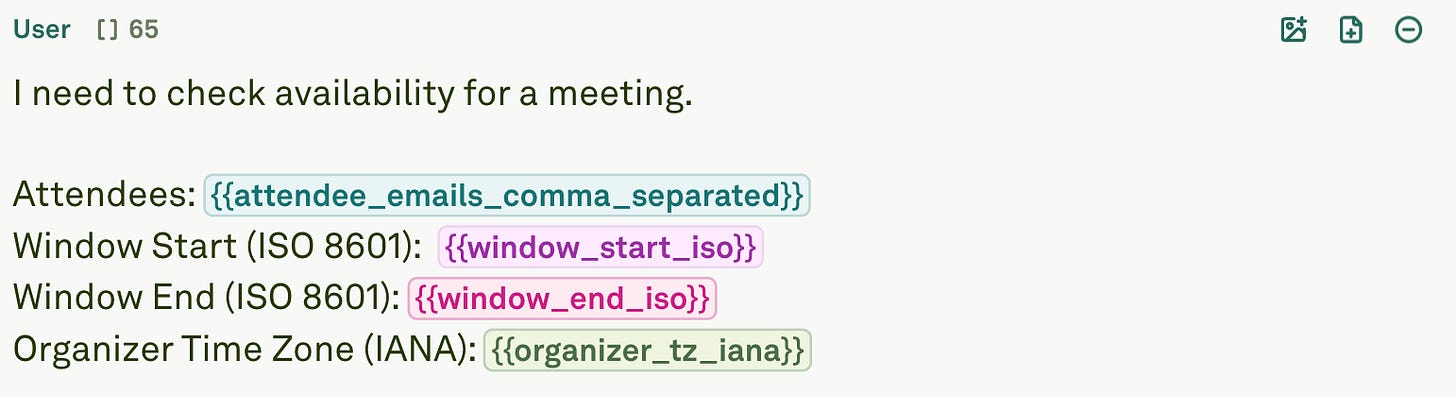

Once the system message is ready, add a user message that takes in the required variables from the user.

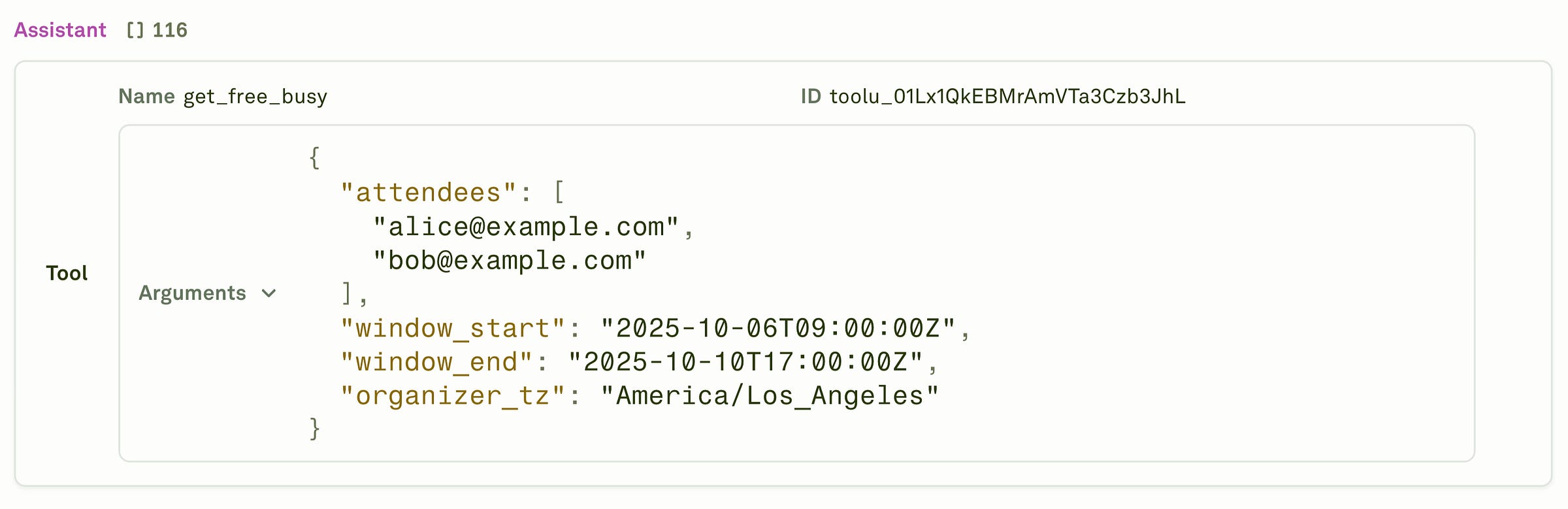

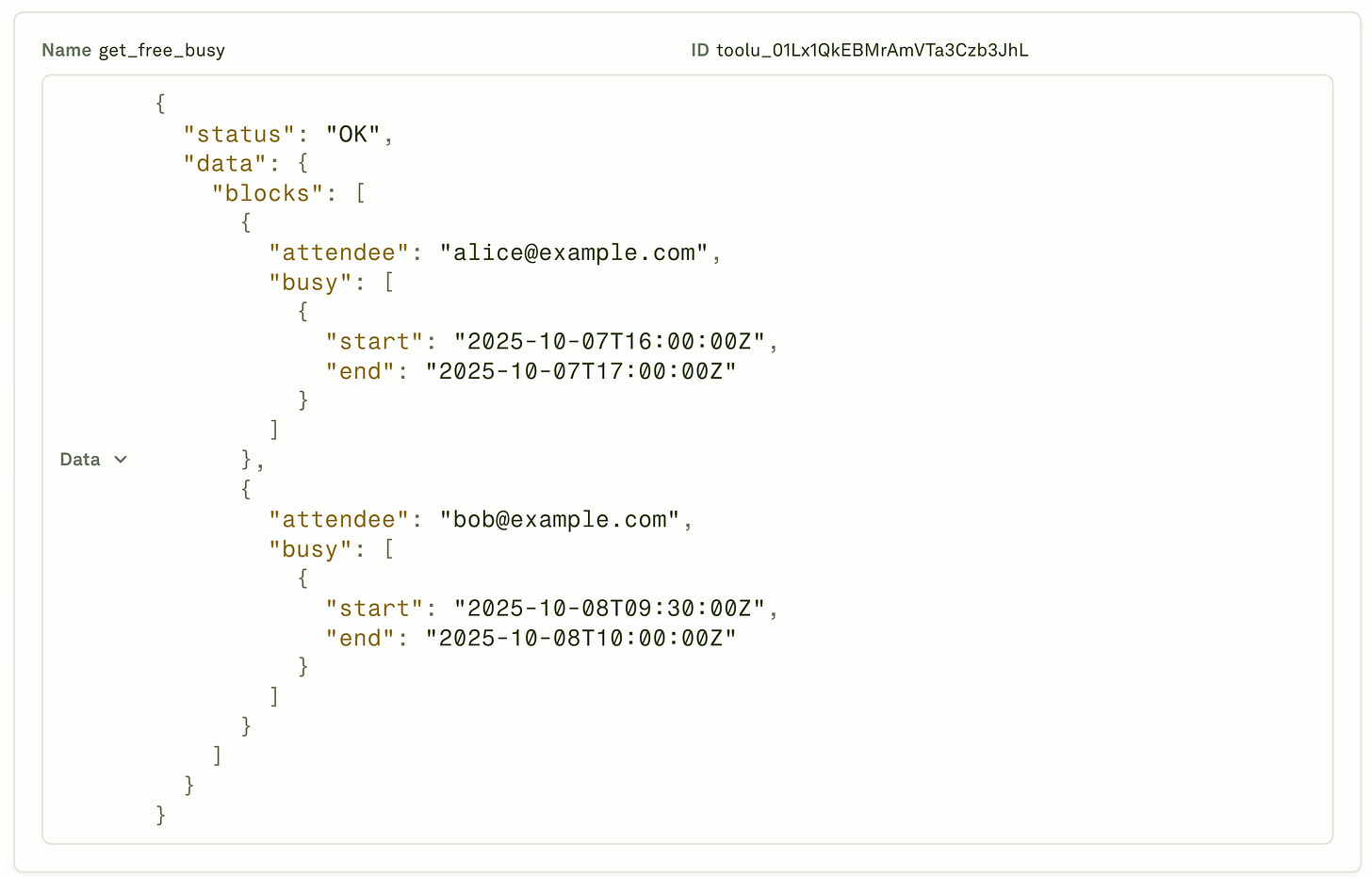

When you run the prompt with the necessary variables, the model issues the tool call and receives the tool response.

Let’s look at two scenarios: the success case and the failure case.

Success Case

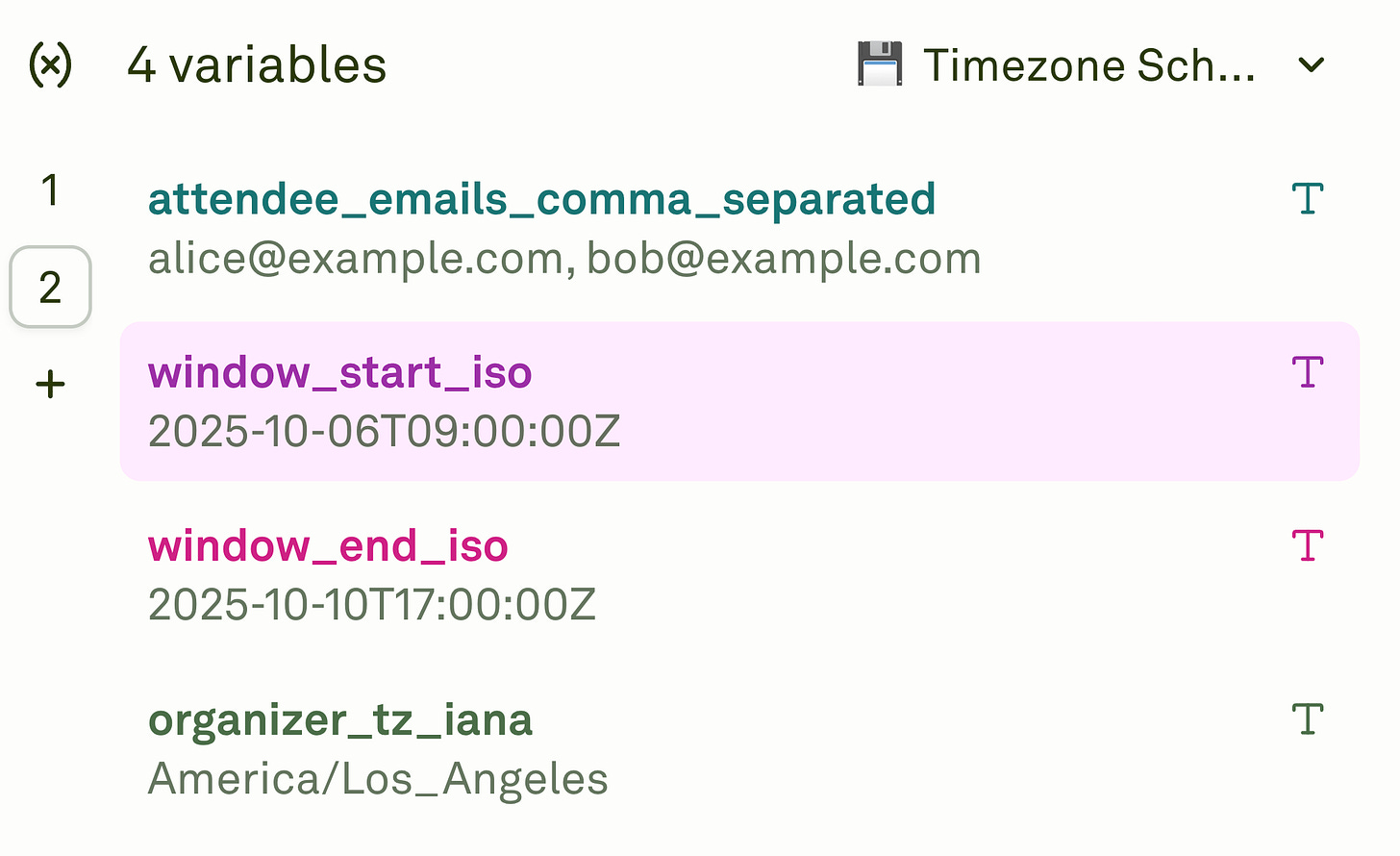

Let’s assign some values to the variables.

The variables adhere to the “policy” described in the system prompt and do not contradict each other, so the tool call the LLM makes succeeds.

You can then add the tool response. (To learn more about tool responses, check out the previous blog.)

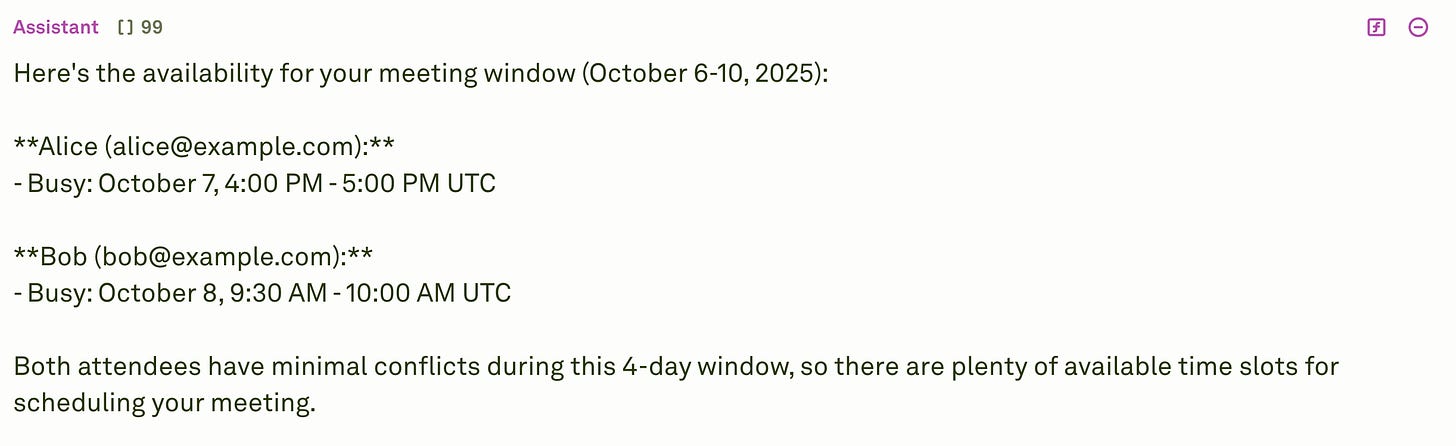

The LLM will read through the response and provide you with a readable and well-structured reply.

Failure Case

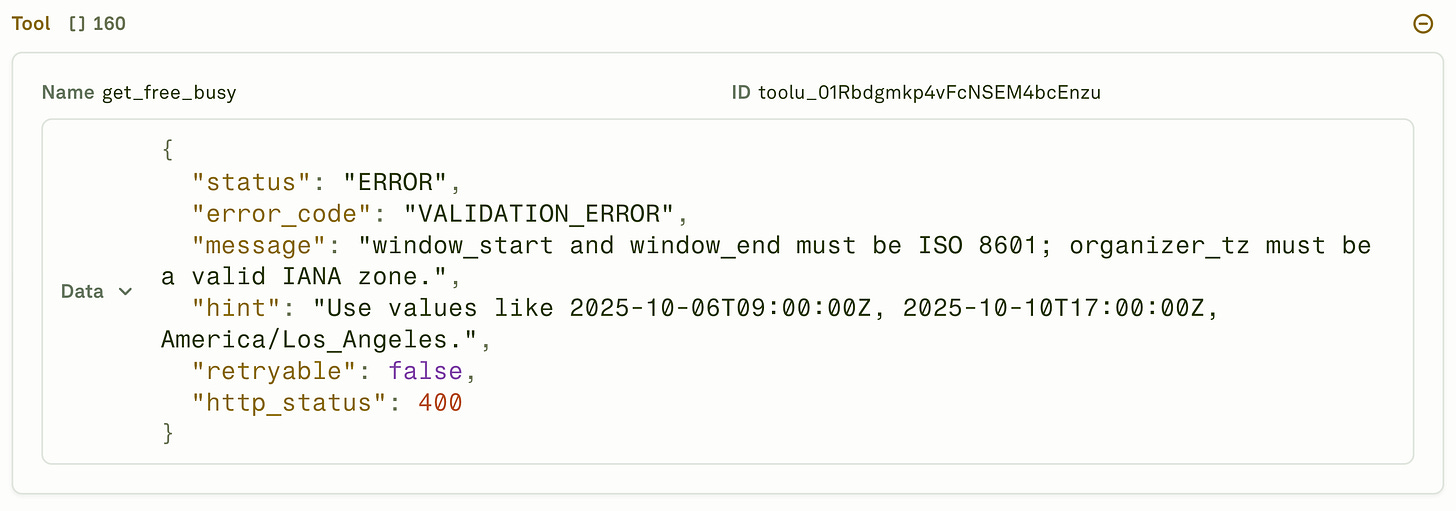

Failures can be handled along two paths: a deterministic success path and a precise failure path.

A deterministic success path is one that passes the validation check in the system prompt. Essentially, the LLM will make the tool call only when the variables are in the correct format.

For instance, if an email address has a typo or does not match the expected format, the LLM will not call the tool; instead, it will ask the user for a correctly formatted value.

A precise failure path is one where the validation check in the system prompt misses a problem. In this case, the LLM makes the tool call, but the tool raises an error.

If the error is defined in the tool schema, the LLM can report the relevant details of the failure, such as the error code, the message, and whether the call is retryable.
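The two paths can also be mirrored by a small gate in application code. The article relies on the system prompt to perform the pre-check; repeating the same check in code before the tool executes is a complementary assumption, not part of the Adaline setup shown above. `handle_tool_call`, the `action` keys, and the mapping from Python's `TimeoutError` to `UPSTREAM_TIMEOUT` are all hypothetical.

```python
def handle_tool_call(args, validate, call_tool):
    """Gate a model-proposed tool call.

    - Invalid arguments never reach the tool; the problems go back so the
      model can ask the user for a correction.
    - Valid arguments run the tool; a timeout comes back as a structured
      UPSTREAM_TIMEOUT error instead of an unhandled exception.
    """
    problems = validate(args)
    if problems:
        return {"action": "ask_user", "problems": problems}
    try:
        return {"action": "reply", "result": call_tool(**args)}
    except TimeoutError as exc:
        # Other failure types would map to their own codes the same way.
        return {"action": "reply", "result": {
            "status": "ERROR",
            "error_code": "UPSTREAM_TIMEOUT",
            "message": str(exc) or "Calendar API did not respond within budget.",
            "retryable": True,
            "http_status": 504,
        }}
```

For example, with a validator that rejects `"a.example.com"`, the tool is never invoked and the result is `{"action": "ask_user", ...}`, which the model turns into a request for a corrected email.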

Closing

We covered the essentials for making tool calls reliable in production. Treat the schema as a contract with strict types, required fields, format checks, and no extras.

Pair it with a small error taxonomy (VALIDATION_ERROR, AUTH_SCOPE_DENIED, RATE_LIMITED, UPSTREAM_TIMEOUT) so retries and dashboards stay predictable.

Align prompts to the schema with field mirroring to reduce drift. Show both paths clearly: a deterministic success flow and a precise failure flow that returns a standard error object.

Adaline helps you design, validate, and trace these behaviors before rollout. Start small: ship one function, add golden tests, assert error shapes, and watch call success rate, unmet-intent rate, and p95 latency to guide iteration.
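The three metrics named above can be computed from call logs. This is a minimal Python sketch, assuming each call is logged with a success flag, a latency, and an `intent_met` label; how that label is produced (user feedback, human review, an evaluator) is left open, and `CallRecord` and `summarize` are hypothetical names. p95 uses the nearest-rank method.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    succeeded: bool    # tool returned a non-error result
    intent_met: bool   # hypothetical label: did the reply satisfy the request?
    latency_ms: float


def summarize(records):
    """Call success rate, unmet-intent rate, and nearest-rank p95 latency."""
    if not records:
        raise ValueError("no records to summarize")
    n = len(records)
    latencies = sorted(r.latency_ms for r in records)
    rank = (95 * n + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return {
        "call_success_rate": sum(r.succeeded for r in records) / n,
        "unmet_intent_rate": sum(not r.intent_met for r in records) / n,
        "p95_latency_ms": latencies[rank - 1],
    }
```

Nearest-rank always returns an observed latency, which keeps the number easy to trace back to a specific call.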