Skills to Become an AI PM

A general guide to becoming an AI PM in 2025 and 2026.

Recently, a lot of people have been asking me these two questions: one, “how do I become an AI PM?”, and two, “how do I improve myself further to enhance my skills as an AI PM?”

So, I decided to write a comprehensive blog post answering these questions. There is a lot of open-source, free-to-use information out there, and I would like to point you to it with some additional insight.

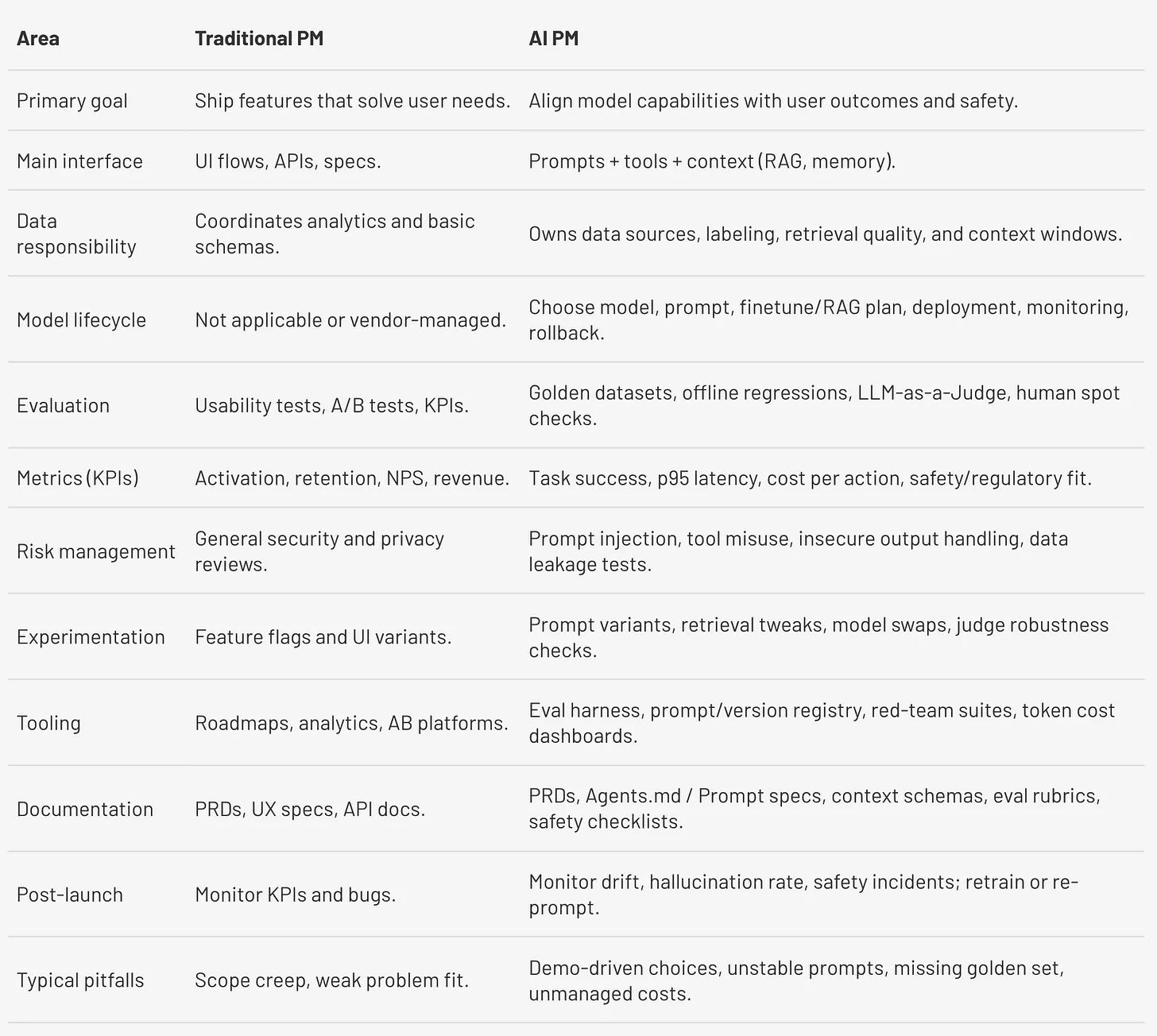

But before I begin, it is important to define what an AI PM is and how the role differs from that of a traditional PM.

An AI PM is someone who aligns user problems/feedback with the product’s capabilities. The product in this case is one built on top of AI [mostly LLM-based]. As such, the main tools that an AI PM uses to align these two [parties] are domain expertise and prompt engineering.

“In a more simplistic manner, AI PM possesses the users’ mindset and aligns the product to fit their needs and product value or mission.” – Source

So, where should you start? And if you are already an AI PM, what should you do?

I think the best place to start is learning the basics. These will be:

Understanding the LLMs

Prompt Engineering

Once you have covered the basics, move on to more advanced concepts.

Context Engineering

Tool Calling

Evaluation

Prototyping via Vibe coding

Learning and practically exploring the Agentic workflow

Basic Concepts

Now, let’s explore the basic concepts.

Since we are dealing with AI, it is important to understand which type of AI is being used to build the next generation of products. Generally, most products are built on top of LLMs. What we use today are multimodal LLMs that can process text, images, video, audio, etc. And sometimes the term AI is used interchangeably with LLM [which shouldn’t be the case].

But the LLM is the core technology behind most products today, which is why knowing the basics of LLMs helps.

When it comes to LLMs, you should learn and familiarize yourself with these concepts:

Phases of LLM development:

How is data prepared for training?

What is autoregressive training?

Training the pretrained LLM with [domain-specific] instructions

Post-training

Human preference

Reward model

Reinforcement Learning from Human Feedback (RLHF)

Reinforcement Learning with Verifiable Rewards (RLVR)

Inference

LLMs

Difference between Architecture and Model

Floating-point operation or FLOP

Train-time and test-time compute

How do LLMs reason?

Chain-of-thought

Tree-of-thought

llms.txt

Artificial General Intelligence and Artificial Super Intelligence

Also, if possible, the best way to learn about LLMs is to build one from scratch. This will help you become more familiar with the concepts in a more natural way.
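To make the idea of autoregressive generation concrete before you attempt a real model, here is a toy sketch. It is not an LLM: it assumes a tiny made-up corpus and simply counts which word follows which, then generates text one word at a time, each word predicted from the previous output. The names `train_bigram` and `generate` are illustrative only.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    counts: dict[str, Counter] = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_tokens: int = 5) -> list[str]:
    """Greedy autoregressive decoding: each new word depends on the last one produced."""
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:
            break  # the model has never seen anything after this word
        output.append(followers.most_common(1)[0][0])
    return output


# Made-up training text, purely for illustration.
corpus = "the user asks a question and the model answers the user"
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_tokens=3)))  # the user asks a
```

A real LLM replaces the counting table with a neural network and words with tokens, but the generation loop has the same shape: predict, append, repeat.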

Once you have familiarized yourself with these concepts, you can then learn how to prompt these models, that is, prompt engineering.

Prompt engineering is the skill of steering LLMs to provide user-centric answers, and it is extremely important. The user in this case is you. If you don’t provide the right prompts, the LLM will not provide satisfactory answers in response. Then three things will happen:

You will get frustrated trying to explain to the LLM what your exact needs are.

You will waste your time.

You will burn tokens.

A prompt engineer must think thoroughly about what they want. One effective way is to write on a piece of paper what you want. This way, you will spend time much more thoughtfully and get a logical flow and structure for the idea.

In my experience, the first prompt matters the most. If your first prompt isn’t clear and articulate, then the rest of the conversation with the LLM can get worse. I am not saying it always will, but I have experienced it.

Now, let’s move into advanced concepts.

Advanced Concepts

In this section, we will cover advanced concepts that build on the basic ones. The first is the different types of prompting methods. There are a lot of them, and as an AI PM, you should eventually learn all of them, but there are a few that you must learn first.

Here is a list of prompting methods:

Among the nine prompting methods above, you must definitely learn and use One-shot, Few-shot, and Decomposed Prompting. These prompting techniques will enable you to get the desired output:

From a single example, when given the proper instructions.

By giving a few [2 or 3] examples within the prompt.

By breaking complex tasks into simpler, manageable sub-tasks.
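The three techniques above can be sketched as plain string builders. This is a minimal illustration, not a standard library or an official format: the function names, the `Input:`/`Output:` layout, and the sample feedback are all assumptions made for the example.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a prompt with worked examples; passing a single example makes it one-shot."""
    lines = [instruction, ""]
    for user_input, expected in examples:
        lines += [f"Input: {user_input}", f"Output: {expected}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


def decomposed_prompts(task: str, sub_tasks: list[str]) -> list[str]:
    """Split a complex task into one prompt per sub-task, to be sent in order."""
    total = len(sub_tasks)
    return [
        f"Overall task: {task}\nStep {i} of {total}: {step}"
        for i, step in enumerate(sub_tasks, start=1)
    ]


# Hypothetical examples for a feedback-tagging feature.
examples = [
    ("The app keeps logging me out", "bug"),
    ("Please add a dark mode", "feature request"),
]
instruction = "Label each piece of user feedback."
query = "Sync fails on slow networks"

one_shot = few_shot_prompt(instruction, examples[:1], query)
few_shot = few_shot_prompt(instruction, examples, query)
steps = decomposed_prompts(
    "Write a launch plan for the running coach app",
    ["List the target users", "Draft three key messages", "Propose a two-week timeline"],
)
print(few_shot)
```

Printing `few_shot` shows the instruction, the two worked examples, and the new input left open for the model to complete.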

Once you are comfortable with prompt engineering and see that you can steer the LLM to the desired results with fewer follow-up prompts, you can move on to the next concept: context engineering.

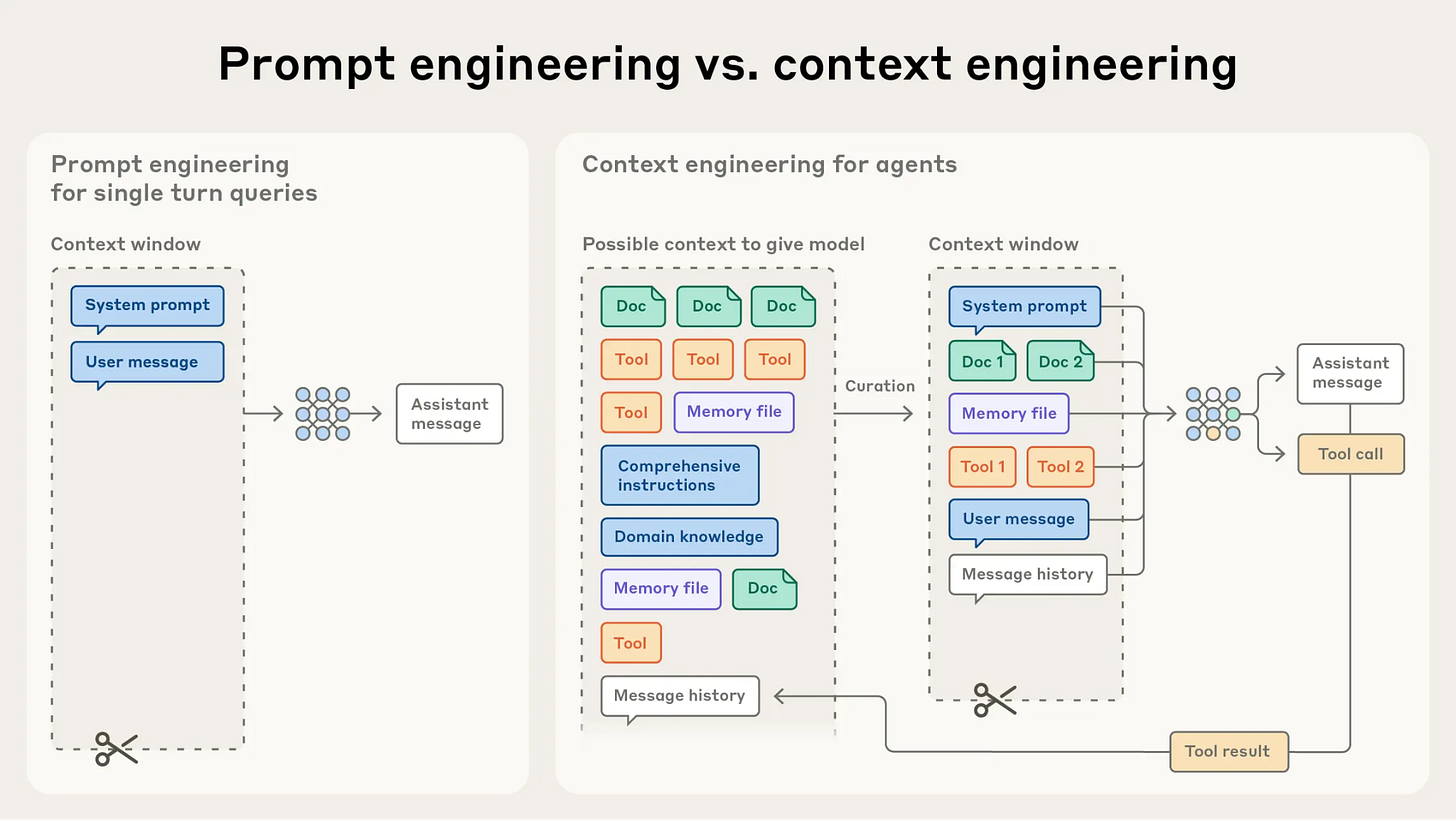

Context engineering, as Andrej Karpathy describes it, is “the delicate art and science of filling the context window with just the right information for the next step.”

It is a progression of prompt engineering and allows the LLM to get relevant information from vector databases, Model Context Protocol (MCP) servers, retrieval-augmented generation (RAG) pipelines, memory files [like AGENTS.md and CLAUDE.md], the system prompt, etc.

As an AI PM, context is all you need. For instance, you might need to connect the Figma design with a product description, along with a prompt that guides the LLM to build the desired feature. With context engineering, you give the prompt the power to leverage information that leads to better results.
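One way to picture this is as a function that assembles the prompt from several sources while respecting a size limit. The sketch below is an assumption-heavy illustration: `build_context` is a hypothetical helper, word counts stand in for real token counts, and the bracketed section labels are invented for readability.

```python
def build_context(
    system_prompt: str,
    memory_notes: list[str],
    retrieved_docs: list[str],
    user_request: str,
    max_words: int = 60,
) -> str:
    """Assemble the prompt in priority order, skipping optional pieces that overflow the budget."""
    # The system prompt and the request are always included.
    required = [f"[system]\n{system_prompt}", f"[request]\n{user_request}"]
    used = sum(len(part.split()) for part in required)

    # Memory and retrieved documents are optional: add them only while they fit.
    optional = [f"[memory]\n{note}" for note in memory_notes]
    optional += [f"[document]\n{doc}" for doc in retrieved_docs]
    kept = []
    for part in optional:
        cost = len(part.split())  # word count as a rough stand-in for tokens
        if used + cost <= max_words:
            kept.append(part)
            used += cost
    return "\n\n".join([required[0], *kept, required[1]])


context = build_context(
    system_prompt="You are a product assistant for a running coach app.",
    memory_notes=["Users are beginner runners."],
    retrieved_docs=[
        "Figma frame: onboarding has three steps.",
        " ".join(["filler"] * 100),  # an oversized document that should be dropped
    ],
    user_request="Draft the onboarding screen copy.",
    max_words=40,
)
print(context)
```

The oversized document is left out, while the short memory note and the Figma summary make it in. Deciding what gets priority when space runs out is the core of the design work.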

Next up is tool calling.

Tool calling allows an LLM to use an external API or piece of software to complete a response or get the desired result.

All of these are extensions of prompt engineering. In other words, design your prompt in such a way that the LLM gets the necessary context and selects the right tool to get the job done.
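Under the hood, tool calling usually means the model emits a structured request and your application runs the matching function. Here is a minimal offline sketch of that dispatch step. The JSON shape, the `get_weather` tool, and its canned data are assumptions for illustration; real providers each define their own request format.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    fake_data = {"Berlin": "12C, light rain", "Pune": "29C, clear"}
    return fake_data.get(city, "unknown")


# Registry of tools the application is willing to run on the model's behalf.
TOOLS = {"get_weather": get_weather}


def run_tool_call(model_output: str) -> str:
    """Parse a JSON tool request from the model and run the matching function."""
    request = json.loads(model_output)
    tool = TOOLS.get(request["name"])
    if tool is None:
        return f"error: unknown tool {request['name']}"
    return tool(**request["arguments"])


# Simulated model output; in a real product this string comes from the LLM.
simulated_model_output = '{"name": "get_weather", "arguments": {"city": "Berlin"}}'
result = run_tool_call(simulated_model_output)
print(result)  # 12C, light rain
```

The result is then passed back to the model so it can finish its answer. The registry also acts as an allow-list: the model can only trigger what you have explicitly exposed.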

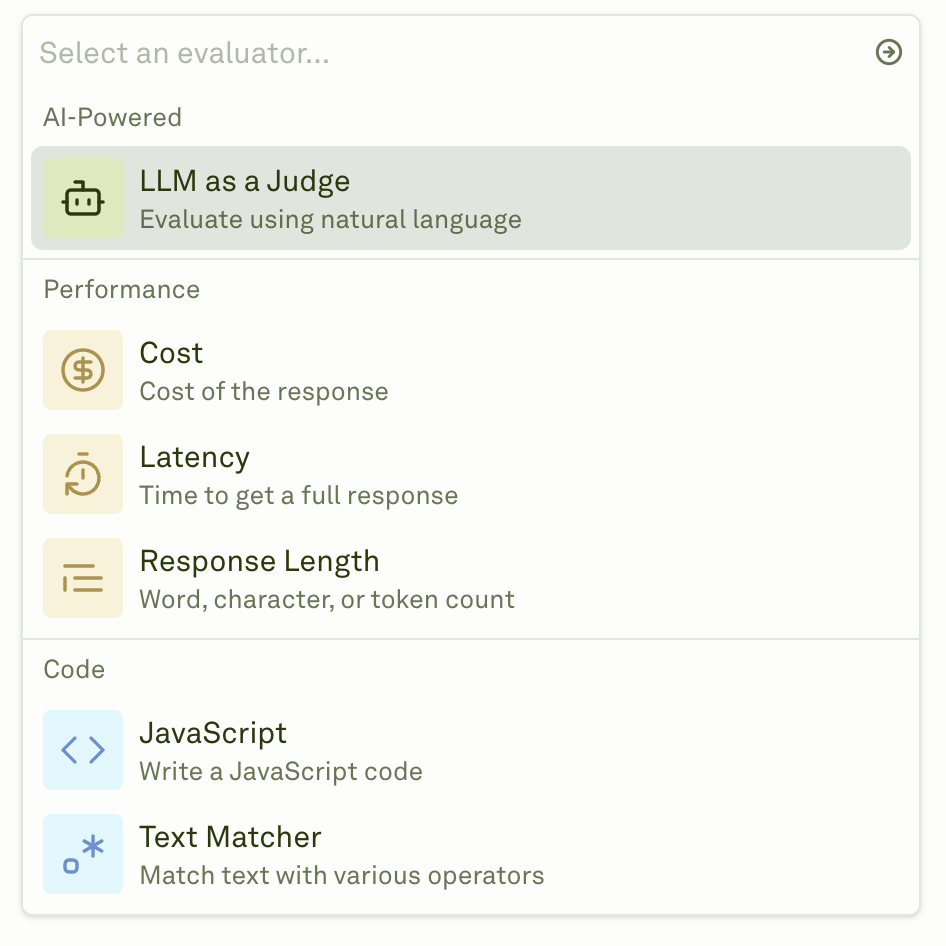

But how do you know that the prompt is performing well? This is why you should know how to evaluate your prompts.

Evaluation is an important skill that you, as an AI PM, should have. Evaluation lets you understand where the prompt is failing.

Sometimes you have one single prompt template that your product relies on. With evaluation, you can test the same prompt on multiple test cases. This allows you to understand which test case can break your prompt.
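A small evaluation harness for a single prompt template might look like the sketch below. Everything here is a stand-in: `stub_model` replaces a real LLM call with a naive keyword rule so the example runs offline, and the template and test cases are invented.

```python
PROMPT_TEMPLATE = "Classify the sentiment of this feedback as positive or negative: {feedback}"


def stub_model(prompt: str) -> str:
    """Placeholder for a real LLM call; a naive keyword rule keeps the example offline."""
    feedback = prompt.split(": ", 1)[1].lower()
    return "positive" if "love" in feedback or "great" in feedback else "negative"


TEST_CASES = [
    {"feedback": "I love the new pacing chart", "expected": "positive"},
    {"feedback": "The app crashes on every run", "expected": "negative"},
    {"feedback": "Great, it lost my data again", "expected": "negative"},  # sarcasm
]


def evaluate(template: str, model, cases: list[dict]) -> tuple[float, list[dict]]:
    """Run every test case through the same template and collect the failures."""
    failures = []
    for case in cases:
        answer = model(template.format(feedback=case["feedback"]))
        if answer != case["expected"]:
            failures.append({**case, "got": answer})
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures


pass_rate, failures = evaluate(PROMPT_TEMPLATE, stub_model, TEST_CASES)
print(f"pass rate: {pass_rate:.0%}")
for failure in failures:
    print("FAILED:", failure["feedback"], "-> got", failure["got"])
```

Here the sarcastic case is the one that breaks, which is exactly the kind of finding that tells you what to change in the prompt or which cases to flag for review.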

Once you are familiar with all these concepts, you should make a prototype. While making a prototype, try vibe coding. This is what I will propose:

Research an idea. For example, a running coach.

Take a notebook and spend some time writing down what the app should do. Essentially, write down a couple of features. Expand the list later.

Design a simple UI in Figma or Canva.

Spend some time designing a prompt. Remember, the first prompt sets the tone most of the time. After that, the follow-up conversation is much more productive.

Select one LLM of your choice. I would recommend ChatGPT as it is versatile and budget-friendly.

Paste and run the prompt with the UI design.

Now, start vibing with the LLM: keep adding details and features as you please.

Also, if you find an error or you want to change anything, just prompt the LLM.

Vibe coding is great for prototyping and creating MVPs.

I think these are some of the important concepts that you must learn to become an AI PM.

However, there is still one concept you definitely need to learn. And that is Agents.

Agents are systems that can observe the environment, make decisions, and take action to get rewards. In an agentic workflow, a human submits a query through an interface. The LLM then engages with the environment through iterative cycles. It clarifies requirements, searches files, writes code, runs tests, and refines outputs until tasks are completed successfully. This persistent, goal-directed behavior distinguishes agents from traditional AI systems.
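The iterative cycle described above can be sketched as a simple loop. This is a toy illustration under stated assumptions: `stub_policy` stands in for the LLM's decision-making, `act` is a hypothetical environment with canned observations, and the action names are invented.

```python
def stub_policy(goal: str, history: list[str]) -> str:
    """Stands in for the LLM: picks the next action based on what has happened so far."""
    plan = ["search_files", "write_code", "run_tests"]
    for action in plan:
        if not any(entry.startswith(action) for entry in history):
            return action
    return "finish"


def act(action: str) -> str:
    """Hypothetical environment: returns a canned observation for each action."""
    observations = {
        "search_files": "found coach.py",
        "write_code": "patched coach.py",
        "run_tests": "3 passed",
    }
    return observations[action]


def run_agent(goal: str, max_steps: int = 10) -> list[str]:
    """Decide, act, observe, and repeat until the policy says the task is done."""
    history: list[str] = []
    for _ in range(max_steps):
        action = stub_policy(goal, history)
        if action == "finish":
            break
        observation = act(action)
        history.append(f"{action} -> {observation}")
    return history


for entry in run_agent("Add a weekly mileage summary"):
    print(entry)
```

The `max_steps` cap matters in practice: without a limit, an agent that never reaches its goal keeps looping and keeps spending tokens.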

Today, almost all major LLMs can be used agentically. So, it is important to learn and understand how agents work. The best way to learn about agents is to pick a tool and create a workflow.

Some of the tools that I would recommend are:

n8n

CrewAI

Claude Code by Anthropic

AgentKit by OpenAI

Visual Studio Code

Cursor

In conjunction with agents, learn about the Model Context Protocol, or MCP. It is of great help. Again, read about MCP and learn more by using it in practice. I would recommend downloading and using Cursor, Claude Desktop, and Claude Code to learn MCP.

Check out this course on MCP: Build your own MCP server and connect it to Cursor

These are what I think are some of the most important concepts that you should learn to become an AI PM.